Hi Chris,

Here's the info you requested. First, the VM config:

root@pve1:~# qm config 103 --current

agent: 1

bios: ovmf

boot: order=scsi0;ide0;net0

cores: 2

cpu: x86-64-v2-AES

efidisk0: Storage_NFS:103/vm-103-disk-2.qcow2,efitype=4m,pre-enrolled-keys=1,size=528K

ide0: none,media=cdrom

lock: backup

machine: pc-q35-8.1

memory: 8192

meta: creation-qemu=8.1.5,ctime=1716035235

name: Windows11

net0: virtio=BC:24:11:C4:CC:93,bridge=vmbr0,firewall=1

numa: 0

ostype: win11

scsi0: Storage_NFS:103/vm-103-disk-3.qcow2,discard=on,iothread=1,size=64G

scsihw: virtio-scsi-single

smbios1: uuid=8231ea81-9d77-40e4-bb26-d3be1e1c7723

sockets: 1

tpmstate0: Storage_NFS:103/vm-103-disk-1.raw,size=4M,version=v2.0

vga: virtio

vmgenid: 3c399401-fec8-4d8f-b7d3-6942bc680005

The logs on the PBS:

root@pbs:~# journalctl --since "30 min ago" > journal.txt

Sep 18 09:49:45 pbs proxmox-backup-proxy[874]: rrd journal successfully committed (33 files in 0.009 seconds)

Sep 18 10:12:28 pbs sshd-session[12006]: Accepted password for root from 192.168.2.110 port 58217 ssh2

Sep 18 10:12:28 pbs sshd-session[12006]: pam_unix(sshd:session): session opened for user root(uid=0) by root(uid=0)

Sep 18 10:12:28 pbs systemd-logind[682]: New session 2 of user root.

Sep 18 10:12:28 pbs systemd[1]: Created slice user-0.slice - User Slice of UID 0.

Sep 18 10:12:28 pbs systemd[1]: Starting user-runtime-dir@0.service - User Runtime Directory /run/user/0...

Sep 18 10:12:28 pbs systemd[1]: Finished user-runtime-dir@0.service - User Runtime Directory /run/user/0.

Sep 18 10:12:28 pbs systemd[1]: Starting user@0.service - User Manager for UID 0...

Sep 18 10:12:28 pbs (systemd)[12012]: pam_unix(systemd-user:session): session opened for user root(uid=0) by root(uid=0)

Sep 18 10:12:28 pbs systemd-logind[682]: New session 3 of user root.

Sep 18 10:12:28 pbs systemd[12012]: Queued start job for default target default.target.

Sep 18 10:12:28 pbs systemd[12012]: Created slice app.slice - User Application Slice.

Sep 18 10:12:28 pbs systemd[12012]: Reached target paths.target - Paths.

Sep 18 10:12:28 pbs systemd[12012]: Reached target timers.target - Timers.

Sep 18 10:12:28 pbs systemd[12012]: Listening on dirmngr.socket - GnuPG network certificate management daemon.

Sep 18 10:12:28 pbs systemd[12012]: Listening on gpg-agent-browser.socket - GnuPG cryptographic agent and passphrase cache (access for web browsers).

Sep 18 10:12:28 pbs systemd[12012]: Listening on gpg-agent-extra.socket - GnuPG cryptographic agent and passphrase cache (restricted).

Sep 18 10:12:28 pbs systemd[12012]: Starting gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation)...

Sep 18 10:12:28 pbs systemd[12012]: Starting gpg-agent.socket - GnuPG cryptographic agent and passphrase cache...

Sep 18 10:12:28 pbs systemd[12012]: Listening on keyboxd.socket - GnuPG public key management service.

Sep 18 10:12:28 pbs systemd[12012]: Starting ssh-agent.socket - OpenSSH Agent socket...

Sep 18 10:12:28 pbs systemd[12012]: Listening on gpg-agent.socket - GnuPG cryptographic agent and passphrase cache.

Sep 18 10:12:28 pbs systemd[12012]: Listening on ssh-agent.socket - OpenSSH Agent socket.

Sep 18 10:12:28 pbs systemd[12012]: Listening on gpg-agent-ssh.socket - GnuPG cryptographic agent (ssh-agent emulation).

Sep 18 10:12:28 pbs systemd[12012]: Reached target sockets.target - Sockets.

Sep 18 10:12:28 pbs systemd[12012]: Reached target basic.target - Basic System.

Sep 18 10:12:28 pbs systemd[12012]: Reached target default.target - Main User Target.

Sep 18 10:12:28 pbs systemd[12012]: Startup finished in 182ms.

Sep 18 10:12:28 pbs systemd[1]: Started user@0.service - User Manager for UID 0.

Sep 18 10:12:28 pbs systemd[1]: Started session-2.scope - Session 2 of User root.

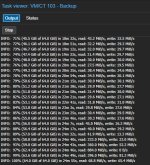

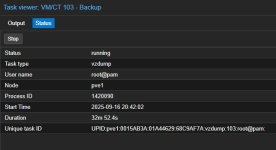

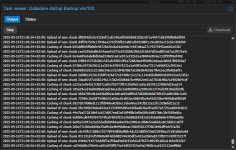

As for the backup task log on the PBS: since the system freezes, I cannot download it. A screenshot would only show the chunks, after which the output stops with no further messages. I have to reboot the PBS to regain control.

There are no other tasks running.

If there are more files you would like me to check, please let me know.

I tried an Ubuntu VM with a 64 GB disk and that worked fine, so the problem seems to be specific to backing up the Windows 11 VM with its 64 GB disk. Just a thought: could the small TPM disk be the problem here?