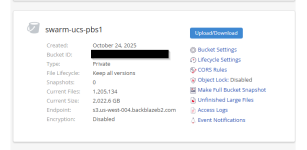

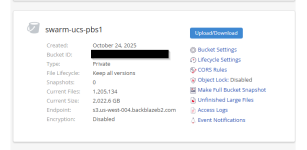

On PBS 4.0.18 using S3 storage (Backblaze B2), I've had issues with garbage collection not deleting chunks.

The prune and GC jobs both run fine and report that everything was deleted correctly; however, I do not see any change in B2.

I'm not using any versioning or object locking.

I made that mistake during the PBS beta and thought it was the cause (I've since rebuilt and re-synced).

I've also made sure the S3 quirk 'Skip If-None-Match header' is set.

I've deleted a VM I no longer need (and set the GC access time to a low number to ensure it gets cleaned up).

In B2, the bucket size did not change, and the total file count actually increased.
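
For anyone who wants to reproduce the B2 numbers outside the web UI, a rough sketch like the one below could be run before and after a GC run. It rests on assumptions that are not from my setup above: that the bucket is reachable through B2's S3-compatible API using `boto3`, and that the endpoint supports the standard S3 `ListObjectVersions` call. The function names and the endpoint, bucket, and credential parameters are placeholders, not anything PBS provides.

```python
from collections import Counter


def tally_versions(pages):
    """Summarize pages shaped like S3 ListObjectVersions responses.

    Counts current objects, non-current (older) versions, and delete
    markers, plus the bytes held by current and non-current versions.
    """
    counts = Counter()
    for page in pages:
        for version in page.get("Versions", []):
            kind = "current" if version.get("IsLatest") else "noncurrent"
            counts[kind] += 1
            counts[kind + "_bytes"] += version.get("Size", 0)
        counts["delete_markers"] += len(page.get("DeleteMarkers", []))
    return dict(counts)


def bucket_pages(endpoint_url, bucket, key_id, secret):
    """Yield listing pages from the bucket (placeholder parameters)."""
    import boto3  # third-party; imported lazily so tally_versions works without it

    client = boto3.client(
        "s3",
        endpoint_url=endpoint_url,
        aws_access_key_id=key_id,
        aws_secret_access_key=secret,
    )
    paginator = client.get_paginator("list_object_versions")
    return paginator.paginate(Bucket=bucket)
```

Calling `tally_versions(bucket_pages(...))` once before and once after GC and comparing the two dictionaries would show whether the file count is growing in current objects, older versions, or delete markers.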

I double-checked the logs in journalctl and saw nothing noteworthy for this GC run.

Any assistance would be greatly appreciated.

I can upload any additional logs as needed.

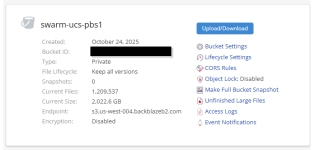

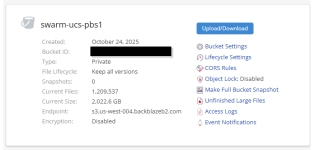
