In the process of backing up my server (oh the irony) I managed to screw up my configuration, so I can't access my data anymore.

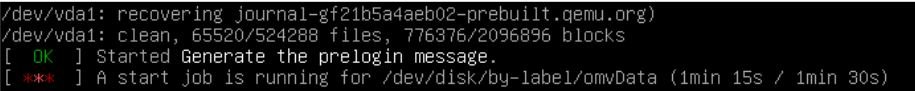

So I've gathered that my problem probably lies in /etc/pve/storage.cfg, as this file is overwritten when you join a cluster, which I did for testing purposes.

I am building a new, more energy-efficient server at the moment that is supposed to replace my existing server.

Well, you should really read the documentation before you click on anything. Lesson learned.

My setup:

Server with 5x 1 TB HDDs in a ZFS pool + 1x SSD for the OS

On the server there's an OpenMediaVault VM running (ID 100), sharing files via SMB.

Kernel Version Linux 5.4.65-1-pve #1 SMP PVE 5.4.65-1 (Mon, 21 Sep 2020 15:40:22 +0200)

PVE Manager Version pve-manager/6.2-6/ee1d7754

The node is called "pve", the ZFS pool is called "Local1" (and is alive and happy, according to the Proxmox GUI).

OMV complains on boot that it can't find its data volume, which used to be the shared storage.

Code:

Jan 10 13:09:17 omgomv monit[602]: 'filesystem_srv_dev-disk-by-label-omvData' unable to read filesystem '/srv/dev-disk-by-label-omvData' state

Jan 10 13:09:17 omgomv monit[602]: 'filesystem_srv_dev-disk-by-label-omvData' trying to restart

Jan 10 13:09:17 omgomv monit[602]: 'mountpoint_srv_dev-disk-by-label-omvData' status failed (1) -- /srv/dev-disk-by-label-omvData is not a mountpoint

Jan 10 13:09:47 omgomv monit[602]: Filesystem '/srv/dev-disk-by-label-omvData' not mounted

The data is on "vm-100-disk-0", the raw disk image which can be found under

/dev/zvol/Local1

(I can see it in the node's terminal, so it should be accessible.)
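To double-check that from the node's shell, something like the following could be used. It is only a sketch: `check_zvol` is a helper name made up for this post, and the device path is assumed from the pool and disk names given above.

```shell
#!/bin/sh
# Sketch: confirm that the VM's zvol device link is visible on the node.
# POOL and DISK are taken from this post; adjust them if yours differ.
POOL="Local1"
DISK="vm-100-disk-0"

# check_zvol DIR NAME: report whether DIR/NAME exists (hypothetical helper).
check_zvol() {
    if [ -e "$1/$2" ]; then
        echo "found: $1/$2"
    else
        echo "missing: $1/$2"
        return 1
    fi
}

# On the node itself, `zfs list -t volume -r Local1` lists the same volumes.
check_zvol "/dev/zvol/$POOL" "$DISK" || true
```

If the device shows up here but the VM still cannot see its disk, that would point at the storage definition rather than at the pool itself.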

Right now my storage.cfg looks like this:

Which is basically the default config, as far as I can tell.

In the guide for joining a cluster it also states that you will have to re-add your storage locations manually, but I can't find an example configuration that would fit that use case.

Does anyone have an idea what exactly should be written in storage.cfg?

Or maybe someone running OpenMediaVault on Proxmox could share their storage.cfg?
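For reference, here is a rough sketch of the kind of entry in question, following the general shape of a `zfspool` definition in storage.cfg. It is untested against this setup. The storage ID `Local1` is an assumption (it would need to match whatever ID the disk line of VM 100 refers to), and `zfspool_stanza` is a helper name made up for illustration. The script only prints the stanza and does not touch /etc/pve/storage.cfg.

```shell
#!/bin/sh
# Sketch: print a candidate zfspool stanza for /etc/pve/storage.cfg.
# STORAGE_ID is an assumption; POOL is the pool name from this post.
STORAGE_ID="Local1"
POOL="Local1"

# zfspool_stanza ID POOL: emit a "type: id" header plus indented options.
zfspool_stanza() {
    printf 'zfspool: %s\n' "$1"
    printf '\tpool %s\n' "$2"
    # images = VM disks, rootdir = container volumes
    printf '\tcontent images,rootdir\n'
}

zfspool_stanza "$STORAGE_ID" "$POOL"
```

Running `qm config 100` on the node shows the VM's disk lines, and the part before the colon in such a line is the storage ID that the stanza header would have to match.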

I am really lost at the moment.

Proxmox also gives me this error over and over again during boot:

Code:

Jan 10 12:50:41 pve rrdcached[917]: handle_request_update: Could not read RRD file.

Jan 10 12:50:41 pve pmxcfs[930]: [status] notice: RRDC update error /var/lib/rrdcached/db/pve2-node/pve: -1

Jan 10 12:50:41 pve pmxcfs[930]: [status] notice: RRD update error /var/lib/rrdcached/db/pve2-node/pve: mmaping file '/var/lib/rrdcached/db/pve2-node/pve': Invalid argument

Not sure if that's relevant or not for this problem.

If anyone can help me: Thanks so much in advance!
