Hi,

I have a Windows Server 2022 guest running on Proxmox 8.3.5. When the guest OS performs heavy storage I/O, data gets corrupted and Windows logs the warning "Reset to device, \Device\RaidPort2, was issued." The storage I/O is basically data received from remote hosts, because this is an offsite backup server. So the load is "high", but this is not a database server or another application server running heavy local tasks.

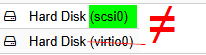

I found a lot of threads about this kind of problem with previous driver versions, and it was supposed to be solved by the new 0.1.271.1 version, which I installed yesterday. I still have the same problem, and the only way to keep the server running is to switch every disk to SATA.

Any ideas? This makes Proxmox simply unusable as a platform for Windows guests, which seems really strange IMHO.

Physical storage is based on a pool of local disks and a pool of local SSDs, with ZFS volumes. VM disks (raw format) show the same problem on both pools.

thanks
