Hi.

I have a 3-node Proxmox 8.2 cluster. When I shut down one of the nodes (for maintenance, for example), I receive all these replication emails about the containers on that node.

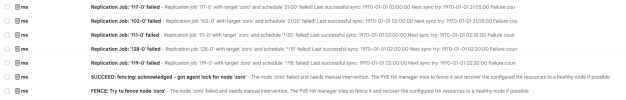

The emails contain the following content:

```text
Replication job '112-0' with target 'franky' and schedule '21:00' failed!
Last successful sync: 1970-01-01 02:00:00
Next sync try: 1970-01-01 21:05:00
Failure count: 1

Error:
command '/usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=franky' -o 'UserKnownHostsFile=/etc/pve/nodes/franky/ssh_known_hosts' -o 'GlobalKnownHostsFile=none' root@192.168.81.96 -- pvesr prepare-local-job 112-0 local-zfs:subvol-112-disk-0 --last_sync 0' failed: exit code 255
```

I do not understand why this happens, nor why the dates are from 1970. Any ideas? Thanks.
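One possible clue to the dates is the `--last_sync 0` argument in the failing command. Assuming these fields are Unix timestamps (an assumption, not something the email states), 0 is the epoch, 1970-01-01 00:00:00 UTC, which a node set to a UTC+2 timezone would print as 1970-01-01 02:00:00. A minimal Python sketch of that conversion; the helper `format_local` is hypothetical and is not Proxmox code:

```python
from datetime import datetime, timedelta, timezone


def format_local(ts: int, utc_offset_hours: int) -> str:
    """Render a Unix timestamp in a fixed-offset local timezone.

    Hypothetical helper for illustration only; not part of Proxmox.
    """
    tz = timezone(timedelta(hours=utc_offset_hours))
    return datetime.fromtimestamp(ts, tz=tz).strftime("%Y-%m-%d %H:%M:%S")


# Assumption: the node runs in a UTC+2 timezone (e.g. CEST).
# 0 is the value passed as --last_sync in the failing command.
print(format_local(0, 2))  # prints 1970-01-01 02:00:00
```

If that reading is right, "Last successful sync: 1970-01-01 02:00:00" would simply mean no recorded successful sync (timestamp 0), not a real date.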