Hi,

I have a 3-node system, all Dell 610 servers with 128 GB RAM and 3 TB HD on ZFS.

I had started several VMs on all 3 servers (2 or 3 each), each VM replicating to the other 2 servers, and also set up HA for all the VMs in a circular mode: VMs on 1 would HA to 2 and then 3, VMs on 2 would HA to 3 and then 1, and VMs on 3 would HA to 1 and then 2.
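To make the circular layout concrete, here is a rough sketch of the failover order I mean. It is only an illustration, not Proxmox configuration: the function name `failover_order` is made up, and the node name `vm2` is an assumption (only `vm1` and `vm3` appear in my logs).

```python
def failover_order(nodes, home):
    """Return the other nodes in circular order, starting right after `home`."""
    i = nodes.index(home)
    # Walk around the ring once, skipping the home node itself.
    return [nodes[(i + k) % len(nodes)] for k in range(1, len(nodes))]


# Assumed node names; 'vm2' is a guess, it does not appear in the logs.
nodes = ["vm1", "vm2", "vm3"]

for node in nodes:
    print(node, "->", failover_order(nodes, node))
```

So a VM whose home is the first node prefers the second node and then the third, and so on around the ring.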

Today when I did my daily check-up, all the VMs had moved off node 1. I looked around for the reason but could not find anything, other than that the server had rebooted. I only saw this on the server's iDRAC, in the "boot capture" (nothing weird there, and still no reason why it rebooted).

When I checked the 'replication' of the VMs that had been on server 1, I saw errors replicating back to 1.

I disabled the replications and gave the server a clean reboot.

Once it was up, I tried setting up the replications again, but they fail with a cryptic error.

Can anyone shed some light on this?

=========================================

Virtual Environment 5.0-23/af4267bf

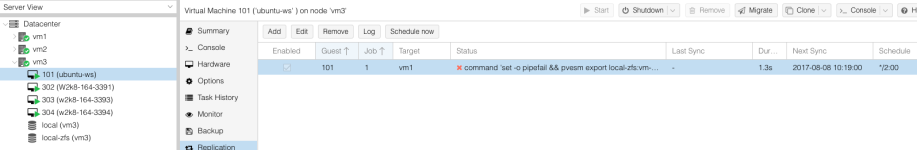

Virtual Machine 101 ('ubuntu-ws' ) on node 'vm3'

2017-08-08 09:37:00 101-1: start replication job

2017-08-08 09:37:00 101-1: guest => VM 101, running => 0

2017-08-08 09:37:00 101-1: volumes => local-zfs:vm-101-disk-2

2017-08-08 09:37:01 101-1: create snapshot '__replicate_101-1_1502199420__' on local-zfs:vm-101-disk-2

2017-08-08 09:37:01 101-1: full sync 'local-zfs:vm-101-disk-2' (__replicate_101-1_1502199420__)

2017-08-08 09:37:02 101-1: delete previous replication snapshot '__replicate_101-1_1502199420__' on local-zfs:vm-101-disk-2

2017-08-08 09:37:02 101-1: end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-101-disk-2 zfs - -with-snapshots 1 -snapshot __replicate_101-1_1502199420__ | /usr/bin/ssh -o 'BatchMode=yes' -o 'HostKeyAlias=vm1' root@172.21.82.21 -- pvesm import local-zfs:vm-101-disk-2 zfs - -with-snapshots 1' failed: exit code 255

============================================

This VM (101) is the only Linux VM and it is only used for testing.

I originally tried with the VM on; the error above is with the VM off.

I have also tried a manual migration (VM off, "online" not checked):

=================

Requesting HA migration for VM 101 to node vm1

TASK OK

=================

But it does not migrate.

This is the log:

==============================

task started by HA resource agent

2017-08-08 09:44:53 starting migration of VM 101 to node 'vm1' (172.21.82.21)

2017-08-08 09:44:53 found local disk 'local-zfs:vm-101-disk-2' (in current VM config)

2017-08-08 09:44:53 copying disk images

2017-08-08 09:44:53 start replication job

2017-08-08 09:44:53 guest => VM 101, running => 0

2017-08-08 09:44:53 volumes => local-zfs:vm-101-disk-2

2017-08-08 09:44:54 create snapshot '__replicate_101-1_1502199893__' on local-zfs:vm-101-disk-2

2017-08-08 09:44:54 full sync 'local-zfs:vm-101-disk-2' (__replicate_101-1_1502199893__)

send from @ to rpool/data/vm-101-disk-2@__replicate_101-0_1502164859__ estimated size is 7.34G

send from @__replicate_101-0_1502164859__ to rpool/data/vm-101-disk-2@__replicate_101-1_1502199893__ estimated size is 1.48M

total estimated size is 7.34G

TIME SENT SNAPSHOT

rpool/data/vm-101-disk-2 name rpool/data/vm-101-disk-2 -

volume 'rpool/data/vm-101-disk-2' already exists

command 'zfs send -Rpv -- rpool/data/vm-101-disk-2@__replicate_101-1_1502199893__' failed: got signal 13

send/receive failed, cleaning up snapshot(s)..

2017-08-08 09:44:55 delete previous replication snapshot '__replicate_101-1_1502199893__' on local-zfs:vm-101-disk-2

2017-08-08 09:44:55 end replication job with error: command 'set -o pipefail && pvesm export local-zfs:vm-101-disk-2 zfs - -with-snapshots 1 -snapshot __replicate_101-1_1502199893__ | /usr/bin/ssh -o 'BatchMode=yes' -o 'HostKeyAlias=vm1' root@172.21.82.21 -- pvesm import local-zfs:vm-101-disk-2 zfs - -with-snapshots 1' failed: exit code 255

2017-08-08 09:44:55 ERROR: Failed to sync data - command 'set -o pipefail && pvesm export local-zfs:vm-101-disk-2 zfs - -with-snapshots 1 -snapshot __replicate_101-1_1502199893__ | /usr/bin/ssh -o 'BatchMode=yes' -o 'HostKeyAlias=vm1' root@172.21.82.21 -- pvesm import local-zfs:vm-101-disk-2 zfs - -with-snapshots 1' failed: exit code 255

2017-08-08 09:44:55 aborting phase 1 - cleanup resources

2017-08-08 09:44:55 ERROR: migration aborted (duration 00:00:02): Failed to sync data - command 'set -o pipefail && pvesm export local-zfs:vm-101-disk-2 zfs - -with-snapshots 1 -snapshot __replicate_101-1_1502199893__ | /usr/bin/ssh -o 'BatchMode=yes' -o 'HostKeyAlias=vm1' root@172.21.82.21 -- pvesm import local-zfs:vm-101-disk-2 zfs - -with-snapshots 1' failed: exit code 255

TASK ERROR: migration aborted

====================================

I am completely at a loss here, and I am afraid that if this continues I cannot rely on Proxmox for my production environment (so far this is ALMOST all testing; I only have a couple of development machines that are really in use).
