Hello,

Recently, when I checked my server, I saw that the media wearout indicator of one of the SSDs is above 10%. I want to replace this SSD with a new one before it fails.

I searched the forum and took some notes, but before proceeding I would like to ask the experts here: did I miss anything?

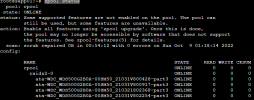

When I run `proxmox-boot-tool status`, I see that all SSDs are configured for UEFI. According to the notes in the wiki, this setup uses systemd-boot.
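As a quick cross-check of what `proxmox-boot-tool status` reports, here is a minimal sketch that relies on the Linux convention that `/sys/firmware/efi` exists only when the running system was booted via UEFI. `boot_mode` is a hypothetical helper name, and the directory argument exists only so the check can be tried against another path.

```bash
# Hypothetical helper: report how the running system was booted.
# Assumes /sys/firmware/efi is present only on UEFI boots (Linux behaviour).
boot_mode() {
  efi_dir=${1:-/sys/firmware/efi}
  if [ -d "$efi_dir" ]; then
    echo uefi
  else
    echo legacy-bios
  fi
}
```

On a UEFI-booted host, running `boot_mode` without arguments should print `uefi`, which would agree with the boot-tool output.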

The server's disk layout is as follows:

These are the steps I plan to follow:

1. Determine the zpool status

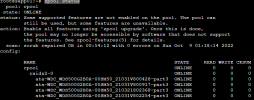

System is using raidz2 on rpool

2. To determine serial number of the ssd

And it shows that /dev/sdc is mapped to ata-WDC_WDS500G2B0A-00SM50_210321802368

3. To take the relevant ssd to offline

4. Server will be taken to offline, and ssd will be replaced.

5. Partition table creation

ata-WDC_WDS500G2B0A-00SM50_21031V800917 is healthy drive

/dev/new-device-id-part2 is the new ssd's EFI partition

6. Replace zpool

/dev/ata-WDC_WDS500G2B0A-00SM50_210321802368-part3 is the old zfs partition

/dev/new-device-id-part3 is the new zfs partition

7. Enable larger disk usage

8. Check status of zpool

These are the details which I have collected from several posts and wiki side. But before proceeding, I wished to take your advices is there anything wrong?

Thank you so much

Kind regards

Recently when I check my server, I saw that one of the ssd's media wearout indicator is above %10. And I want to change this ssd with near one before it fails.

I researched inside the forum and I took some notes. But before proceeding, I wished to ask you, to experts, did I miss something?

When I execute proxmox-boot-tool status, I saw that all ssd's are using uefi system. According to notes in wiki, this system needs to use systemd-boot.

Server hard disk layout is as below:

The steps which I will follow are;

1. To determine zpool status

Bash:

zpool status

The system is using raidz2 on rpool.
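To see every pool member with its state in one list (useful again after step 3, when the old SSD should be shown as OFFLINE), here is a minimal sketch that parses the text printed by `zpool status`. It assumes the usual config table with a `NAME STATE READ WRITE CKSUM` header that ends at a blank line; `list_vdev_states` is a hypothetical helper name. `zpool status -P` prints full device paths instead of short names.

```bash
# Hypothetical helper: print "<name> <state>" for the pool and every vdev,
# reading `zpool status` output on stdin. Assumes the standard config table
# that starts with a "NAME STATE READ WRITE CKSUM" header and ends at a blank line.
list_vdev_states() {
  awk '
    $1 == "NAME" && $2 == "STATE" { in_cfg = 1; next }  # table header
    in_cfg && NF == 0              { in_cfg = 0 }        # blank line ends the table
    in_cfg && NF >= 2              { print $1, $2 }      # name and state columns
  '
}
```

Typical use would be `zpool status -P rpool | list_vdev_states`.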

2. Determine the serial number of the SSD

```bash
ls -la /dev/disk/by-id
```

It shows that /dev/sdc is mapped to ata-WDC_WDS500G2B0A-00SM50_210321802368.
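The same mapping can be looked up from the other direction. Below is a minimal sketch that prints every entry under `/dev/disk/by-id` that resolves to a given kernel device such as `/dev/sdc`. `by_id_names` is a hypothetical helper; it relies on `readlink -f` (GNU coreutils or busybox), and the directory argument exists only so the function can be tried against a test directory.

```bash
# Hypothetical helper: print the names under a by-id directory whose symlinks
# resolve to the same device as $1 (e.g. /dev/sdc).
# Relies on `readlink -f`; $2 defaults to /dev/disk/by-id.
by_id_names() {
  target=$(readlink -f "$1")
  dir=${2:-/dev/disk/by-id}
  for link in "$dir"/*; do
    [ -L "$link" ] || continue                 # only look at symlinks
    if [ "$(readlink -f "$link")" = "$target" ]; then
      printf '%s\n' "${link##*/}"              # print the basename, e.g. ata-...
    fi
  done
}
```

`by_id_names /dev/sdc` would be expected to list the `ata-...` name, possibly together with a `wwn-...` alias. The `-partN` links are not listed because they resolve to `/dev/sdcN`, not to `/dev/sdc`.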

3. Take the affected SSD offline

```bash
zpool offline rpool /dev/disk/by-id/ata-WDC_WDS500G2B0A-00SM50_210321802368-part3
```

4. Shut down the server and replace the SSD.
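The `zpool offline` command in step 3 expects the name of the pool member, and on this layout that is the `-part3` partition (as in step 6) rather than the whole disk. Here is a minimal sketch that looks up that member from a by-id disk name by reading `zpool status` (or `zpool status -P`) output. `vdev_for_disk` is a hypothetical helper and assumes the standard config table.

```bash
# Hypothetical helper: print the pool member(s) that live on a given disk,
# reading `zpool status` (or `zpool status -P`) output on stdin.
# $1 is a by-id disk name such as ata-WDC_WDS500G2B0A-00SM50_210321802368.
vdev_for_disk() {
  awk -v disk="$1" '
    $1 == "NAME" && $2 == "STATE" { in_cfg = 1; next }  # table header
    in_cfg && NF == 0 { in_cfg = 0 }                     # blank line ends the table
    in_cfg {
      name = $1
      sub(/^.*\//, "", name)                             # drop a /dev/disk/by-id/ prefix
      if (name == disk || index(name, disk "-part") == 1)
        print $1                                          # the name exactly as zpool shows it
    }
  '
}
```

For example, piping `zpool status -P rpool` into `vdev_for_disk ata-WDC_WDS500G2B0A-00SM50_210321802368` should print the full path of the `-part3` member, which can then be passed to `zpool offline rpool`.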

5. Create the partition table on the new SSD

```bash
# Replicate the partition table of the healthy drive onto the new SSD
sgdisk /dev/disk/by-id/ata-WDC_WDS500G2B0A-00SM50_21031V800917 -R /dev/new-device-id
```

```bash
# Randomize the disk and partition GUIDs of the new SSD
sgdisk -G /dev/new-device-id
```

ata-WDC_WDS500G2B0A-00SM50_21031V800917 is a healthy drive.

```bash
# Format the new SSD's EFI system partition
proxmox-boot-tool format /dev/new-device-id-part2
```

```bash
# Initialize the new ESP so proxmox-boot-tool keeps it in sync
proxmox-boot-tool init /dev/new-device-id-part2
```

/dev/new-device-id-part2 is the new SSD's EFI partition.
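Step 5 can be rehearsed before touching the disks. Below is a minimal sketch that wraps its four commands in a function with a dry-run mode that only prints them. `run`, `prepare_new_disk` and the `DRY_RUN` variable are hypothetical names. Both arguments are assumed to be `/dev/disk/by-id/...` paths, because `<disk>-part2` only names partition 2 for by-id paths (a plain `/dev/sdX` disk would use `/dev/sdX2`).

```bash
# Hypothetical dry-run wrapper: prints the command by default;
# set DRY_RUN=0 to really execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Step 5 as one function. $1 = healthy disk, $2 = new disk, both assumed to be
# /dev/disk/by-id/... paths so that "<disk>-part2" is the ESP (partition 2).
prepare_new_disk() {
  healthy=$1
  new=$2
  run sgdisk "$healthy" -R "$new"            # copy the partition table to the new disk
  run sgdisk -G "$new"                       # randomize the new disk's GUIDs
  run proxmox-boot-tool format "$new-part2"  # format the new ESP
  run proxmox-boot-tool init "$new-part2"    # register the ESP with proxmox-boot-tool
}
```

Calling `prepare_new_disk /dev/disk/by-id/ata-WDC_WDS500G2B0A-00SM50_21031V800917 /dev/disk/by-id/new-device-id` (where `new-device-id` stands for the new SSD's by-id name) only prints the commands. Making dry-run the default means an accidental call cannot rewrite a partition table.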

6. Replace the disk in the zpool

```bash
zpool replace -f rpool /dev/disk/by-id/ata-WDC_WDS500G2B0A-00SM50_210321802368-part3 /dev/new-device-id-part3
```

Here /dev/disk/by-id/ata-WDC_WDS500G2B0A-00SM50_210321802368-part3 is the old ZFS partition and /dev/new-device-id-part3 is the new ZFS partition.
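`zpool replace` starts a resilver, and the pool only regains full raidz2 redundancy once it finishes. Below is a minimal polling sketch. It assumes that `zpool status` reports a running resilver with the phrase `resilver in progress` on its `scan:` line. The helper names are hypothetical. On OpenZFS 2.0 or newer, `zpool wait -t resilver rpool` should perform the same wait in a single command.

```bash
# Hypothetical helper: succeed while the `zpool status` text on stdin shows a
# running resilver (assumes the "resilver in progress" wording on the scan: line).
resilver_running() {
  grep -q 'resilver in progress'
}

# Poll the pool once a minute until no resilver is reported, then print a
# short health summary with `zpool status -x`.
wait_for_resilver() {
  pool=${1:-rpool}
  while zpool status "$pool" | resilver_running; do
    sleep 60
  done
  zpool status -x "$pool"
}
```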

7. Enable automatic expansion so the pool can use a larger disk

```bash
zpool set autoexpand=on rpool
```

8. Check the status of the zpool

```bash
zpool status
```

These are the details I have collected from several forum posts and the wiki. Before proceeding, I would like your advice: is there anything wrong?

Thank you so much

Kind regards
