Hi,

We have three nodes, and two OSDs were in a down/out status on PVE2 about three months ago. We replaced them using the commands below, and they came back online properly. However, we had a power issue last week and had to reboot PVE2. After the reboot, the two OSDs we replaced three months ago went down/out again. Is this the correct procedure for the replacement task?

Note: When we replaced the OSDs, we had to use a different SSD model.

1 - The faulty OSDs were down/out.

2 - Turned PVE2 off, installed the new SSDs, and turned it back on. Then ran the commands below.

3 - ceph osd destroy {id}

4 - ceph-volume lvm zap /dev/sdX

5 - ceph-volume lvm prepare --osd-id {id} --data /dev/sdX

6 - Set the OSD to Start and In in the GUI.
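
For reference, steps 3 to 5 above can be sketched as a small shell helper. This is only a dry-run sketch of what we typed: `replace_osd_plan` is a hypothetical name, the OSD id and device in the usage line are placeholders rather than our real values, and the function only prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: prints the commands from steps 3 to 5 for one OSD.
# Dry run only: the commands are echoed, nothing is executed.
replace_osd_plan() {
    osd_id="$1"   # OSD id to reuse (placeholder, e.g. 4)
    device="$2"   # new disk (placeholder, e.g. /dev/sdb)
    if [ -z "$osd_id" ] || [ -z "$device" ]; then
        echo "usage: replace_osd_plan <osd-id> <device>" >&2
        return 1
    fi
    echo "ceph osd destroy $osd_id"                                 # step 3
    echo "ceph-volume lvm zap $device"                              # step 4
    echo "ceph-volume lvm prepare --osd-id $osd_id --data $device"  # step 5
}
```

Calling `replace_osd_plan 4 /dev/sdb` prints the three commands in order so they can be reviewed before anything is run on the node.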

Now, two new OSDs have failed in PVE3. Should we use exactly these commands to replace the OSDs?

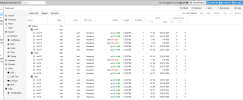

Current OSD status

PVE2 Disk Status

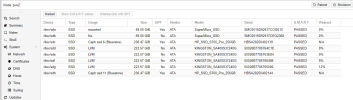

PVE3 Disk Status
