Proxmox hangs (high CPU load resulting in a required reboot of server) when trying to delete a VM via GUI if the hard disk of the VM is a LVM disk. Note I have tried several methods to ‘delete’ the VM, all roads lead to a hang on the ‘lvremove’ command which then hangs up udev and ALL disks on the server become slow resulting in a required reboot and/or run-away CPU load. In the images attached I opt to remove the VM config file by hand, confirm qm is not running the VM and use "lvremove -f" command by hand (hoping the -f will force it to work... it did not). I have 3 servers in a cluster and this happens on at least 2 (have not tested server 3).

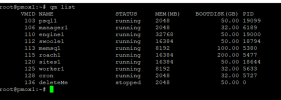

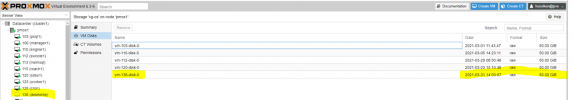

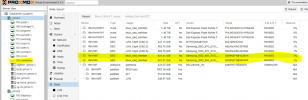

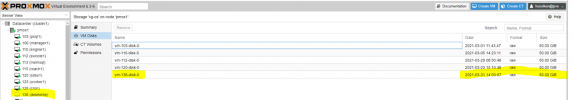

see qm1.png pmox1-3.png t2-3.png

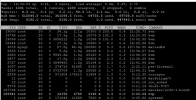

After running "qm list" and confirming 136 is no longer running I then do:

"lvremove -f /dev/vg-os/vm-136-disk-0"

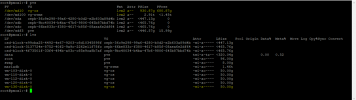

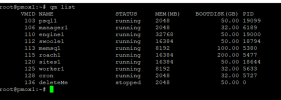

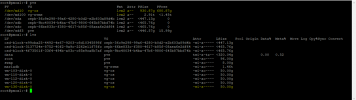

This hangs and NEVER returns. Opening other terminals I can run "lvdisplay" this hangs and takes minutes to finish. Running top:

see cpuLoad.php

Notice the kvm processes - they all will eventually shoot over 200%

All VM’s on the box become un responsive.

Dmesg (parts so message is not to long)

[31180.696577] INFO: task kvm:18794 blocked for more than 120 seconds.

[31180.696606] Tainted: P O 5.4.106-1-pve #1

[31180.696633] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696668] kvm D 0 18794 1 0x00000000

[31180.696669] Call Trace:

[31180.696672] __schedule+0x2e6/0x700

[31180.696673] ? wbt_exit+0x30/0x30

[31180.696675] ? __wbt_done+0x40/0x40

[31180.696676] schedule+0x33/0xa0

[31180.696678] io_schedule+0x16/0x40

[31180.696680] rq_qos_wait+0xf7/0x170

[31180.696682] ? sysv68_partition+0x2d0/0x2d0

[31180.696684] ? wbt_exit+0x30/0x30

[31180.696685] wbt_wait+0x9f/0xe0

[31180.696687] __rq_qos_throttle+0x28/0x40

[31180.696690] blk_mq_make_request+0x11f/0x5b0

[31180.696693] generic_make_request+0xcf/0x310

[31180.696696] flush_bio_list+0x4e/0xf0 [raid1]

[31180.696698] raid1_unplug+0xc8/0xf0 [raid1]

[31180.696700] blk_flush_plug_list+0xb3/0x110

[31180.696703] blk_finish_plug+0x26/0x40

[31180.696705] blkdev_write_iter+0xbe/0x140

[31180.696708] aio_write+0xf1/0x190

[31180.696710] ? poll_select_finish+0x210/0x210

[31180.696712] ? poll_select_finish+0x210/0x210

[31180.696714] ? _cond_resched+0x19/0x30

[31180.696716] io_submit_one+0x77c/0xb20

[31180.696719] __x64_sys_io_submit+0xa9/0x190

[31180.696721] ? __x64_sys_io_submit+0xa9/0x190

[31180.696724] ? fput+0x13/0x20

[31180.696726] ? ksys_read+0xa3/0xe0

[31180.696729] do_syscall_64+0x57/0x190

[31180.696731] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.696732] RIP: 0033:0x7fcdccc0ff59

[31180.696735] Code: 00 c3 66 2e 0f 1f 84 00 00 00 00 00 0f 1f 44 00 00 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d 07 6f 0c 00 f7 d8 64 89 01 48

[31180.696736] RSP: 002b:00007fffcd884878 EFLAGS: 00000246 ORIG_RAX: 00000000000000d1

[31180.696737] RAX: ffffffffffffffda RBX: 00007fcdc207ce20 RCX: 00007fcdccc0ff59

[31180.696738] RDX: 00007fffcd8848c0 RSI: 0000000000000001 RDI: 00007fcdc201c000

[31180.696739] RBP: 00007fcdc201c000 R08: 00007fcdc207f310 R09: 0000000000000001

[31180.696739] R10: 0000000000000000 R11: 0000000000000246 R12: 0000000000000001

[31180.696740] R13: 0000000000000005 R14: 00007fffcd8848c0 R15: 0000000000000001

[31180.696744] INFO: task kvm:19000 blocked for more than 120 seconds.

[31180.696774] Tainted: P O 5.4.106-1-pve #1

[31180.696800] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696833] kvm D 0 19000 1 0x00004000

[31180.696834] Call Trace:

[31180.696835] __schedule+0x2e6/0x700

[31180.696836] schedule+0x33/0xa0

[31180.696837] md_super_wait+0x74/0xb0

[31180.696838] ? wait_woken+0x80/0x80

[31180.696839] md_bitmap_wait_writes+0x9b/0xb0

[31180.696840] md_bitmap_unplug+0xfd/0x120

[31180.696841] flush_bio_list+0x23/0xf0 [raid1]

[31180.696842] raid1_unplug+0xc8/0xf0 [raid1]

[31180.696843] blk_flush_plug_list+0xb3/0x110

[31180.696844] blk_finish_plug+0x26/0x40

[31180.696845] __x64_sys_io_submit+0x122/0x190

[31180.696846] do_syscall_64+0x57/0x190

[31180.696847] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.696847] RIP: 0033:0x7f8618d19f59

[31180.696849] Code: Bad RIP value.

[31180.696849] RSP: 002b:00007ffde2acb988 EFLAGS: 00000246 ORIG_RAX: 00000000000000d1

[31180.696850] RAX: ffffffffffffffda RBX: 00007f860e186e20 RCX: 00007f8618d19f59

[31180.696850] RDX: 00007ffde2acb9d0 RSI: 000000000000001e RDI: 00007f860e126000

[31180.696850] RBP: 00007f860e126000 R08: 00007f860e189310 R09: 000000000000001e

[31180.696851] R10: 0000000000000000 R11: 0000000000000246 R12: 000000000000001e

[31180.696851] R13: 0000000000000005 R14: 00007ffde2acb9d0 R15: 0000000000000001

[31180.696858] INFO: task kvm:19099 blocked for more than 120 seconds.

[31180.696872] Tainted: P O 5.4.106-1-pve #1

[31180.696885] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696902] kvm D 0 19099 1 0x00000000

.......

[31180.696953] INFO: task lvremove:188475 blocked for more than 120 seconds. ////<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

[31180.696968] Tainted: P O 5.4.106-1-pve #1

[31180.696982] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696999] lvremove D 0 188475 21657 0x00000000

[31180.697000] Call Trace:

[31180.697002] __schedule+0x2e6/0x700

[31180.697003] schedule+0x33/0xa0

[31180.697004] schedule_timeout+0x205/0x330

[31180.697005] ? blk_flush_plug_list+0xb3/0x110

[31180.697006] io_schedule_timeout+0x1e/0x50

[31180.697006] wait_for_completion_io+0xb7/0x140

[31180.697009] ? wake_up_q+0x80/0x80

[31180.697009] submit_bio_wait+0x61/0x90

[31180.697010] blkdev_issue_discard+0x7a/0xd0

[31180.697012] blk_ioctl_discard+0xc4/0x110

[31180.697013] blkdev_ioctl+0x4e7/0x9e0

[31180.697014] block_ioctl+0x3d/0x50

[31180.697015] do_vfs_ioctl+0xa9/0x640

[31180.697016] ? __do_sys_newfstat+0x5f/0x70

[31180.697017] ksys_ioctl+0x67/0x90

[31180.697017] __x64_sys_ioctl+0x1a/0x20

[31180.697018] do_syscall_64+0x57/0x190

[31180.697019] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.697020] RIP: 0033:0x7fbeae0f7427

[31180.697021] Code: Bad RIP value.

[31180.697021] RSP: 002b:00007ffd4fb311d8 EFLAGS: 00000246 ORIG_RAX: 0000000000000010

[31180.697022] RAX: ffffffffffffffda RBX: 0000564696f132e3 RCX: 00007fbeae0f7427

[31180.697022] RDX: 00007ffd4fb31200 RSI: 0000000000001277 RDI: 0000000000000007

[31180.697022] RBP: 00007ffd4fb31230 R08: 0000564696f134f8 R09: 00007ffd4fb310e0

[31180.697023] R10: 0000000000000000 R11: 0000000000000246 R12: 0000564696d53ec0

[31180.697023] R13: 00007ffd4fb31c60 R14: 0000000000000000 R15: 0000000000000000

[31229.860265] sd 0:2:14:0: [sdd] tag#3306 Abort request is for SMID: 3307

[31229.860269] sd 0:2:14:0: attempting task abort! scmd(0x00000000d4c9c50b) tm_dev_handle 0xe

[31229.926556] sd 0:2:14:0: [sdd] tag#3171 BRCM Debug mfi stat 0x2d, data len requested/completed 0x20000/0x0

[31229.926600] sd 0:2:14:0: [sdd] tag#3200 BRCM Debug mfi stat 0x2d, data len requested/completed 0x6000/0x0

[31229.926623] sd 0:2:14:0: [sdd] tag#759 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926725] sd 0:2:14:0: [sdd] tag#761 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926747] sd 0:2:14:0: [sdd] tag#3262 BRCM Debug mfi stat 0x2d, data len requested/completed 0x6000/0x0

[31229.926768] sd 0:2:14:0: [sdd] tag#757 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926924] sd 0:2:14:0: [sdd] tag#755 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926976] sd 0:2:14:0: [sdd] tag#3258 BRCM Debug mfi stat 0x2d, data len requested/completed 0xa00/0x0

.....

more upon request

see qm1.png pmox1-3.png t2-3.png

After running "qm list" and confirming 136 is no longer running I then do:

"lvremove -f /dev/vg-os/vm-136-disk-0"

This hangs and NEVER returns. Opening other terminals I can run "lvdisplay" this hangs and takes minutes to finish. Running top:

see cpuLoad.php

Notice the kvm processes - they all will eventually shoot over 200%

All VM’s on the box become un responsive.

Dmesg (parts so message is not to long)

[31180.696577] INFO: task kvm:18794 blocked for more than 120 seconds.

[31180.696606] Tainted: P O 5.4.106-1-pve #1

[31180.696633] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696668] kvm D 0 18794 1 0x00000000

[31180.696669] Call Trace:

[31180.696672] __schedule+0x2e6/0x700

[31180.696673] ? wbt_exit+0x30/0x30

[31180.696675] ? __wbt_done+0x40/0x40

[31180.696676] schedule+0x33/0xa0

[31180.696678] io_schedule+0x16/0x40

[31180.696680] rq_qos_wait+0xf7/0x170

[31180.696682] ? sysv68_partition+0x2d0/0x2d0

[31180.696684] ? wbt_exit+0x30/0x30

[31180.696685] wbt_wait+0x9f/0xe0

[31180.696687] __rq_qos_throttle+0x28/0x40

[31180.696690] blk_mq_make_request+0x11f/0x5b0

[31180.696693] generic_make_request+0xcf/0x310

[31180.696696] flush_bio_list+0x4e/0xf0 [raid1]

[31180.696698] raid1_unplug+0xc8/0xf0 [raid1]

[31180.696700] blk_flush_plug_list+0xb3/0x110

[31180.696703] blk_finish_plug+0x26/0x40

[31180.696705] blkdev_write_iter+0xbe/0x140

[31180.696708] aio_write+0xf1/0x190

[31180.696710] ? poll_select_finish+0x210/0x210

[31180.696712] ? poll_select_finish+0x210/0x210

[31180.696714] ? _cond_resched+0x19/0x30

[31180.696716] io_submit_one+0x77c/0xb20

[31180.696719] __x64_sys_io_submit+0xa9/0x190

[31180.696721] ? __x64_sys_io_submit+0xa9/0x190

[31180.696724] ? fput+0x13/0x20

[31180.696726] ? ksys_read+0xa3/0xe0

[31180.696729] do_syscall_64+0x57/0x190

[31180.696731] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.696732] RIP: 0033:0x7fcdccc0ff59

[31180.696735] Code: 00 c3 66 2e 0f 1f 84 00 00 00 00 00 0f 1f 44 00 00 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d 07 6f 0c 00 f7 d8 64 89 01 48

[31180.696736] RSP: 002b:00007fffcd884878 EFLAGS: 00000246 ORIG_RAX: 00000000000000d1

[31180.696737] RAX: ffffffffffffffda RBX: 00007fcdc207ce20 RCX: 00007fcdccc0ff59

[31180.696738] RDX: 00007fffcd8848c0 RSI: 0000000000000001 RDI: 00007fcdc201c000

[31180.696739] RBP: 00007fcdc201c000 R08: 00007fcdc207f310 R09: 0000000000000001

[31180.696739] R10: 0000000000000000 R11: 0000000000000246 R12: 0000000000000001

[31180.696740] R13: 0000000000000005 R14: 00007fffcd8848c0 R15: 0000000000000001

[31180.696744] INFO: task kvm:19000 blocked for more than 120 seconds.

[31180.696774] Tainted: P O 5.4.106-1-pve #1

[31180.696800] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696833] kvm D 0 19000 1 0x00004000

[31180.696834] Call Trace:

[31180.696835] __schedule+0x2e6/0x700

[31180.696836] schedule+0x33/0xa0

[31180.696837] md_super_wait+0x74/0xb0

[31180.696838] ? wait_woken+0x80/0x80

[31180.696839] md_bitmap_wait_writes+0x9b/0xb0

[31180.696840] md_bitmap_unplug+0xfd/0x120

[31180.696841] flush_bio_list+0x23/0xf0 [raid1]

[31180.696842] raid1_unplug+0xc8/0xf0 [raid1]

[31180.696843] blk_flush_plug_list+0xb3/0x110

[31180.696844] blk_finish_plug+0x26/0x40

[31180.696845] __x64_sys_io_submit+0x122/0x190

[31180.696846] do_syscall_64+0x57/0x190

[31180.696847] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.696847] RIP: 0033:0x7f8618d19f59

[31180.696849] Code: Bad RIP value.

[31180.696849] RSP: 002b:00007ffde2acb988 EFLAGS: 00000246 ORIG_RAX: 00000000000000d1

[31180.696850] RAX: ffffffffffffffda RBX: 00007f860e186e20 RCX: 00007f8618d19f59

[31180.696850] RDX: 00007ffde2acb9d0 RSI: 000000000000001e RDI: 00007f860e126000

[31180.696850] RBP: 00007f860e126000 R08: 00007f860e189310 R09: 000000000000001e

[31180.696851] R10: 0000000000000000 R11: 0000000000000246 R12: 000000000000001e

[31180.696851] R13: 0000000000000005 R14: 00007ffde2acb9d0 R15: 0000000000000001

[31180.696858] INFO: task kvm:19099 blocked for more than 120 seconds.

[31180.696872] Tainted: P O 5.4.106-1-pve #1

[31180.696885] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696902] kvm D 0 19099 1 0x00000000

.......

[31180.696953] INFO: task lvremove:188475 blocked for more than 120 seconds. ////<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

[31180.696968] Tainted: P O 5.4.106-1-pve #1

[31180.696982] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[31180.696999] lvremove D 0 188475 21657 0x00000000

[31180.697000] Call Trace:

[31180.697002] __schedule+0x2e6/0x700

[31180.697003] schedule+0x33/0xa0

[31180.697004] schedule_timeout+0x205/0x330

[31180.697005] ? blk_flush_plug_list+0xb3/0x110

[31180.697006] io_schedule_timeout+0x1e/0x50

[31180.697006] wait_for_completion_io+0xb7/0x140

[31180.697009] ? wake_up_q+0x80/0x80

[31180.697009] submit_bio_wait+0x61/0x90

[31180.697010] blkdev_issue_discard+0x7a/0xd0

[31180.697012] blk_ioctl_discard+0xc4/0x110

[31180.697013] blkdev_ioctl+0x4e7/0x9e0

[31180.697014] block_ioctl+0x3d/0x50

[31180.697015] do_vfs_ioctl+0xa9/0x640

[31180.697016] ? __do_sys_newfstat+0x5f/0x70

[31180.697017] ksys_ioctl+0x67/0x90

[31180.697017] __x64_sys_ioctl+0x1a/0x20

[31180.697018] do_syscall_64+0x57/0x190

[31180.697019] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[31180.697020] RIP: 0033:0x7fbeae0f7427

[31180.697021] Code: Bad RIP value.

[31180.697021] RSP: 002b:00007ffd4fb311d8 EFLAGS: 00000246 ORIG_RAX: 0000000000000010

[31180.697022] RAX: ffffffffffffffda RBX: 0000564696f132e3 RCX: 00007fbeae0f7427

[31180.697022] RDX: 00007ffd4fb31200 RSI: 0000000000001277 RDI: 0000000000000007

[31180.697022] RBP: 00007ffd4fb31230 R08: 0000564696f134f8 R09: 00007ffd4fb310e0

[31180.697023] R10: 0000000000000000 R11: 0000000000000246 R12: 0000564696d53ec0

[31180.697023] R13: 00007ffd4fb31c60 R14: 0000000000000000 R15: 0000000000000000

[31229.860265] sd 0:2:14:0: [sdd] tag#3306 Abort request is for SMID: 3307

[31229.860269] sd 0:2:14:0: attempting task abort! scmd(0x00000000d4c9c50b) tm_dev_handle 0xe

[31229.926556] sd 0:2:14:0: [sdd] tag#3171 BRCM Debug mfi stat 0x2d, data len requested/completed 0x20000/0x0

[31229.926600] sd 0:2:14:0: [sdd] tag#3200 BRCM Debug mfi stat 0x2d, data len requested/completed 0x6000/0x0

[31229.926623] sd 0:2:14:0: [sdd] tag#759 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926725] sd 0:2:14:0: [sdd] tag#761 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926747] sd 0:2:14:0: [sdd] tag#3262 BRCM Debug mfi stat 0x2d, data len requested/completed 0x6000/0x0

[31229.926768] sd 0:2:14:0: [sdd] tag#757 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926924] sd 0:2:14:0: [sdd] tag#755 BRCM Debug mfi stat 0x2d, data len requested/completed 0x1000/0x0

[31229.926976] sd 0:2:14:0: [sdd] tag#3258 BRCM Debug mfi stat 0x2d, data len requested/completed 0xa00/0x0

.....

more upon request

Last edited: