Because excludes did not work as I expected at first (see the thread ".pxarexclude not working as expected"), I aborted the first backup-manager-client backups.

However, before I did so, it had already stored a lot of chunks of VM image files and other data that was not supposed to be backed up.

When I aborted the backup, it disappeared from the datastore content list in PBS. So I thought that clicking "Start GC" on the datastore would reclaim the chunks used for it, as long as they had not been reused by one of the subsequent backups with working excludes.

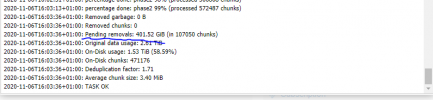

However, it does not seem to be the case:

Code:

2020-07-15T11:10:45+02:00: starting garbage collection on store usb

2020-07-15T11:10:45+02:00: Start GC phase1 (mark used chunks)

2020-07-15T11:10:47+02:00: Start GC phase2 (sweep unused chunks)

2020-07-15T11:10:47+02:00: percentage done: 1, chunk count: 931

2020-07-15T11:10:47+02:00: percentage done: 2, chunk count: 1904

2020-07-15T11:10:47+02:00: percentage done: 3, chunk count: 2824

2020-07-15T11:10:47+02:00: percentage done: 4, chunk count: 3803

[…]

2020-07-15T11:10:48+02:00: percentage done: 97, chunk count: 93784

2020-07-15T11:10:48+02:00: percentage done: 98, chunk count: 94761

2020-07-15T11:10:48+02:00: percentage done: 99, chunk count: 95736

2020-07-15T11:10:48+02:00: Removed bytes: 0

2020-07-15T11:10:48+02:00: Removed chunks: 0

2020-07-15T11:10:48+02:00: Pending removals: 67004125560 bytes (41642 chunks)

2020-07-15T11:10:48+02:00: Original data bytes: 2121239972535

2020-07-15T11:10:48+02:00: Disk bytes: 108477348693 (5 %)

2020-07-15T11:10:48+02:00: Disk chunks: 55040

2020-07-15T11:10:48+02:00: Average chunk size: 1970882

2020-07-15T11:10:48+02:00: TASK OK

However, I see `Pending removals: 67004125560 bytes (41642 chunks)` in there. How can I make it remove those chunks?

As the partial backup did not show up, I suspect it was deleted because it was incomplete, so there are some chunks that are no longer referenced. I thought those would be deleted straight away without me having to prune something first.
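
To put the numbers in perspective, here is a minimal Python sketch that pulls the summary figures out of the task log and converts them to GiB. The helper names `parse_gc_summary` and `to_gib` are my own and not part of PBS, and the sketch assumes the log lines look exactly like the ones pasted above.

```python
import re

# Summary lines copied from the GC task log above.
GC_LOG = """\
2020-07-15T11:10:48+02:00: Removed bytes: 0
2020-07-15T11:10:48+02:00: Removed chunks: 0
2020-07-15T11:10:48+02:00: Pending removals: 67004125560 bytes (41642 chunks)
2020-07-15T11:10:48+02:00: Original data bytes: 2121239972535
2020-07-15T11:10:48+02:00: Disk bytes: 108477348693 (5 %)
2020-07-15T11:10:48+02:00: Disk chunks: 55040
2020-07-15T11:10:48+02:00: Average chunk size: 1970882
"""


def parse_gc_summary(log):
    """Map each 'label: number' line to its first integer value (hypothetical helper)."""
    summary = {}
    for line in log.splitlines():
        # Timestamp, then ': ', then a label, then ': ', then the first number.
        match = re.match(r"^\S+: ([A-Za-z ]+): (\d+)", line)
        if match:
            summary[match.group(1)] = int(match.group(2))
    return summary


def to_gib(num_bytes):
    """Convert a byte count to GiB (2**30 bytes)."""
    return num_bytes / 2**30


summary = parse_gc_summary(GC_LOG)
for label in ("Pending removals", "Disk bytes", "Original data bytes"):
    print(f"{label}: {to_gib(summary[label]):.2f} GiB")
```

By this calculation the pending removals come to roughly 62.4 GiB, which is more than half of the roughly 101 GiB the datastore currently occupies on disk, so it matters to me whether that space can be reclaimed.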