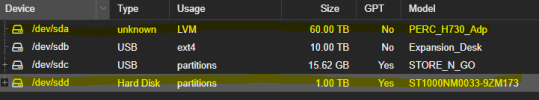

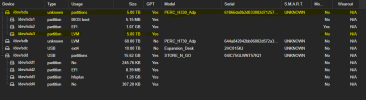

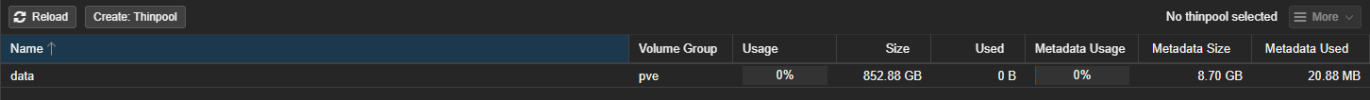

I'm new to Proxmox and Linux alike. I've been an IT professional in the Windows world for a long time, and I'm really enjoying learning Linux and Proxmox. I have a Dell T460 with a single 1 TB SATA boot drive and a PERC H730P running a RAID 6 array of eight 10 TB drives. I have six more 1 TB drives that I'd like to use for boot/local storage, mainly because I keep running out of space on my current 1 TB drive.

I would like to reinstall Proxmox on the seven 1 TB drives using ZFS (RAIDZ-1). Alternatively, I have a PERC H330 that I could use to put those drives in a RAID 5 configuration. However, if I remember correctly, when I first installed Proxmox the installer didn't see my RAID 6 array (it wasn't offered as an install target); it only showed up once I was in the web console. The issue is that I have one VM with two 14 TB drives attached and another VM with two 10 TB drives attached, and I don't have anything big enough to back those up to.

Is it possible to reinstall Proxmox to either ZFS or hardware RAID, restore the OS from backup, restore the LXCs/VMs without those large drives attached, reattach the RAID 6 storage without losing the data, and then reattach the large disks to the VMs? If so, what should I be backing up (which files and folders)?

If anyone has a better idea for achieving the above, I'm all ears. Thanks in advance.