Hi there,

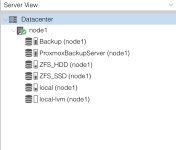

I had a working cluster with two hosts and HA, using a Raspberry Pi as a QDevice.

The boot drive of Host 1 failed (I use a USB drive as the boot drive, which I learned here on the forum is risky, and I am waiting for hardware to replace it).

Host 2 and the QDevice are still running. I reinstalled Proxmox on a new USB drive on Host 1 and gave it the same FQDN and the same IP, but I have not tried to reintegrate it into the cluster.

I was following this guide from ServeTheHome https://www.servethehome.com/9-step-calm-and-easy-proxmox-ve-boot-drive-failure-recovery/2/ and had reached the part where I could import the ZFS file systems back onto the host. Since the data drives did not malfunction, all contents should still be on them.

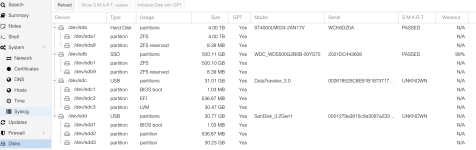

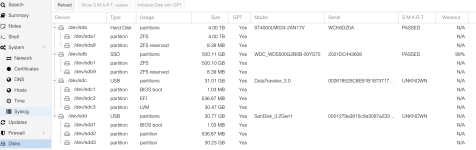

I have two pools, "ZFS_SDD" on an SSD and "ZFS_HDD" on a hard disk. The guide says the pools should show up with the command "zpool status", but it just says:

root@node1:~# zpool status
no pools available

Maybe I am missing a step?

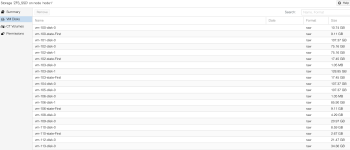

On the disks I can see the file systems.

Also, do you guys recommend following the STH guide? I didn't find anything similar to it here.

Please excuse any lack of basic knowledge, I am here to learn.

Thanks in advance!
