Hello Proxmox users,

I noticed something strange, or maybe this is expected behavior, I don't know. To keep it short:

I have Proxmox VE installed on an HPE ProLiant DL380 Gen8: 2x CPU at 2.49 GHz, hardware RAID 5 with 6x Samsung Enterprise PM893 480 GB SSDs, and 256 GB of DDR4-3200 RAM.

There are only two VMs running on it.

One is a clean Windows Server 2022 with no services installed yet. The other is Windows Server 2019 running MS SQL Server 2019 with simple databases.

Both have very similar hardware configurations in Proxmox VE, and both have their hard drives configured as SCSI.
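To make "very similar" concrete, the two VM configs could be diffed key by key, since disk options such as the SCSI controller type, cache mode or iothread can differ between VMs that otherwise look alike. A minimal sketch in Python, assuming the text of `qm config <vmid>` (or the file `/etc/pve/qemu-server/<vmid>.conf`) for each VM is pasted in. The function names and the sample values are hypothetical, not my actual configs:

```python
from typing import Dict, Optional, Tuple


def parse_qm_config(text: str) -> Dict[str, str]:
    """Parse the current (non-snapshot) section of a Proxmox VM config."""
    config: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and '#' lines (the VM description is stored as comments).
        if not line or line.startswith("#"):
            continue
        # Snapshot sections start with "[name]"; only compare the live config.
        if line.startswith("["):
            break
        # Split on the first colon only: values like "local-lvm:vm-100-disk-0" contain colons.
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()
    return config


def diff_configs(
    a: Dict[str, str], b: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {key: (value_in_a, value_in_b)} for every key whose values differ."""
    return {
        k: (a.get(k), b.get(k))
        for k in sorted(set(a) | set(b))
        if a.get(k) != b.get(k)
    }


if __name__ == "__main__":
    # Hypothetical sample configs, for illustration only.
    vm_2022 = "scsihw: virtio-scsi-single\nscsi0: local-lvm:vm-100-disk-0,iothread=1,size=100G\nmemory: 16384\n"
    vm_2019 = "scsihw: lsi\nscsi0: local-lvm:vm-101-disk-0,size=100G\nmemory: 16384\n"
    for key, (left, right) in diff_configs(parse_qm_config(vm_2022), parse_qm_config(vm_2019)).items():
        print(f"{key}: {left!r} vs {right!r}")
```

Only keys that differ are printed, so identical settings like `memory` drop out and differences in `scsihw` or the disk options on `scsi0` stand out.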

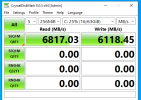

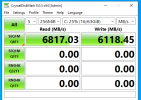

Please take a look on the screens I've attached below is there any reason these benchmarks are so different?

I've check them many times on different server loads and benchmark gives me pretty much all the time the same result.

Here is Windows 2022

And there is Windows 2019