Hi.

I have mounted a CephFS disk in Proxmox 7.3 using sshfs. The CephFS disk is physically installed on another Proxmox server in the same LAN, but not in the same cluster.

I backed up some VMs and containers to this drive so that I could restore them on the destination server for migration purposes.

One of the backups failed due to insufficient disk space, and the backup process did not seem to exit cleanly. So, on the destination host, I removed all backups (the incomplete one plus the ones I had already migrated successfully) using the rm command.

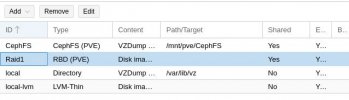

However, the disk "Raid1" on the destination server, which hosts the freshly migrated VMs and containers, reports:

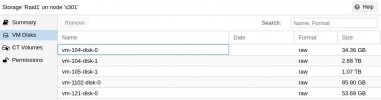

"Usage: 25.81% (2.45 TB of 9.49 TB)". This is strange, since the VMs have a combined 3.5 TB of VM disks (see the second code snippet below). I have not (yet) noticed any missing data. Instead of the VM disks, the GUI shows "rbd error: rbd: listing images failed: (2) No such file or directory (500)".


```
root@s301:~# rbd ls -l cephpool
rbd: error opening pool 'cephpool': (2) No such file or directory
rbd: listing images failed: (2) No such file or directory
```

Here is some info about the two VMs whose disks are on the storage that gives the rbd error:

```
root@s301:~# grep disk /etc/pve/qemu-server/104.conf
scsi0: local-lvm:vm-104-disk-0,size=32G
unused0: Raid1:vm-104-disk-0
virtio1: Raid1:vm-104-disk-1,size=2500G
root@s301:~# grep disk /etc/pve/qemu-server/105.conf
unused0: Raid1:vm-105-disk-0
virtio0: local-lvm:vm-105-disk-0,size=80G
virtio1: Raid1:vm-105-disk-1,size=1000G
root@s301:~#
```
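The 3.5 TB figure is the sum of the two Raid1-backed disks in the configs above (2500G + 1000G = 3500G). Below is a minimal sketch for checking that sum. It assumes every active disk line carries a `size=<N>G` value, as in these configs. The function name `sum_raid1_sizes` is hypothetical, and the sample input is just the config lines quoted above.

```shell
#!/bin/sh
# Sum the "size=<N>G" values of all disks that live on the Raid1 storage.
# Reads Proxmox VM config lines on stdin and prints the total in the same
# "G" unit the configs use.
# Assumption: sizes always use the G suffix, as in the configs above.
sum_raid1_sizes() {
  awk -F'size=' '
    # Only count disk entries on Raid1 that have a size= field;
    # the unusedN lines above carry no size and are skipped.
    /^[a-z]+[0-9]+: Raid1:/ && NF > 1 {
      sub(/G.*/, "", $2)   # "2500G" -> "2500"
      total += $2
    }
    END { printf "%dG\n", total }
  '
}

# The config lines shown above for VM 104 and VM 105.
# On the host itself one could instead run (hypothetical usage):
#   grep -h disk /etc/pve/qemu-server/104.conf /etc/pve/qemu-server/105.conf | sum_raid1_sizes
printf '%s\n' \
  'scsi0: local-lvm:vm-104-disk-0,size=32G' \
  'unused0: Raid1:vm-104-disk-0' \
  'virtio1: Raid1:vm-104-disk-1,size=2500G' \
  'unused0: Raid1:vm-105-disk-0' \
  'virtio0: local-lvm:vm-105-disk-0,size=80G' \
  'virtio1: Raid1:vm-105-disk-1,size=1000G' |
  sum_raid1_sizes
```

Run as-is, it prints `3500G`. The `local-lvm` disks are ignored because they do not match the `Raid1:` pattern.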

Could you please advise on how to proceed? How can I investigate and fix this without risking data loss?
