Hi Proxmox Community,

I had a similar question when I was running some Windows 11 VMs. I discovered that the memory ballooning option can produce misleading readings: the Proxmox GUI showed the VM's RAM as maxed out, while usage inside the Windows guest was not. If ballooning is enabled for a Windows VM and the VirtIO drivers are not installed, the GUI can show RAM fully used even when the VM is idle and has 24 GB assigned.

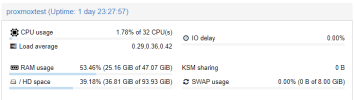

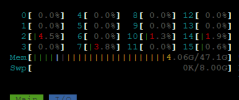

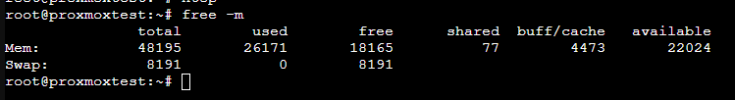

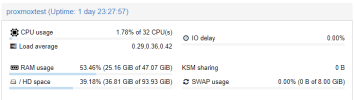

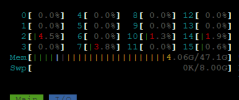

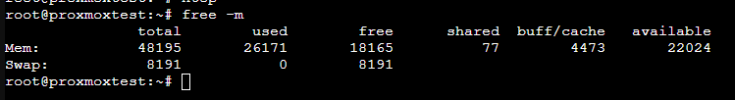

This question is about the overall RAM consumption of a node. I currently have a PowerEdge R420 with 48 GB of RAM. It has 9 VMs, none of them running, a zpool of 3 disks, and regular replication to other servers. This first node is sitting at 25/47 GB, which is 53% RAM usage with no VMs running. The documentation says to plan for ZFS using a 2 GB base plus 1 GB per TB of storage. That would be 2 + 12 = 14 GB of RAM, but I'm not sure whether this is a general guideline or a hard-coded limit. `htop` shows the system consuming 4 GB, with the orange portion being cache. `free -m` shows 26,171 MB used, which is in line with what Proxmox reports, but buff/cache is only 4,473 MB.
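To replace the 14 GB guess with a measured number, the ARC size can be read directly. The following is a minimal sketch, assuming OpenZFS on Linux exposes its counters at `/proc/spl/kstat/zfs/arcstats` in the usual `name type data` layout; the function names are made up for illustration. As far as I understand, ARC memory is not part of the kernel page cache, so it appears under "used" in `free` rather than under buff/cache.

```python
from pathlib import Path

# Usual OpenZFS-on-Linux location (assumption: the ZFS module is loaded).
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text):
    """Return {name: int} for the 'name type data' rows of arcstats."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields and a numeric value;
        # this skips the kstat header and the column-title row.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_summary_gib(text):
    """Current ARC size and its configured ceiling (c_max), in GiB."""
    stats = parse_arcstats(text)
    gib = 1024 ** 3
    return {key: stats[key] / gib for key in ("size", "c_max") if key in stats}


if __name__ == "__main__":
    if ARCSTATS.exists():
        for name, value in arc_summary_gib(ARCSTATS.read_text()).items():
            print(f"{name}: {value:.1f} GiB")
    else:
        print("arcstats not found; is the ZFS module loaded?")
```

If `size` comes out well above the rule-of-thumb figure, that alone could explain part of the gap between my estimate and what the node reports.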

Zpool RAM consumption: 14 GB

Proxmox OS: 4 GB

Cache: 4.4 GB

I'm assuming the constant caching comes from replication and disk updates. That adds up to 22.4 GB, so I'm still missing some RAM usage somewhere.
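The tally above can be written out as a quick calculation. This is only a sketch of my own arithmetic: it assumes the `free -m` figure is in MiB and treats the three estimates as GiB, and the dictionary keys are just labels I picked.

```python
# Figures taken from this post.
reported_used_mib = 26171  # "used" column from free -m

estimates_gib = {
    "zfs_rule_of_thumb": 14.0,  # 2 base + 12 for pool capacity
    "proxmox_os": 4.0,          # what htop shows for the system
    "buff_cache": 4.4,          # buff/cache column from free -m
}


def unaccounted_gib(used_mib, parts):
    """Reported usage minus the sum of the estimated parts, in GiB."""
    return used_mib / 1024 - sum(parts.values())


if __name__ == "__main__":
    total = sum(estimates_gib.values())
    gap = unaccounted_gib(reported_used_mib, estimates_gib)
    print(f"estimated: {total:.1f} GiB, unaccounted: {gap:.1f} GiB")
```

With these numbers the gap is roughly 3.2 GiB, which is the part I can't explain.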

Overall, I'm trying to understand why this is consuming so much RAM. I intend to build some servers in the future and want to spec them appropriately, but it feels like I'd need 200+ GB of RAM just to run a couple of small VMs.

If this is the norm, does anyone know how much additional RAM I should plan for per GB of replicated data?
