Hi everybody,

I have a set of 4 ssd drives configured in raidz1.

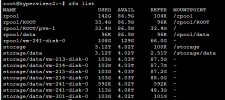

here is the config:

```
NAME                            USED  AVAIL  REFER  MOUNTPOINT
storage                        4.75T   411G   140K  /storage
storage/data                   4.75T   411G  1.44T  /storage/data
storage/data/subvol-100-disk-0 2.38T   411G  1.93T  /storage/data/subvol-100-disk-0
storage/data/subvol-171-disk-0  952G   411G   887G  /storage/data/subvol-171-disk-0
```
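As a rough check of how close the pool is to full, here is a minimal Python sketch that reads the USED and AVAIL figures from the `storage` row above. It assumes the size suffixes are binary (1K = 1024 bytes) and does not account for space ZFS reserves internally; `parse_size` and `fill_ratio` are hypothetical helpers for illustration, not part of any ZFS tool.

```python
# Approximate pool fill level from the `zfs list` figures above.
# Assumption: size suffixes are binary (1K = 1024 bytes).

UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}


def parse_size(text: str) -> float:
    """Convert a zfs-style size such as '4.75T' or '411G' into bytes."""
    text = text.strip().upper()
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


def fill_ratio(used: str, avail: str) -> float:
    """Fraction of space in use: USED / (USED + AVAIL)."""
    u, a = parse_size(used), parse_size(avail)
    return u / (u + a)


if __name__ == "__main__":
    # Values copied from the 'storage' row of the listing.
    print(f"storage is ~{fill_ratio('4.75T', '411G'):.1%} full")
    # prints: storage is ~92.2% full
```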

As we are running out of space, I thought I could add another drive to the raidz, but it looks like that is not the right thing to do.

I am thinking of taking one disk off the raidz1 and creating a new mirror pool with an additional disk, as we only have one disk slot remaining on the server.

What would be the best solution? What are your suggestions?
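To put rough numbers on the layouts mentioned above, the sketch below compares approximate usable space. It assumes all disks have the same size, ignores RAIDZ padding and metadata overhead, and, for the raidz1 + mirror case, assumes the three remaining disks are rebuilt into a new 3-disk raidz1 vdev. `DISK_SIZE_TB` is a hypothetical placeholder, since the post does not state the SSD size.

```python
# Rough usable-capacity comparison for the layouts discussed above.
# Assumptions (hypothetical): equal-size disks; ZFS padding, metadata and
# reserved space are ignored, so real numbers will be somewhat lower.


def raidz_usable(n_disks: int, parity: int, disk_size: float) -> float:
    """Approximate usable space of one RAIDZ vdev: data disks x disk size."""
    if n_disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity levels")
    return (n_disks - parity) * disk_size


def mirror_usable(disk_size: float) -> float:
    """A two-way mirror stores one disk's worth of data."""
    return disk_size


def compare_layouts(disk_size: float) -> dict[str, float]:
    return {
        "4-disk raidz1 (current)": raidz_usable(4, 1, disk_size),
        "5-disk raidz1": raidz_usable(5, 1, disk_size),
        # Assumes the raidz1 is recreated with 3 disks, not left degraded.
        "3-disk raidz1 + 2-disk mirror": raidz_usable(3, 1, disk_size)
        + mirror_usable(disk_size),
    }


if __name__ == "__main__":
    DISK_SIZE_TB = 2.0  # placeholder; the actual SSD size is not given
    for name, usable in compare_layouts(DISK_SIZE_TB).items():
        print(f"{name}: ~{usable:.1f} TB usable")
```

Under these assumptions, the raidz1 + mirror split ends up with the same usable space as the current 4-disk raidz1, while a 5-disk raidz1 adds one disk's worth.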
