Because other posts online state that RAID1 on ZFS requires gobs of system memory, I plan to put my VMs on a RAID1 LVM setup instead. I would then mount this RAID1 LV and add it as a directory in datacenter storage. Is this an acceptable method of running VMs in a production environment?

----

Testing PVE under VirtualBox:

I created a RAID1 LV with a size of 5 GB (PVs: 2 disks of 20 GB each, so 5 GB used and 15 GB unused on each disk). I then created an ext4 filesystem on the LV.

The method I used to add the LV as a directory in datacenter storage (I am not sure if the following is correct but it seems to work):

- kpartx -a /dev/vg/lv (I believe this step maps the LV to /dev/mapper/lv)

- mount /dev/mapper/lv /mnt/lv

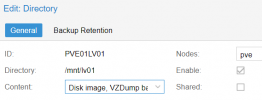

- I then went to datacenter storage, added /mnt/lv as a directory, and selected all content types
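For reference, the whole setup can be sketched as commands. This is a rough reconstruction, not exactly what I typed: the device names `/dev/sdb` and `/dev/sdc` and the storage ID `lvdir` are assumptions, and the sketch mounts `/dev/vg/lv` directly instead of going through the `kpartx` step above. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 (and run as root) to actually execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

raid1_dir_plan() {
    # /dev/sdb and /dev/sdc are assumed names for the two 20 GB disks
    run pvcreate /dev/sdb /dev/sdc
    run vgcreate vg /dev/sdb /dev/sdc
    # 5 GB LV mirrored across both PVs (one extra copy)
    run lvcreate --type raid1 -m 1 -L 5G -n lv vg
    run mkfs.ext4 /dev/vg/lv
    run mkdir -p /mnt/lv
    # Mounts the LV directly; the kpartx step from the post is skipped here
    run mount /dev/vg/lv /mnt/lv
    # "lvdir" is a made-up storage ID for the directory storage
    run pvesm add dir lvdir --path /mnt/lv \
        --content images,iso,vztmpl,backup,rootdir,snippets
}

raid1_dir_plan
```

The `pvesm add dir` line is meant to be the CLI counterpart of adding the directory in the GUI; the content list mirrors "all content types" and may differ between PVE versions.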

----

Instead of reporting the directory as the 5GiB LV that it is based on, PVE reports it as 18.4GiB (the entire VG, including unallocated space). Oddly, when I first added the directory it correctly showed 5GiB; after a PVE reboot it changed to 18.4GiB.
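One thing that can be checked after a reboot is whether /mnt/lv is still a mount of its own or just a plain directory on some other filesystem. I have not confirmed that this explains the size change; the sketch below only reports what backs a given path. The helper names are made up for illustration, and it relies only on `df -P` and `awk`.

```shell
#!/bin/sh
# Device (or filesystem source) that backs the given path
fs_source() {
    df -P "$1" | awk 'NR==2 {print $1}'
}

# Mount point of the filesystem that contains the given path
# (assumes the mount point has no spaces in its name)
fs_mountpoint() {
    df -P "$1" | awk 'NR==2 {print $6}'
}

check_dir_storage() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "$dir does not exist"
        return 0
    fi
    if [ "$(fs_mountpoint "$dir")" = "$dir" ]; then
        echo "$dir is its own mount, backed by $(fs_source "$dir")"
    else
        echo "$dir is not a separate mount; it lives on $(fs_source "$dir") mounted at $(fs_mountpoint "$dir")"
    fi
}

# Defaults to the directory used in this post
check_dir_storage "${1:-/mnt/lv}"
```

If the second message shows up after a reboot, the size PVE displays would be that of the containing filesystem rather than the 5GiB LV.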

Is this expected behavior?
