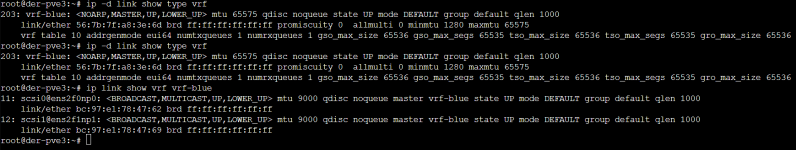

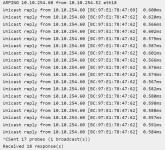

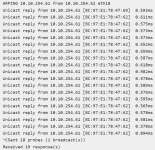

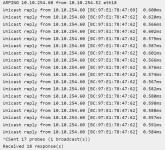

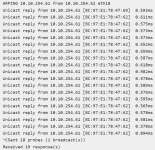

Spoke with Pure; they ran an `arping` from the array. On the array side, there doesn't appear to be any flapping after the initial ping.

Going to ask support whether there is any logic on the array that prevents it from flapping.

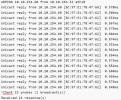

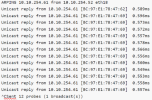

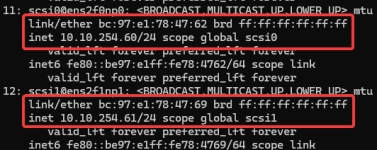

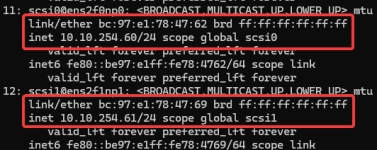

It looks like an `arping` against either interface returns the MAC of the first interface.
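To check this without eyeballing screenshots, here is a rough Python sketch that parses saved `arping` output and reports which MACs answered for which IPs. It assumes the iputils-style reply line format (`Unicast reply from <ip> [<mac>]`); the addresses in `SAMPLE` are made-up placeholders, not values from the array, and the function names are my own.

```python
import re
from collections import defaultdict

# Assumes iputils-style reply lines, e.g.
# "Unicast reply from 192.0.2.10 [AA:BB:CC:DD:EE:01]  0.71ms"
REPLY_RE = re.compile(r"reply from (\S+) \[([0-9A-Fa-f:]{17})\]")


def macs_by_ip(arping_output):
    """Map each replying IP to the set of MACs that answered for it."""
    seen = defaultdict(set)
    for line in arping_output.splitlines():
        match = REPLY_RE.search(line)
        if match:
            seen[match.group(1)].add(match.group(2).lower())
    return dict(seen)


def summarize(seen):
    """Return (IPs answered by more than one MAC, MACs answering for more than one IP)."""
    flapping = sorted(ip for ip, macs in seen.items() if len(macs) > 1)
    owners = defaultdict(set)
    for ip, macs in seen.items():
        for mac in macs:
            owners[mac].add(ip)
    shared = {mac: sorted(ips) for mac, ips in owners.items() if len(ips) > 1}
    return flapping, shared


# Hypothetical sample: two interface IPs, both answered by the same MAC.
SAMPLE = """\
ARPING 192.0.2.10 from 192.0.2.1 eth0
Unicast reply from 192.0.2.10 [AA:BB:CC:DD:EE:01]  0.71ms
Unicast reply from 192.0.2.10 [AA:BB:CC:DD:EE:01]  0.69ms
ARPING 192.0.2.11 from 192.0.2.1 eth0
Unicast reply from 192.0.2.11 [AA:BB:CC:DD:EE:01]  0.74ms
"""

if __name__ == "__main__":
    flapping, shared = summarize(macs_by_ip(SAMPLE))
    print("IPs with more than one MAC (flapping):", flapping)
    print("MACs answering for more than one IP:", shared)
```

An IP listed under "flapping" would mean different MACs answered for the same address across replies; a MAC listed under "shared" matches what I'm seeing here, where both interface IPs come back with the first interface's MAC.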
