I just set up my first ever VM, a test Ubuntu Server LTS install.

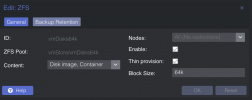

The underlying storage is a ZFS mirror of two SATA SSDs.

I enabled SSD Emulation to try to force the VM to recognize the attached virtual disk as an SSD.
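For anyone checking the same thing: the guest kernel exposes whether it treats a disk as rotational in sysfs, at `/sys/block/<dev>/queue/rotational` (`0` for non-rotational, `1` for rotational). Here is a minimal Python sketch of that check. It assumes a Linux guest, and the device name `sda` in the usage note is hypothetical, so use whatever `lsblk` lists for the VM's disk.

```python
from pathlib import Path


def is_non_rotational(device: str, sysfs_root: str = "/sys/block") -> bool:
    """Return True if the kernel reports `device` as non-rotational (SSD-like).

    Reads <sysfs_root>/<device>/queue/rotational, which contains "0" for
    devices the kernel treats as non-rotational and "1" for rotational ones.
    """
    flag = Path(sysfs_root, device, "queue", "rotational").read_text().strip()
    return flag == "0"
```

Inside the VM, `is_non_rotational("sda")` returning `True` would suggest the kernel treats the disk as SSD-like, whatever `lshw` prints.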

tl;dr: I can't enable TRIM inside the VM, and I'm not sure I've set up the virtual OS disk so that it's treated as an SSD. Is my setup right?

My guess is that this has something to do with LU thin provisioning being on (my VM storage has sparse enabled), but I'm not sure.
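Whatever the cause turns out to be, the guest kernel's view of discard support can be checked directly. It exposes per-device discard limits under `/sys/block/<dev>/queue/`, the same numbers `lsblk --discard` shows as DISC-GRAN and DISC-MAX, and a `discard_max_bytes` of 0 means the kernel sees no discard support on that disk. Here is a minimal Python sketch, assuming a Linux guest. The device name `sda` in the usage note is hypothetical.

```python
from pathlib import Path


def discard_limits(device: str, sysfs_root: str = "/sys/block") -> tuple[int, int]:
    """Return (discard_granularity, discard_max_bytes) in bytes for `device`.

    These are the values `lsblk --discard` prints as DISC-GRAN and DISC-MAX.
    """
    queue = Path(sysfs_root, device, "queue")
    granularity = int((queue / "discard_granularity").read_text())
    max_bytes = int((queue / "discard_max_bytes").read_text())
    return granularity, max_bytes


def supports_discard(device: str, sysfs_root: str = "/sys/block") -> bool:
    """True if the kernel advertises discard (TRIM/UNMAP) support for `device`.

    A discard_max_bytes of 0 means no discard support is advertised.
    """
    _, max_bytes = discard_limits(device, sysfs_root)
    return max_bytes > 0
```

Running `supports_discard("sda")` in the VM gives a yes/no answer that doesn't depend on how `hdparm` or `smartctl` decode the device.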

I thought I'd done okay, but the guide tells me to be sure to enable TRIM support, and when I went to do that, I realized something's a bit off:

- `lshw` thinks the disk is a 5400 RPM HDD.

- `smartctl` sees an SSD with a 512-byte block size (my SSDs are set to `ashift=13`, and Proxmox's dataset for this VM disk has `volblocksize=64k`) and reports "LU is thin provisioned." Vendor is QEMU and revision is 2.5+.

- `hdparm -I` sees a non-removable ATA device with 512-byte sectors, no TRIM or SMART support, and 0 for logical cylinders, heads, and sectors per track.
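For scale, the block sizes in the `smartctl` line nest evenly. `ashift` is the base-2 logarithm of the vdev's minimum allocation size, so `ashift=13` means 8 KiB. Here is a quick Python sketch of the arithmetic. The 512-byte figure is the sector size the guest tools report, and the helper names are just for illustration.

```python
def ashift_to_bytes(ashift: int) -> int:
    """ZFS ashift is log2 of the vdev's minimum allocation size."""
    return 1 << ashift


def units_per_block(block_bytes: int, unit_bytes: int) -> int:
    """How many `unit_bytes` pieces fit exactly into `block_bytes`."""
    if block_bytes % unit_bytes:
        raise ValueError(f"{block_bytes} is not a multiple of {unit_bytes}")
    return block_bytes // unit_bytes


ALLOC_BYTES = ashift_to_bytes(13)  # ashift=13 on the pool's SSDs
VOLBLOCK_BYTES = 64 * 1024         # volblocksize=64k on the VM disk
GUEST_SECTOR_BYTES = 512           # sector size smartctl/hdparm report in the guest

print(ALLOC_BYTES)                                          # 8192
print(units_per_block(VOLBLOCK_BYTES, ALLOC_BYTES))         # 8
print(units_per_block(VOLBLOCK_BYTES, GUEST_SECTOR_BYTES))  # 128
print(units_per_block(ALLOC_BYTES, GUEST_SECTOR_BYTES))     # 16
```

So one 64 KiB volblock covers 8 ashift-sized allocations, or 128 of the 512-byte sectors the guest sees.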