I'm wondering where the UI gets its information from.

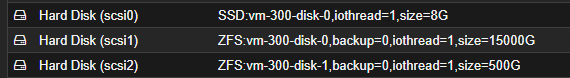

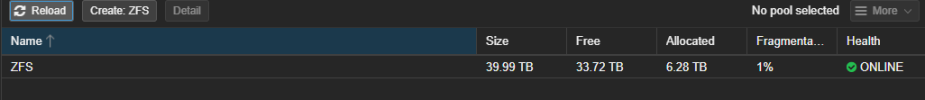

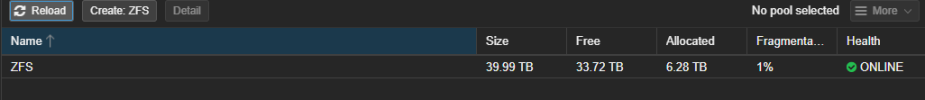

I ask because the pool shows me this:

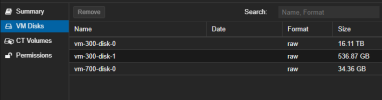

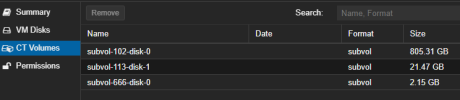

but the UI shows this:

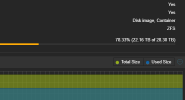

What could be the cause? Can the UI somehow be forced to refresh so that it reflects reality?

The pool output as text:

    root@ragnar:~# zpool list
    NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    ZFS   36.4T  5.72T  30.7T        -         -     1%    15%  1.00x    ONLINE  -
