Hi,

I'm having a bit of a problem since I set up my 2 node cluster.

THe issue happened first with 6.4, then I upgraded to 7.0 both node, no problem with the upgrade but the issue is still there.

I have a main node with a couple of VM and I added a second node a couple of week ago.

The 2nd node is not always ON, I have a cron that set corum vote to 1 when the second node is offline.

Since I did that, from time to time qmeventd shutdown my VM and I can't figure out why.

I have VM 100, 101, 102, 103, and 105 running on this node. As you can see in the log below all of them are being shutdown by qmeventd

This is not happening every day. I had the issue twice already. But every time this is happening around 3/4am.

I don't have anything scheduled on the node, no cron no reboot no backup.

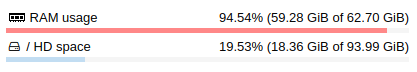

I have checked dmesg, and I don't have out of memory logs. In the graph, I have about 1 fourth of the total ram available on the host before the issue happened.

I have a lot of these:

I don't know if this is related.

I'll set up a cron to collect syslog and dmesg during that time.

Any ideas?

thanks

Veeh

Full Syslog for that time frame:

I'm having a bit of a problem since I set up my 2 node cluster.

THe issue happened first with 6.4, then I upgraded to 7.0 both node, no problem with the upgrade but the issue is still there.

I have a main node with a couple of VM and I added a second node a couple of week ago.

The 2nd node is not always ON, I have a cron that set corum vote to 1 when the second node is offline.

Since I did that, from time to time qmeventd shutdown my VM and I can't figure out why.

I have VM 100, 101, 102, 103, and 105 running on this node. As you can see in the log below all of them are being shutdown by qmeventd

Code:

Jul 9 03:01:15 stmx QEMU[8103]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:01:16 stmx qmeventd[1662521]: Starting cleanup for 103

Jul 9 03:01:16 stmx qmeventd[1662521]: Finished cleanup for 103

Jul 9 03:02:03 stmx QEMU[4733]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:02:04 stmx qmeventd[1664620]: Starting cleanup for 105

Jul 9 03:02:04 stmx qmeventd[1664620]: Finished cleanup for 105

Jul 9 03:03:04 stmx QEMU[4432]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:03:05 stmx qmeventd[1668332]: Starting cleanup for 102

Jul 9 03:03:05 stmx qmeventd[1668332]: Finished cleanup for 102

Jul 9 03:04:05 stmx QEMU[4183]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:04:06 stmx qmeventd[1670989]: Starting cleanup for 100

Jul 9 03:04:06 stmx qmeventd[1670989]: Finished cleanup for 100

Jul 9 03:05:04 stmx QEMU[4089]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:05:05 stmx qmeventd[1673387]: Starting cleanup for 101

Jul 9 03:05:05 stmx qmeventd[1673387]: Finished cleanup for 101This is not happening every day. I had the issue twice already. But every time this is happening around 3/4am.

I don't have anything scheduled on the node, no cron no reboot no backup.

I have checked dmesg, and I don't have out of memory logs. In the graph, I have about 1 fourth of the total ram available on the host before the issue happened.

I have a lot of these:

Code:

[ 0.052608] PM: hibernation: Registered nosave memory: [mem 0xfed20000-0xfedfffff]

[ 0.052609] PM: hibernation: Registered nosave memory: [mem 0xfee00000-0xfee00fff]

[ 0.052609] PM: hibernation: Registered nosave memory: [mem 0xfee01000-0xfeffffff]

[ 0.052610] PM: hibernation: Registered nosave memory: [mem 0xff000000-0xffffffff]I don't know if this is related.

I'll set up a cron to collect syslog and dmesg during that time.

Any ideas?

thanks

Veeh

Full Syslog for that time frame:

Code:

Jul 9 03:01:00 stmx systemd[1]: Starting Proxmox VE replication runner...

Jul 9 03:01:01 stmx systemd[1]: pvesr.service: Succeeded.

Jul 9 03:01:01 stmx systemd[1]: Finished Proxmox VE replication runner.

Jul 9 03:01:15 stmx QEMU[8103]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:01:15 stmx kernel: [46796.252312] zd272: p1 p2 p3

Jul 9 03:01:15 stmx kernel: [46796.282063] zd352: p1

Jul 9 03:01:15 stmx systemd[1]: Stopping LVM event activation on device 230:353...

Jul 9 03:01:15 stmx systemd[1]: Stopping LVM event activation on device 230:275...

Jul 9 03:01:15 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:01:15 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:01:15 stmx lvm[1662467]: pvscan[1662467] /dev/zd272p3 excluded by filters: device is rejected by filter config.

Jul 9 03:01:15 stmx lvm[1662442]: pvscan[1662442] /dev/zd352p1 excluded by filters: device is rejected by filter config.

Jul 9 03:01:15 stmx systemd[1]: lvm2-pvscan@230:275.service: Succeeded.

Jul 9 03:01:15 stmx systemd[1]: Stopped LVM event activation on device 230:275.

Jul 9 03:01:15 stmx systemd[1]: lvm2-pvscan@230:353.service: Succeeded.

Jul 9 03:01:15 stmx systemd[1]: Stopped LVM event activation on device 230:353.

Jul 9 03:01:15 stmx kernel: [46796.451319] vmbr1: port 5(tap103i0) entered disabled state

Jul 9 03:01:15 stmx systemd[1]: 103.scope: Succeeded.

Jul 9 03:01:15 stmx systemd[1]: 103.scope: Consumed 3h 57min 23.624s CPU time.

Jul 9 03:01:16 stmx qmeventd[1662521]: Starting cleanup for 103

Jul 9 03:01:16 stmx qmeventd[1662521]: Finished cleanup for 103

Jul 9 03:01:27 stmx pmxcfs[3649]: [dcdb] notice: data verification successful

Jul 9 03:01:41 stmx smartd[3346]: Device: /dev/sdd [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 66 to 60

Jul 9 03:02:00 stmx systemd[1]: Starting Proxmox VE replication runner...

Jul 9 03:02:01 stmx systemd[1]: pvesr.service: Succeeded.

Jul 9 03:02:01 stmx systemd[1]: Finished Proxmox VE replication runner.

Jul 9 03:02:03 stmx QEMU[4733]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:02:03 stmx kernel: [46844.904203] zd368: p1 p2 p3

Jul 9 03:02:03 stmx kernel: [46844.906585] zd304: p1

Jul 9 03:02:03 stmx systemd[1]: Stopping LVM event activation on device 230:305...

Jul 9 03:02:03 stmx systemd[1]: Stopping LVM event activation on device 230:371...

Jul 9 03:02:03 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:02:03 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:02:03 stmx lvm[1664551]: pvscan[1664551] /dev/zd304p1 excluded by filters: device is rejected by filter config.

Jul 9 03:02:03 stmx lvm[1664586]: pvscan[1664586] /dev/zd368p3 excluded by filters: device is rejected by filter config.

Jul 9 03:02:03 stmx systemd[1]: lvm2-pvscan@230:305.service: Succeeded.

Jul 9 03:02:03 stmx systemd[1]: Stopped LVM event activation on device 230:305.

Jul 9 03:02:03 stmx systemd[1]: lvm2-pvscan@230:371.service: Succeeded.

Jul 9 03:02:03 stmx systemd[1]: Stopped LVM event activation on device 230:371.

Jul 9 03:02:04 stmx kernel: [46845.060500] vmbr1: port 4(tap105i0) entered disabled state

Jul 9 03:02:04 stmx systemd[1]: 105.scope: Succeeded.

Jul 9 03:02:04 stmx systemd[1]: 105.scope: Consumed 14min 9.979s CPU time.

Jul 9 03:02:04 stmx qmeventd[1664620]: Starting cleanup for 105

Jul 9 03:02:04 stmx qmeventd[1664620]: Finished cleanup for 105

Jul 9 03:03:00 stmx systemd[1]: Starting Proxmox VE replication runner...

Jul 9 03:03:01 stmx systemd[1]: pvesr.service: Succeeded.

Jul 9 03:03:01 stmx systemd[1]: Finished Proxmox VE replication runner.

Jul 9 03:03:04 stmx QEMU[4432]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:03:05 stmx kernel: [46906.045003] zd384: p1 p2 p3

Jul 9 03:03:05 stmx systemd[1]: Stopping LVM event activation on device 230:387...

Jul 9 03:03:05 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:03:05 stmx lvm[1668267]: pvscan[1668267] /dev/zd384p3 excluded by filters: device is rejected by filter config.

Jul 9 03:03:05 stmx systemd[1]: lvm2-pvscan@230:387.service: Succeeded.

Jul 9 03:03:05 stmx systemd[1]: Stopped LVM event activation on device 230:387.

Jul 9 03:03:05 stmx kernel: [46906.203878] vmbr1: port 3(tap102i0) entered disabled state

Jul 9 03:03:05 stmx systemd[1]: 102.scope: Succeeded.

Jul 9 03:03:05 stmx systemd[1]: 102.scope: Consumed 15min 26.002s CPU time.

Jul 9 03:03:05 stmx qmeventd[1668332]: Starting cleanup for 102

Jul 9 03:03:05 stmx qmeventd[1668332]: Finished cleanup for 102

Jul 9 03:04:00 stmx systemd[1]: Starting Proxmox VE replication runner...

Jul 9 03:04:01 stmx systemd[1]: pvesr.service: Succeeded.

Jul 9 03:04:01 stmx systemd[1]: Finished Proxmox VE replication runner.

Jul 9 03:04:05 stmx QEMU[4183]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:04:05 stmx kernel: [46966.778875] zd240: p1 p2 p3

Jul 9 03:04:05 stmx systemd[1]: Stopping LVM event activation on device 230:243...

Jul 9 03:04:05 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:04:05 stmx lvm[1670928]: pvscan[1670928] /dev/zd240p3 excluded by filters: device is rejected by filter config.

Jul 9 03:04:05 stmx systemd[1]: lvm2-pvscan@230:243.service: Succeeded.

Jul 9 03:04:05 stmx systemd[1]: Stopped LVM event activation on device 230:243.

Jul 9 03:04:05 stmx kernel: [46966.947179] vmbr1: port 2(tap100i0) entered disabled state

Jul 9 03:04:06 stmx kernel: [46967.069822] device enp1s0 left promiscuous mode

Jul 9 03:04:06 stmx systemd[1]: 100.scope: Succeeded.

Jul 9 03:04:06 stmx systemd[1]: 100.scope: Consumed 14min 1.119s CPU time.

Jul 9 03:04:06 stmx qmeventd[1670989]: Starting cleanup for 100

Jul 9 03:04:06 stmx qmeventd[1670989]: Finished cleanup for 100

Jul 9 03:04:16 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:04:16 stmx pvestatd[3785]: status update time (5.068 seconds)

Jul 9 03:04:26 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:04:26 stmx pvestatd[3785]: status update time (5.060 seconds)

Jul 9 03:04:33 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:04:42 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:04:51 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:00 stmx systemd[1]: Starting Proxmox VE replication runner...

Jul 9 03:05:01 stmx systemd[1]: pvesr.service: Succeeded.

Jul 9 03:05:01 stmx systemd[1]: Finished Proxmox VE replication runner.

Jul 9 03:05:03 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:04 stmx QEMU[4089]: kvm: terminating on signal 15 from pid 3347 (/usr/sbin/qmeventd)

Jul 9 03:05:04 stmx kernel: [47025.405412] zd160: p1 p2 p3

Jul 9 03:05:04 stmx systemd[1]: Stopping LVM event activation on device 230:163...

Jul 9 03:05:04 stmx systemd[1]: Requested transaction contradicts existing jobs: Resource deadlock avoided

Jul 9 03:05:04 stmx lvm[1673335]: pvscan[1673335] /dev/zd160p3 excluded by filters: device is rejected by filter config.

Jul 9 03:05:04 stmx kernel: [47025.560887] vmbr0: port 2(tap101i0) entered disabled state

Jul 9 03:05:04 stmx systemd[1]: lvm2-pvscan@230:163.service: Succeeded.

Jul 9 03:05:04 stmx systemd[1]: Stopped LVM event activation on device 230:163.

Jul 9 03:05:04 stmx kernel: [47025.616930] device enp0s25 left promiscuous mode

Jul 9 03:05:04 stmx systemd[1]: 101.scope: Succeeded.

Jul 9 03:05:04 stmx systemd[1]: 101.scope: Consumed 9min 25.226s CPU time.

Jul 9 03:05:05 stmx qmeventd[1673387]: Starting cleanup for 101

Jul 9 03:05:05 stmx qmeventd[1673387]: Finished cleanup for 101

Jul 9 03:05:13 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:22 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:31 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:43 stmx pvestatd[3785]: storage 'Backup' is not online

Jul 9 03:05:53 stmx pvestatd[3785]: storage 'Backup' is not online

Last edited: