Hi, I'm using the qm remote-migrate command to migrate VMs from one unclustered host to another over LAN.

Running PVE 7, every package is up to date:

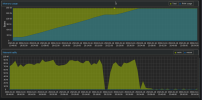

Code:

proxmox-ve: 7.4-1 (running kernel: 5.15.131-2-pve)

pve-manager: 7.4-17 (running version: 7.4-17/513c62be)

pve-kernel-5.15: 7.4-9

pve-kernel-5.15.131-2-pve: 5.15.131-3

pve-kernel-5.15.102-1-pve: 5.15.102-1

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4.1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-2

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.7

libpve-storage-perl: 7.4-3

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.4-1

proxmox-backup-file-restore: 2.4.4-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.7.3

pve-cluster: 7.3-3

pve-container: 4.4-6

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-4~bpo11+1

pve-firewall: 4.3-5

pve-firmware: 3.6-6

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-2

qemu-server: 7.4-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.14-pve1

Slowly but surely, on the target host (the one the VM is being migrated to), this one "pveproxy worker" process creeps up in RAM usage until it's using all available RAM, at which point the migration halts and I have to manually terminate it. Screenshots below are from a currently running migration I've started after I reinstalled Proxmox to make sure it's not a configuration issue on my end.

I can't seem to get the process arguments either:

Code:

root@host:~# ps -p 1286 -o args --no-headers

pveproxy worker

I've done this same migration process before and didn't notice this problem. Those were smaller disks (I'm migrating big >1TB disks now) on a higher-RAM system (768GB; this one has 192GB).
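To put numbers on how fast the worker grows, something like the following could be left running on the target host during a migration. This is only a rough sketch: it assumes a Linux `/proc` filesystem and that the worker keeps the PID shown above (1286), and the function names (`parse_vmrss_kib`, `read_rss_kib`, `log_rss`) are made up for illustration.

```python
import time
from pathlib import Path


def parse_vmrss_kib(status_text: str) -> int:
    """Return the VmRSS value (in KiB) from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            # The line looks like "VmRSS:     123456 kB".
            return int(line.split()[1])
    raise ValueError("no VmRSS line found (kernel threads have none)")


def read_rss_kib(pid: int) -> int:
    """Read the current resident set size of a process from procfs (Linux only)."""
    return parse_vmrss_kib(Path(f"/proc/{pid}/status").read_text())


def log_rss(pid: int, samples: int, interval_s: float) -> list:
    """Print a timestamped RSS reading per sample and return all readings in KiB."""
    readings = []
    for i in range(samples):
        rss = read_rss_kib(pid)
        readings.append(rss)
        print(f"{time.strftime('%H:%M:%S')} pid={pid} rss={rss / 1024:.1f} MiB")
        if i < samples - 1:
            time.sleep(interval_s)  # wait before taking the next sample
    return readings
```

Calling `log_rss(1286, samples=60, interval_s=60)` would give one reading per minute for an hour, which can then be compared against how much disk data the migration has transferred in the same period.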

Edit: Server is on LVM, not ZFS. I don't think it's a kernel disk cache issue, since clearing the RAM cache manually doesn't help. Right now, top reports:

Code:

MiB Mem : 193370.6 total, 75423.4 free, 114605.2 used, 3342.1 buff/cache
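For anyone who wants to compare readings over time, here is a small sketch that turns a `top` memory line like the one above into shares of total RAM. The helper name `parse_top_mem` is hypothetical, and it assumes the default English `top` layout with a dot as the decimal separator.

```python
import re


def parse_top_mem(line: str) -> dict:
    """Parse a `top` summary line ('MiB Mem : ... total, ... free, ...') into floats."""
    fields = {}
    for value, label in re.findall(r"([\d.]+)\s+(total|free|used|buff/cache)", line):
        fields[label] = float(value)
    return fields


# The line reported by top in this post.
line = "MiB Mem : 193370.6 total, 75423.4 free, 114605.2 used, 3342.1 buff/cache"
mem = parse_top_mem(line)

for label in ("free", "used", "buff/cache"):
    share = 100 * mem[label] / mem["total"]
    print(f"{label:>10}: {mem[label]:>10.1f} MiB ({share:.1f}% of total)")
```

With these figures, buff/cache comes out at under 2% of total RAM while "used" is close to 60%, which is consistent with the memory being held by a process rather than by the page cache.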
