Hi,

I know there are a lot of threads about high SWAP usage, but I couldn't find a good explanation or solution. I'd like help understanding why SWAP usage is so high and how to fix it. I gathered the information below and can't see any overuse of RAM by the QEMU VM or the PVE host, so I don't know why SWAP is used so aggressively.

Many thanks in advance for any help!

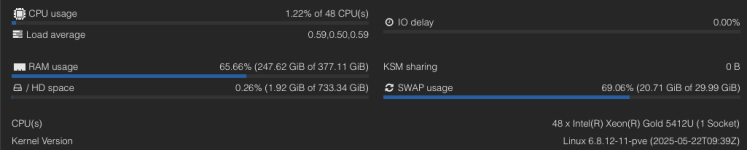

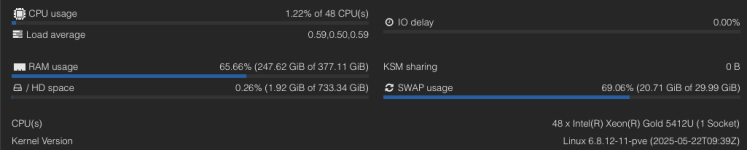

1) Current situation: SWAP usage is over 20 GB

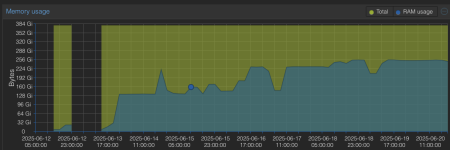


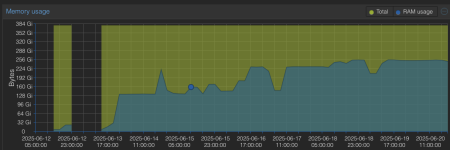

2) The RAM usage overview of the PVE host for "Week (maximum)" does not show an overallocation of RAM
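To cross-check the graphs in 1) and 2) from the shell, a small helper can read /proc/meminfo directly. This is a sketch: the function name `meminfo_summary` is hypothetical, and it assumes the usual /proc/meminfo layout, where these fields are reported in kB (KiB).

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise RAM and swap from a meminfo-format file.
# /proc/meminfo reports these fields in kB (KiB), so dividing by 2^20 gives GiB.
meminfo_summary() {
  awk '
    /^MemTotal:/     { mem_total = $2 }
    /^MemAvailable:/ { mem_avail = $2 }
    /^SwapTotal:/    { swap_total = $2 }
    /^SwapFree:/     { swap_free = $2 }
    END {
      printf "RAM:  %.1f GiB available of %.1f GiB\n", mem_avail / 2^20, mem_total / 2^20
      printf "Swap: %.1f GiB used of %.1f GiB\n", (swap_total - swap_free) / 2^20, swap_total / 2^20
    }' "${1:-/proc/meminfo}"
}

# Usage on the host: meminfo_summary
```

MemAvailable is the kernel's estimate of how much memory can be used for new work without swapping, so it is a better overallocation check than MemFree.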

3) SWAP is a mirror (LVM volume group swap0 with two physical volumes)

Code:

```
root@pve:~# vgdisplay swap0
  --- Volume group ---
  VG Name               swap0
  System ID
  Format                lvm2
  Metadata Areas        2
  Metadata Sequence No  3
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                1
  Open LV               1
  Max PV                0
  Cur PV                2
  Act PV                2
  VG Size               59.99 GiB
  PE Size               4.00 MiB
  Total PE              15358
  Alloc PE / Size       15358 / 59.99 GiB
  Free  PE / Size       0 / 0
```
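A quick check on the vgdisplay numbers: 15358 extents of 4 MiB give 61432 MiB, about 59.99 GiB. If every extent is stored twice (the assumption behind a two-way mirror), the usable size is about 30 GiB, which matches the 30G swap device. A sketch of that arithmetic, with a hypothetical function name `pe_to_gib`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert an LVM extent count to GiB.
# $1 = number of physical extents, $2 = PE size in MiB,
# $3 = copies per extent (default 1; 2 assumes a two-way mirror).
pe_to_gib() {
  local count=$1 pe_mib=$2 copies=${3:-1}
  awk -v c="$count" -v s="$pe_mib" -v k="$copies" \
    'BEGIN { printf "%.2f\n", c * s / k / 1024 }'
}

pe_to_gib 15358 4     # whole VG (Total PE x PE Size): 59.99
pe_to_gib 15358 4 2   # assuming a two-way mirror: 30.00
```

To see the layout directly, `lvs -a -o +devices swap0` lists the hidden sub-volumes of the LV and the PVs each one sits on.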

```
root@pve:~# swapon
NAME      TYPE      SIZE  USED PRIO
/dev/dm-4 partition  30G 20.7G   -2
```

4) Swappiness is set to 1 at startup via /etc/sysctl.d
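A sysctl.d drop-in is a plain `key = value` file. One way to confirm that the drop-in and the running kernel agree is to read the value from the file and compare it with /proc/sys/vm/swappiness. A minimal sketch, assuming a simple file without the `-key` prefix or `/`-separated key names; the function name and the file name in the usage comment are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value a sysctl.d-style file assigns to a key.
# Assumes "key = value" lines; lines starting with '#' or ';' are comments.
# If the key is set more than once, the last assignment in the file is printed.
sysctl_file_value() {
  local file=$1 key=$2
  awk -F= -v k="$key" '
    /^[ \t]*[#;]/ { next }                       # skip comment lines
    {
      name = $1; gsub(/[ \t]/, "", name)         # key without surrounding blanks
      if (name == k) {
        val = $2; gsub(/^[ \t]+|[ \t]+$/, "", val)
      }
    }
    END { if (val != "") print val }' "$file"
}

# Usage (the file name is only an example):
#   sysctl_file_value /etc/sysctl.d/99-swappiness.conf vm.swappiness
#   cat /proc/sys/vm/swappiness
```

Running `sysctl --system` re-applies all drop-ins and prints each file it reads, which shows whether another file sets the same key.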

Code:

```
root@pve:~# cat /proc/sys/vm/swappiness
1
```

5) ZFS ARC is limited at startup via /etc/sysctl.d

Code:

```
root@pve:~# cat /sys/module/zfs/parameters/zfs_arc_max
17179869184
```
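The value read back is exactly 16 GiB (16 × 1024³ bytes), consistent with `size` and `c` of 16G in the arcstat output. Because zfs_arc_max is given in bytes, a helper like the hypothetical `gib_to_bytes` below avoids typos when choosing a limit:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert whole GiB to bytes (the unit zfs_arc_max uses).
# Bash arithmetic is 64-bit, so values of this size are safe.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 16                      # 17179869184, the value read from zfs_arc_max
echo $(( 17179869184 / 1024 ** 3 ))  # 16, the reverse conversion
```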

```
root@pve:~# arcstat
    time  read  ddread  ddh%  dmread  dmh%  pread  ph%   size      c  avail
22:28:13     4       4   100       0     0      0    0    16G    16G   116G
```

6) Identify the process with high SWAP usage: it's /usr/bin/kvm of QEMU VM 151
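The contents of /root/show_swap_usage.sh are not shown. A common way to get the same per-process numbers is to read the `VmSwap` field of /proc/<pid>/status. The sketch below does that, assuming that source and using hypothetical function names, and it prints kB rather than GB:

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for /root/show_swap_usage.sh (the real script is not shown).
# Assumption: per-process swap is read from the VmSwap field of /proc/<pid>/status.

# Print VmSwap (kB) from one status file; print 0 when the field is missing
# (kernel threads have no VmSwap line).
vmswap_kb() {
  awk '/^VmSwap:/ { print $2; found = 1 } END { if (!found) print 0 }' "$1" 2>/dev/null
}

# Print "<swap kB> <pid> <command line>" for every process using swap, largest first.
# $1 = proc root, defaults to /proc (another directory is only useful for testing).
list_swap_usage() {
  local root=${1:-/proc} dir pid kb cmd
  for dir in "$root"/[0-9]*; do
    [ -r "$dir/status" ] || continue           # process may have exited meanwhile
    pid=${dir##*/}
    kb=$(vmswap_kb "$dir/status")
    [ "${kb:-0}" -gt 0 ] || continue
    # cmdline is NUL-separated; turn it into spaces and keep the first 60 chars.
    cmd=$(tr '\0' ' ' 2>/dev/null < "$dir/cmdline" | cut -c1-60)
    printf '%s %s %s\n' "$kb" "$pid" "${cmd% }"
  done | sort -k1,1nr
}

# Usage on the host (as root, to read every process): list_swap_usage | head
```

For a QEMU process, the `-id` argument in the command line identifies the VM, as in the script output.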

Code:

root@pve:~# /root/show_swap_usage.sh

SWAP PID COMMAND

17.31 GB PID: 268197 /usr/bin/kvm -id 151 -name...

1.13 GB PID: 1435137 /usr/bin/kvm -id 161 -name...

0.46 GB PID: 265915 /usr/bin/kvm -id 100 -name...

0.39 GB PID: 269074 /usr/bin/kvm -id 999 -name...

0.34 GB PID: 266530 /usr/bin/kvm -id 110 -name...

0.13 GB PID: 752520 /usr/bin/kvm -id 152 -name...

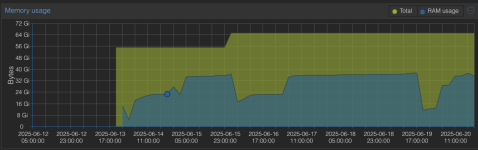

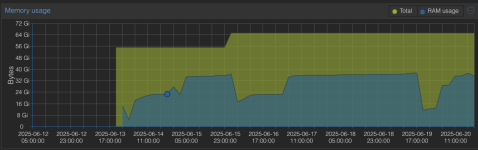

0.13 GB PID: 1631307 /usr/bin/kvm -id 150 -name...7) Overview of the QEMU VM

151 "Week (maximum)" do not show an overallocation of RAM

8) cgroup swap counters (I'm not sure if this is relevant, because I'm not familiar with cgroups)

Code:

```
root@pve:/sys/fs/cgroup/system.slice# ll memory.swap.*
-r--r--r-- 1 root root 0 Jun 20 22:06 memory.swap.current
-r--r--r-- 1 root root 0 Jun 20 22:06 memory.swap.events
-rw-r--r-- 1 root root 0 Jun 20 22:06 memory.swap.high
-rw-r--r-- 1 root root 0 Jun 13 11:27 memory.swap.max
-r--r--r-- 1 root root 0 Jun 20 22:06 memory.swap.peak
root@pve:/sys/fs/cgroup/system.slice# cat memory.swap.*
572837888
high 0
max 0
fail 0
max
max
575606784
root@pve:/sys/fs/cgroup/qemu.slice/151.scope# ll memory.swap.*
-r--r--r-- 1 root root 0 Jun 20 22:04 memory.swap.current
-r--r--r-- 1 root root 0 Jun 20 22:04 memory.swap.events
-rw-r--r-- 1 root root 0 Jun 20 22:04 memory.swap.high
-rw-r--r-- 1 root root 0 Jun 16 00:32 memory.swap.max
-r--r--r-- 1 root root 0 Jun 20 22:04 memory.swap.peak
root@pve:/sys/fs/cgroup/qemu.slice/151.scope# cat memory.swap.*
18597539840
high 0
max 0
fail 0
max
max
18597543936
```
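In cgroup v2, memory.swap.current and memory.swap.peak are byte counts. memory.swap.high and memory.swap.max read `max` when no limit is set, and memory.swap.events counts how often those limits were hit. Because `cat memory.swap.*` prints the values without file names, a labelled view is easier to read. A sketch with a hypothetical function name `cg_swap_report`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: labelled summary of the cgroup v2 swap files in one directory,
# e.g. /sys/fs/cgroup/qemu.slice/151.scope. Byte counters are shown in GiB.
cg_swap_report() {
  local dir=$1 f
  for f in current peak; do
    [ -r "$dir/memory.swap.$f" ] || continue   # memory.swap.peak needs a newer kernel
    awk -v name="$f:" '{ printf "%-9s%.2f GiB\n", name, $1 / 2^30 }' "$dir/memory.swap.$f"
  done
  for f in high max; do
    printf '%-9s%s\n' "$f:" "$(cat "$dir/memory.swap.$f")"
  done
  # memory.swap.events holds one "<event> <count>" pair per line (high, max, fail).
  sed 's/^/events:  /' "$dir/memory.swap.events"
}

# Usage: cg_swap_report /sys/fs/cgroup/qemu.slice/151.scope
```

For 151.scope, 18597539840 bytes is about 17.32 GiB, in line with the 17.31 GB that the script in 6) reports for the same VM.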

Code:

```
proxmox-ve: 8.4.0 (running kernel: 6.8.12-11-pve)
pve-manager: 8.4.1 (running version: 8.4.1/2a5fa54a8503f96d)
proxmox-kernel-helper: 8.1.1
proxmox-kernel-6.8.12-11-pve-signed: 6.8.12-11
proxmox-kernel-6.8: 6.8.12-11
proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9
ceph-fuse: 17.2.8-pve2
corosync: 3.1.9-pve1
criu: 3.17.1-2+deb12u1
frr-pythontools: 10.2.2-1+pve1
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx11
intel-microcode: 3.20250512.1~deb12u1
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-5
libknet1: 1.30-pve2
libproxmox-acme-perl: 1.6.0
libproxmox-backup-qemu0: 1.5.1
libproxmox-rs-perl: 0.3.5
libpve-access-control: 8.2.2
libpve-apiclient-perl: 3.3.2
libpve-cluster-api-perl: 8.1.0
libpve-cluster-perl: 8.1.0
libpve-common-perl: 8.3.1
libpve-guest-common-perl: 5.2.2
libpve-http-server-perl: 5.2.2
libpve-network-perl: 0.11.2
libpve-rs-perl: 0.9.4
libpve-storage-perl: 8.3.6
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 6.0.0-1
lxcfs: 6.0.0-pve2
novnc-pve: 1.6.0-2
proxmox-backup-client: 3.4.1-1
proxmox-backup-file-restore: 3.4.1-1
proxmox-firewall: 0.7.1
proxmox-kernel-helper: 8.1.1
proxmox-mail-forward: 0.3.2
proxmox-mini-journalreader: 1.4.0
proxmox-offline-mirror-helper: 0.6.7
proxmox-widget-toolkit: 4.3.11
pve-cluster: 8.1.0
pve-container: 5.2.6
pve-docs: 8.4.0
pve-edk2-firmware: 4.2025.02-3
pve-esxi-import-tools: 0.7.4
pve-firewall: 5.1.1
pve-firmware: 3.15-4
pve-ha-manager: 4.0.7
pve-i18n: 3.4.4
pve-qemu-kvm: 9.2.0-5
pve-xtermjs: 5.5.0-2
qemu-server: 8.3.12
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.2.7-pve2
```
