Kinda feels like this has been done to death, but I'm struggling to find my EXACT issue, so if I've missed a post that fixes this, sorry...

My Proxmox is a BTRFS (ext4) disk setup (no LVM, no ZFS, which a lot of the posts seem to be about).

I have a Debian VM running my Docker build. I initially set this up with a 128GB scsi0 disk (why 128GB, I do not know).

I want to shrink this to 32GB. The qcow2 currently lives on my BTRFS pool storage, which is a spinning-platter RAID array of HDDs; I'd like it on my `local-btrfs` SSD for obvious reasons. My SSD is only 256GB, so at its current size this one VM alone would use 50% of the available space.

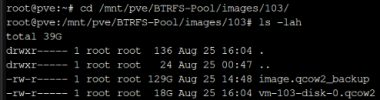

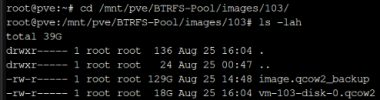

`ls -lah` of the directory gives an 18GB file size after running `fstrim -av` from within the running VM, then `dd if=/dev/zero of=/mytempfile && rm -f /mytempfile`, and then the `qemu-img convert...` commands listed at https://pve.proxmox.com/wiki/Shrink_Qcow2_Disk_Files

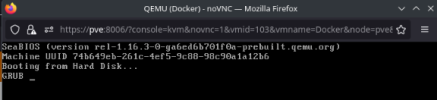

The VM quite happily boots from this qcow2 file and acts "normally"; however, both the VM > Hardware tab and the disk as seen from within the running VM still show the disk size as 128GB.
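To make the two numbers concrete: the 18GB from `ls` is the size of the file on the host, while the 128GB is the virtual size recorded inside the qcow2 header. Below is a rough sketch for reading that header field directly, assuming the standard qcow2 layout (magic `QFI\xfb` at offset 0, big-endian 64-bit virtual size at byte offset 24). The function name `qcow2_virtual_size` is just something made up for illustration; `qemu-img info` reports the same value as "virtual size" next to "disk size".

```shell
#!/bin/sh
# Print the virtual (guest-visible) disk size, in bytes, stored in a qcow2 header.
# Assumption: standard qcow2 layout, i.e. 4-byte magic "QFI\xfb" at offset 0
# and a big-endian 64-bit virtual size at byte offset 24.
qcow2_virtual_size() {
    img=$1

    # First 4 bytes as hex; a qcow2 image starts with 51 46 49 fb.
    magic=$(od -An -tx1 -N4 "$img" | tr -d ' \n')
    if [ "$magic" != "514649fb" ]; then
        echo "not a qcow2 image: $img" >&2
        return 1
    fi

    # Bytes 24..31 as one hex string, then converted to decimal.
    hex=$(od -An -tx1 -j24 -N8 "$img" | tr -d ' \n')
    printf '%d\n' "0x$hex"
}
```

Run as `qcow2_virtual_size /path/to/disk.qcow2` (placeholder path). A 128GB virtual disk prints `137438953472` regardless of how small the file itself is on the host.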

Any advice warmly received. It may be a case of user error; I'm happy to accept that.

My other option is to attempt Clonezilla again, cloning from the large disk to a smaller disk.

And my least favoured... rebuild the VM with a fresh Debian install, and either `cp` the files across or start from scratch with Docker...

I have a lot of it documented, so it wouldn't be hard, just time-consuming.
