Inside the Proxmox CLI, it shows:

/dev/nvme0n1p1 3.2T 40G 3.2T 2% /mnt/pve/VM-Disk

/dev/nvme1n1p1 3.2T 14G 3.0T 1% /mnt/pve/VM-Disk2

These are my two main storage disks.

And here is the config file for the VM:

balloon: 2048

boot: order=scsi0;net0

cores: 6

memory: 49150

meta: creation-qemu=6.2.0,ctime=1663515855

name: eth2-prox

net0: vmxnet3=B6:27:39:95:88:F0,bridge=vmbr0

numa: 0

onboot: 1

ostype: l26

parent: fixed04092024

scsi0: VM-Disk3:103/vm-103-disk-0.qcow2,discard=on,size=50G

scsi1: VM-Disk3:103/vm-103-disk-1.qcow2,backup=0,cache=writethrough,discard=on,size=2000G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=68370242-2d1a-4375-8ab1-bf71f80f2dac

sockets: 3

startup: up=5

vmgenid: dfc8e04e-c59a-4ca4-9e12-8e65a21c96fe

[fixed04092024]

#After Spectrum Internet Issues

balloon: 2048

boot: order=scsi0;net0

cores: 6

memory: 49150

meta: creation-qemu=6.2.0,ctime=1663515855

name: eth2-prox

net0: vmxnet3=B6:27:39:95:88:F0,bridge=vmbr0

numa: 0

onboot: 1

ostype: l26

scsi0: VM-Disk3:103/vm-103-disk-0.qcow2,discard=on,size=50G

scsi1: VM-Disk3:103/vm-103-disk-1.qcow2,backup=0,cache=writethrough,discard=on,size=2000G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=68370242-2d1a-4375-8ab1-bf71f80f2dac

snapstate: delete

snaptime: 1712710238

sockets: 3

startup: up=5

vmgenid: dfc8e04e-c59a-4ca4-9e12-8e65a21c96fe

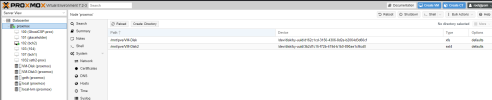

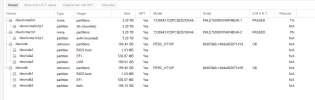

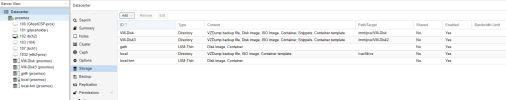

I provided screenshots of the GUI so you can understand the disks. Unfortunately, it looks like the location where the images were is mounted.

Here's the lsblk output as well:

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 185.8G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part /boot/efi

└─sda3 8:3 0 185.2G 0 part

  ├─pve-swap 253:0 0 24G 0 lvm [SWAP]

  ├─pve-root 253:1 0 46.3G 0 lvm /

  ├─pve-data_tmeta 253:2 0 1G 0 lvm

  │ └─pve-data-tpool 253:4 0 97G 0 lvm

  │   └─pve-data 253:5 0 97G 1 lvm

  └─pve-data_tdata 253:3 0 97G 0 lvm

    └─pve-data-tpool 253:4 0 97G 0 lvm

      └─pve-data 253:5 0 97G 1 lvm

sdb 8:16 0 185.8G 0 disk

├─sdb1 8:17 0 1007K 0 part

├─sdb2 8:18 0 512M 0 part

└─sdb3 8:19 0 184.5G 0 part

sr0 11:0 1 1024M 0 rom

nvme1n1 259:0 0 2.9T 0 disk

└─nvme1n1p1 259:2 0 2.9T 0 part /mnt/pve/VM-Disk2

nvme0n1 259:1 0 2.9T 0 disk

└─nvme0n1p1 259:3 0 2.9T 0 part /mnt/pve/VM-Disk

And the fdisk output:

Disk /dev/nvme1n1: 2.91 TiB, 3200631791616 bytes, 6251233968 sectors

Disk model: 7335943:ICDPC5ED2ORA6.4T

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 48C896CD-6C5B-45BF-8334-03481C64856F

Device Start End Sectors Size Type

/dev/nvme1n1p1 2048 6251233934 6251231887 2.9T Linux filesystem

Disk /dev/nvme0n1: 2.91 TiB, 3200631791616 bytes, 6251233968 sectors

Disk model: 7335943:ICDPC5ED2ORA6.4T

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 7ACC2B1F-4FE6-479F-B119-1F3B7011EB58

Device Start End Sectors Size Type

/dev/nvme0n1p1 2048 6251233934 6251231887 2.9T Linux filesystem

Disk /dev/sda: 185.75 GiB, 199447543808 bytes, 389545984 sectors

Disk model: PERC H710P

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 6B032A2B-4307-4B9C-B386-C03D60025859

Device Start End Sectors Size Type

/dev/sda1 34 2047 2014 1007K BIOS boot

/dev/sda2 2048 1050623 1048576 512M EFI System

/dev/sda3 1050624 389545950 388495327 185.2G Linux LVM

Disk /dev/sdb: 185.75 GiB, 199447543808 bytes, 389545984 sectors

Disk model: PERC H710P

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: CA124B59-7DDF-453B-96A4-29B27C5C110E

Device Start End Sectors Size Type

/dev/sdb1 34 2047 2014 1007K BIOS boot

/dev/sdb2 2048 1050623 1048576 512M EFI System

/dev/sdb3 1050624 387973120 386922497 184.5G Linux filesystem

Disk /dev/mapper/pve-swap: 24 GiB, 25769803776 bytes, 50331648 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/pve-root: 46.25 GiB, 49660559360 bytes, 96993280 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes