Hello everyone,

As part of a migration from VMware to Proxmox, which also replaces the hardware of a Dell PowerEdge R7525 (which will be kept as a backup), we purchased and assembled a server with the following components:

- Supermicro H14SSL-N motherboard, SP5 socket (latest BIOS and BMC)
- AMD EPYC 9475F processor, 48 cores / 96 threads
- 12x DDR5-6400 ECC registered DIMMs
- FSP Pro Twins 2x 930 W power supply
- 4x Kingston DC3000ME 7.68 TB enterprise Gen5 NVMe drives with PLP (spec: https://www.kingston.com/en/ssd/dc3000me-data-centre-solid-state-drive?Capacity=7.68tb )
- Cooling: AIO, 4x Noctua IPPC 140 mm fans, 3x Noctua IPPC 120 mm fans, 5x Arctic P8 Max 80 mm fans
- 3x Intel X710 PCIe NICs

The NVMe drives are connected directly to the motherboard through two MCIO 8i ports (x8 each; I tested all three ports on the board), and each drive negotiates a PCIe Gen5 x4 link.
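To confirm the negotiated link from the Proxmox host itself (rather than from the BIOS or from Windows), the Linux sysfs attributes `current_link_speed` and `current_link_width` of each NVMe controller's PCI device can be read. This is a minimal sketch that assumes the usual `/sys/class/nvme/nvmeN/device` symlink layout. It also computes the theoretical Gen5 x4 ceiling (32 GT/s per lane, 128b/130b encoding), about 15.75 GB/s per drive before protocol overhead.

```python
"""Report the negotiated PCIe link of each NVMe controller via Linux sysfs."""
from pathlib import Path
from typing import Optional

# PCIe Gen3 and later (8 GT/s and up) use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def parse_speed_gts(text: str) -> float:
    """Turn a sysfs speed string such as '32.0 GT/s PCIe' into 32.0."""
    return float(text.split()[0])


def raw_bandwidth_gb_s(speed_gts: float, width: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, ignoring protocol overhead.

    Only meaningful for Gen3+ links, which use 128b/130b encoding.
    """
    return speed_gts * width * ENCODING_EFFICIENCY / 8


def read_attr(dev: Path, name: str) -> Optional[str]:
    """Return a stripped sysfs attribute, or None if it does not exist."""
    path = dev / name
    return path.read_text().strip() if path.exists() else None


def report(sys_root: Path = Path("/sys/class/nvme")) -> None:
    # Assumption: /sys/class/nvme/nvmeN/device points at the PCI function.
    for ctrl in sorted(sys_root.glob("nvme*")):
        dev = ctrl / "device"
        speed = read_attr(dev, "current_link_speed")
        width = read_attr(dev, "current_link_width")
        if speed is None or width is None:
            print(f"{ctrl.name}: no PCIe link attributes found")
            continue
        max_speed = read_attr(dev, "max_link_speed")
        max_width = read_attr(dev, "max_link_width")
        try:
            bw = raw_bandwidth_gb_s(parse_speed_gts(speed), int(width))
        except ValueError:
            # sysfs may report e.g. "Unknown" when the speed is not known.
            print(f"{ctrl.name}: unparseable link info {speed!r} x{width}")
            continue
        print(f"{ctrl.name}: {speed} x{width} (max {max_speed} x{max_width}), "
              f"~{bw:.2f} GB/s theoretical")


if __name__ == "__main__":
    report()
```

On this server, each of the four drives would be expected to report 32.0 GT/s x4.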

On the first Proxmox installation, with ZFS RAID10, I got rather disappointing results from a freshly installed Windows VM. I spent a few hours looking for suitable tweaks, without success.

I then installed Windows 11 directly on the server to run CrystalDiskMark on each NVMe drive individually, and the results for each drive matched what could be expected.

I then reinstalled Proxmox with the OS on the onboard M.2 slot so that I could test different scenarios on the NVMe drives.

The VM disk is attached to a SCSI controller, and I tried different combinations of cache mode (no cache, writeback, directsync) and async I/O mode (native, threads, io_uring).
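Because several cache and AIO combinations were tried, generating the full matrix systematically can make each run reproducible. The sketch below only prints `qm set` commands; the VM ID `100` and the volume `local-zfs:vm-100-disk-0` are hypothetical placeholders. It skips `aio=native` with `cache=writeback`, since QEMU's native AIO requires the image to be opened with O_DIRECT (`cache.direct=on`), which holds for `none` and `directsync`. Setting the controller to `virtio-scsi-single` is included because IO threads are assigned per controller.

```python
"""Print qm commands for a cache x aio test matrix (hypothetical IDs)."""
from itertools import product

VMID = 100                          # hypothetical VM ID
VOLUME = "local-zfs:vm-100-disk-0"  # hypothetical storage:volume
CACHE_MODES = ["none", "writeback", "directsync"]
AIO_MODES = ["native", "threads", "io_uring"]
# Cache modes that open the image with O_DIRECT (cache.direct=on in QEMU).
DIRECT_CACHE_MODES = {"none", "directsync"}


def build_matrix(vmid: int = VMID, volume: str = VOLUME) -> list:
    """Return one qm command per valid cache/aio combination."""
    # One controller per disk, so each disk can get its own IO thread.
    commands = [f"qm set {vmid} --scsihw virtio-scsi-single"]
    for cache, aio in product(CACHE_MODES, AIO_MODES):
        # QEMU rejects aio=native unless the image is opened with O_DIRECT.
        if aio == "native" and cache not in DIRECT_CACHE_MODES:
            continue
        commands.append(
            f"qm set {vmid} --scsi0 {volume},cache={cache},aio={aio},iothread=1"
        )
    return commands


if __name__ == "__main__":
    for cmd in build_matrix():
        print(cmd)
```

Each command would be applied with the VM powered off, followed by the same CrystalDiskMark run, so that only one variable changes between runs.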

I also tried changing the following ZFS properties:

- direct=always
- primarycache=metadata
- atime=off
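To confirm that these properties are actually in effect on the zvol backing the VM disk, the output of `zfs get -H -o property,value` can be parsed. This is a sketch only: the dataset name `rpool/data/vm-100-disk-0` is a hypothetical placeholder, and the `direct` property only exists in OpenZFS 2.3 and later, so older releases will reject the query.

```python
"""Check ZFS properties on the zvol backing a VM disk (sketch)."""
import subprocess
from typing import Dict

# Hypothetical dataset name; list real zvols with `zfs list -t volume`.
DATASET = "rpool/data/vm-100-disk-0"
EXPECTED = {"direct": "always", "primarycache": "metadata", "atime": "off"}


def parse_zfs_get(output: str) -> Dict[str, str]:
    """Parse `zfs get -H -o property,value ...` (tab-separated, no header)."""
    props = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        prop, value = line.split("\t", 1)
        props[prop] = value
    return props


def check(dataset: str = DATASET) -> Dict[str, str]:
    """Query the properties and print whether each matches EXPECTED."""
    out = subprocess.run(
        ["zfs", "get", "-H", "-o", "property,value", ",".join(EXPECTED), dataset],
        check=True, capture_output=True, text=True,
    ).stdout
    actual = parse_zfs_get(out)
    for prop, want in EXPECTED.items():
        got = actual.get(prop, "<not reported>")
        status = "ok" if got == want else "MISMATCH"
        print(f"{prop:13} want={want:9} got={got:15} {status}")
    return actual


if __name__ == "__main__":
    try:
        check()
    except FileNotFoundError:
        print("zfs command not found; run this on the Proxmox host")
    except subprocess.CalledProcessError as exc:
        print(f"zfs get failed: {exc.stderr.strip()}")
```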

I also tried creating a ZFS pool on a single NVMe drive, and the results from the VM were just as poor.

How I obtained the throughput / IOPS results:

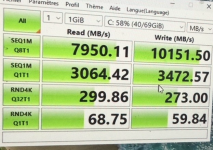

CrystalDiskMark on the Windows VM: the best result I have managed so far on ZFS RAID10, with no cache / threads / IO thread enabled:

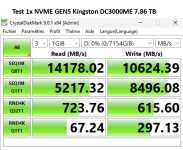

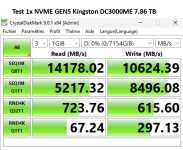

These captures show a benchmark from Windows 11 installed directly on the server (a single NVMe drive, no RAID):

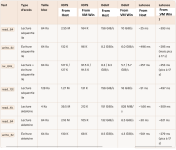

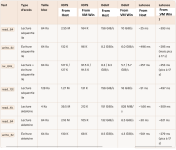

I also ran fio from the Windows VM, and took the opportunity to run it against the zvol from the host. Here are the results:

For these tests I used the configuration shared by a forum member (thanks to him), available here: https://gist.github.com/NiceRath/f938a400e3b2c8997ff0556cced27b30

The only change, on Windows, was setting ioengine=windowsaio.
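Since the I/O engine is the only difference between the Linux and Windows runs, the change can be scripted so both job files otherwise stay identical. This is a minimal sketch that assumes the job file sets the engine with explicit `ioengine=` lines (the gist's contents are not reproduced here); the script name `fio_win.py` and the file names in the usage comment are hypothetical.

```python
"""Derive a Windows variant of a fio job file by swapping the ioengine."""
import re
import sys

# Matches whole lines like "ioengine=libaio" or "  ioengine = io_uring".
# [ \t] is used instead of \s so the pattern never swallows a newline.
IOENGINE_RE = re.compile(r"^([ \t]*ioengine[ \t]*=[ \t]*)\S+[ \t]*$", re.MULTILINE)


def to_windows(job_text: str, engine: str = "windowsaio") -> str:
    """Replace every ioengine value; all other options are left untouched."""
    new_text, count = IOENGINE_RE.subn(lambda m: m.group(1) + engine, job_text)
    if count == 0:
        raise ValueError("no ioengine= line found; add one to the [global] section")
    return new_text


if __name__ == "__main__":
    # Usage: python fio_win.py zfs-bench.fio > zfs-bench-windows.fio
    if len(sys.argv) == 2:
        with open(sys.argv[1], encoding="utf-8") as fh:
            sys.stdout.write(to_windows(fh.read()))
    else:
        print("usage: fio_win.py JOBFILE > WINDOWS_JOBFILE")
```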

Even though I knew ZFS is not primarily performance-oriented, these numbers seem very low. For example, CrystalDiskMark run from a VM on our current R7525, with a PERC RAID controller and 4x Gen4 NVMe drives from 2021, performs much better.
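To judge how far off the VM results are, a rough upper bound for a 4-drive ZFS RAID10 (two mirrored pairs striped together) can be derived from the single-drive numbers measured on bare-metal Windows. Reads can in principle be served by all four drives, while every write must land on both drives of a mirror, so writes scale only with the number of pairs. This is an idealized model that ignores ZFS overhead (checksums, copy-on-write) and the virtualization layer, and the example inputs are placeholders, not the measured values from the screenshots.

```python
"""Idealized throughput ceiling for striped mirrors (ZFS 'RAID10')."""
from dataclasses import dataclass


@dataclass
class DriveSpeed:
    read_mbps: float   # sequential read, MB/s
    write_mbps: float  # sequential write, MB/s


def striped_mirror_ceiling(drive: DriveSpeed, vdevs: int, width: int = 2) -> DriveSpeed:
    """Upper bound for `vdevs` mirror vdevs of `width` drives each.

    Reads can be spread over every drive; each write goes to all drives of
    one mirror, so writes scale only with the number of vdevs.
    """
    return DriveSpeed(
        read_mbps=drive.read_mbps * vdevs * width,
        write_mbps=drive.write_mbps * vdevs,
    )


if __name__ == "__main__":
    # Placeholder single-drive figures, NOT the values from the screenshots.
    single = DriveSpeed(read_mbps=10_000, write_mbps=8_000)
    ceiling = striped_mirror_ceiling(single, vdevs=2)
    print(f"read <= {ceiling.read_mbps:,.0f} MB/s, "
          f"write <= {ceiling.write_mbps:,.0f} MB/s")
```

Real results will sit well below this bound, but comparing the VM and host-zvol numbers against it shows whether the loss comes mostly from ZFS or from the VM layer.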

I must have missed something...

I would welcome any advice on improving throughput, IOPS and latency.

Thank you for your help.
