Hi,

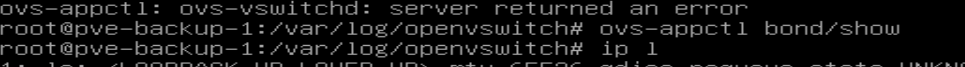

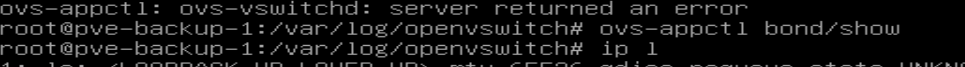

The server's ens1f* interfaces are down after a PVE reboot. Both interfaces are bonded and used via openvswitch-switch.

The first half shows ens1f* down and one VM starting (tap10110). The second half shows both links still down and the VM failing to start, so its tap interface is removed. All bond/OVS interfaces are missing.

Code:

proxmox-ve: 7.3-1 (running kernel: 5.15.85-1-pve)

pve-manager: 7.3-6 (running version: 7.3-6/723bb6ec)

pve-kernel-helper: 7.3-4

pve-kernel-5.15: 7.3-2

pve-kernel-5.13: 7.1-9

pve-kernel-5.15.85-1-pve: 5.15.85-1

pve-kernel-5.15.60-1-pve: 5.15.60-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 14.2.21-1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.3

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-1

lxcfs: 5.0.3-pve1

novnc-pve: 1.3.0-3

openvswitch-switch: 2.15.0+ds1-2+deb11u2.1

proxmox-backup-client: 2.3.3-1

proxmox-backup-file-restore: 2.3.3-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.5

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-2

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

root@pve-backup-1:~# cat /etc/network/interfaces

auto lo

iface lo inet loopback

iface eno1 inet manual

iface eno2 inet manual

iface eno3 inet manual

iface eno4 inet manual

auto ens1f0

iface ens1f0 inet manual

auto ens1f1

iface ens1f1 inet manual

auto bond0

iface bond0 inet manual

ovs_bridge vmbr0

ovs_type OVSBond

ovs_bonds ens1f0 ens1f1

ovs_options bond_mode=balance-tcp lacp=active

auto vmbr0

iface vmbr0 inet manual

ovs_type OVSBridge

ovs_ports bond0 mgmt mgmt4 nfs_backup

auto mgmt

iface mgmt inet6 static

address IPv6

netmask 64

gateway IPv6_GW

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_options tag=VLANID

#pve-backup-1 node management

auto mgmt4

iface mgmt4 inet static

address IPv4

netmask 255.255.255.0

gateway IPv4_GW

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_options tag=VLANID

#pve-backup-1 node management

auto nfs_backup

iface nfs_backup inet6 static

address IPv6

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_options tag=VLANID

commen6 nfs backup

#nfs backup pve

Code:

root@pve-backup-1:~# aptitude search ifupdown

p ifupdown - high level tools to configure network interfaces

i ifupdown2

root@pve-backup-1:~# aptitude search iproute

i iproute2

root@pve-backup-1:~# aptitude search ifenslave

p ifenslave

10:15 - login available, no network

10:19 - systemctl restart networking

Code:

Feb 24 10:15:35 pve-backup-1 kernel: [ 2.878263] i40e: Copyright (c) 2013 - 2019 Intel Corporation.

Feb 24 10:15:35 pve-backup-1 kernel: [ 2.895736] i40e 0000:08:00.0: fw 5.0.40043 api 1.5 nvm 5.05 0x80002927 1.1313.0 [8086:1572] [8086:0007]

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.146649] i40e 0000:08:00.0: MAC address: 3c:fd:fe:ab:18:e4

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.146846] i40e 0000:08:00.0: FW LLDP is disabled

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.146849] i40e 0000:08:00.0: FW LLDP is disabled, attempting SW DCB

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.166845] i40e 0000:08:00.0: SW DCB initialization succeeded.

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.199026] i40e 0000:08:00.0 eth0: NIC Link is Up, 10 Gbps Full Duplex, Flow Control: None

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.217758] i40e 0000:08:00.0: PCI-Express: Speed 8.0GT/s Width x8

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.253562] i40e 0000:08:00.0: Features: PF-id[0] VFs: 64 VSIs: 66 QP: 24 RSS FD_ATR FD_SB NTUPLE DCB VxLAN Geneve PTP VEPA

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.266770] i40e 0000:08:00.1: fw 5.0.40043 api 1.5 nvm 5.05 0x80002927 1.1313.0 [8086:1572] [8086:0007]

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.501339] i40e 0000:08:00.1: MAC address: 3c:fd:fe:ab:18:e5

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.501531] i40e 0000:08:00.1: FW LLDP is disabled

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.501532] i40e 0000:08:00.1: FW LLDP is disabled, attempting SW DCB

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.522398] i40e 0000:08:00.1: SW DCB initialization succeeded.

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.562701] i40e 0000:08:00.1: PCI-Express: Speed 8.0GT/s Width x8

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.597639] i40e 0000:08:00.1: Features: PF-id[1] VFs: 64 VSIs: 66 QP: 24 RSS FD_ATR FD_SB NTUPLE DCB VxLAN Geneve PTP VEPA

Feb 24 10:15:35 pve-backup-1 kernel: [ 5.150914] i40e 0000:08:00.0 ens1f0: renamed from eth0

Feb 24 10:15:35 pve-backup-1 kernel: [ 5.241462] i40e 0000:08:00.1 ens1f1: renamed from eth3

Feb 24 10:15:35 pve-backup-1 lldpd[1048]: i40e driver detected for ens1f0, disabling LLDP in firmware

Feb 24 10:15:35 pve-backup-1 kernel: [ 12.921210] i40e 0000:08:00.0: Stop LLDP AQ command failed =0x1

Feb 24 10:15:35 pve-backup-1 lldpd[1048]: i40e driver detected for ens1f1, disabling LLDP in firmware

Feb 24 10:15:35 pve-backup-1 kernel: [ 13.009199] i40e 0000:08:00.1: Stop LLDP AQ command failed =0x1

Feb 23 15:39:54 pve-backup-1 lxcfs[950]: api_extensions:

Feb 23 15:39:54 pve-backup-1 kernel: [ 1.496254] ACPI: Added _OSI(3.0 _SCP Extensions)

Feb 23 15:39:54 pve-backup-1 kernel: [ 3.195116] sd 1:1:0:0: [sda] Mode Sense: 73 00 00 08

Feb 23 15:39:54 pve-backup-1 kernel: [ 3.718618] mpt2sas_cm0: sense pool(0x(____ptrval____)) - dma(0x116b00000): depth(8059), element_size(96), pool_size (755 kB)

Feb 23 15:39:54 pve-backup-1 kernel: [ 3.718622] mpt2sas_cm0: sense pool(0x(____ptrval____))- dma(0x116b00000): depth(8059),element_size(96), pool_size(0 kB)

Feb 23 15:39:54 pve-backup-1 kernel: [ 5.144964] i40e 0000:08:00.0 ens1f0: renamed from eth0

Feb 23 15:39:54 pve-backup-1 kernel: [ 5.160347] i40e 0000:08:00.1 ens1f1: renamed from eth2

Feb 23 15:39:54 pve-backup-1 kernel: [ 10.570950] znvpair: module license 'CDDL' taints kernel.

Feb 23 15:39:54 pve-backup-1 kernel: [ 11.248037] ipmi_si IPI0001:00: The BMC does not support clearing the recv irq bit, compensating, but the BMC needs to be fixed.

Feb 23 15:39:54 pve-backup-1 lldpd[1037]: i40e driver detected for ens1f0, disabling LLDP in firmware

Feb 23 15:39:54 pve-backup-1 lldpd[1037]: i40e driver detected for ens1f1, disabling LLDP in firmware

Feb 23 15:44:12 pve-backup-1 kernel: [ 270.261688] i40e 0000:08:00.1 ens1f1: NIC Link is Up, 10 Gbps Full Duplex, Flow Control: None

Feb 23 15:46:19 pve-backup-1 kernel: [ 397.873195] IPv6: ADDRCONF(NETDEV_CHANGE): ens1f0: link becomes ready

Feb 23 15:46:20 pve-backup-1 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl -- --may-exist --fake-iface add-bond vmbr0 bond0 ens1f0 ens1f1 -- --if-exists clear port bond0 bond_active_slave bond_mode cvlans external_ids lacp mac other_config qos tag trunks vlan_mode -- --if-exists clear interface bond0 mtu_request external-ids other_config options -- set Port bond0 bond_mode=balance-tcp lacp=active

Feb 23 15:46:20 pve-backup-1 kernel: [ 398.417147] device ens1f0 entered promiscuous mode

Feb 23 15:46:20 pve-backup-1 kernel: [ 398.417634] device ens1f1 entered promiscuous mode

Feb 23 15:46:20 pve-backup-1 kernel: [ 398.880251] IPv6: ADDRCONF(NETDEV_CHANGE): ens1f1: link becomes ready

Feb 24 10:15:35 pve-backup-1 kernel: [ 1.497002] ACPI: Added _OSI(3.0 _SCP Extensions)

Feb 24 10:15:35 pve-backup-1 lxcfs[962]: api_extensions:

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.217071] sd 1:1:0:0: [sda] Mode Sense: 73 00 00 08

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.748765] mpt2sas_cm0: sense pool(0x(____ptrval____)) - dma(0x117100000): depth(8059), element_size(96), pool_size (755 kB)

Feb 24 10:15:35 pve-backup-1 kernel: [ 3.748770] mpt2sas_cm0: sense pool(0x(____ptrval____))- dma(0x117100000): depth(8059),element_size(96), pool_size(0 kB)

Feb 24 10:15:35 pve-backup-1 kernel: [ 5.150914] i40e 0000:08:00.0 ens1f0: renamed from eth0

Feb 24 10:15:35 pve-backup-1 kernel: [ 5.241462] i40e 0000:08:00.1 ens1f1: renamed from eth3

Feb 24 10:15:35 pve-backup-1 kernel: [ 10.381413] znvpair: module license 'CDDL' taints kernel.

Feb 24 10:15:35 pve-backup-1 kernel: [ 11.012765] ipmi_si IPI0001:00: The BMC does not support clearing the recv irq bit, compensating, but the BMC needs to be fixed.

Feb 24 10:15:35 pve-backup-1 lldpd[1048]: i40e driver detected for ens1f0, disabling LLDP in firmware

Feb 24 10:15:35 pve-backup-1 lldpd[1048]: i40e driver detected for ens1f1, disabling LLDP in firmware

Feb 24 10:19:07 pve-backup-1 kernel: [ 224.518178] IPv6: ADDRCONF(NETDEV_CHANGE): ens1f0: link becomes ready

Feb 24 10:19:07 pve-backup-1 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl -- --may-exist --fake-iface add-bond vmbr0 bond0 ens1f0 ens1f1 -- --if-exists clear port bond0 bond_active_slave bond_mode cvlans external_ids lacp mac other_config qos tag trunks vlan_mode -- --if-exists clear interface bond0 mtu_request external-ids other_config options -- set Port bond0 bond_mode=balance-tcp lacp=active

Feb 24 10:19:07 pve-backup-1 kernel: [ 225.091034] device ens1f1 entered promiscuous mode

Feb 24 10:19:07 pve-backup-1 kernel: [ 225.091548] device ens1f0 entered promiscuous mode
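The syslog excerpt above is filtered, so it does not show whether the networking unit itself ran or failed at boot. A minimal sketch of what could be captured after the next reboot, before restarting networking by hand. It assumes the stock Debian/PVE unit names `networking.service` and `openvswitch-switch.service`; the function name `collect_boot_net_state` is only illustrative:

```shell
#!/bin/sh
# Hypothetical helper: capture network state right after boot,
# before any manual "systemctl restart networking".
collect_boot_net_state() {
    # Did the units run at boot, and did they fail or time out?
    systemctl --no-pager status networking.service openvswitch-switch.service
    # ifupdown2 messages from the current boot only
    journalctl --no-pager -b -u networking.service
    # What OVS currently knows about bridges and ports
    ovs-vsctl show
    # Brief link state of all interfaces
    ip -br link show
}
```

Calling `collect_boot_net_state > /root/boot-net-state.txt 2>&1` from the console would keep the output for comparison with the state after the manual restart (the output path is just an example).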

Code:

2023-02-24T09:15:35.347Z|00001|vlog|INFO|opened log file /var/log/openvswitch/ovs-vswitchd.log

2023-02-24T09:15:35.371Z|00002|ovs_numa|INFO|Discovered 12 CPU cores on NUMA node 0

2023-02-24T09:15:35.371Z|00003|ovs_numa|INFO|Discovered 12 CPU cores on NUMA node 1

2023-02-24T09:15:35.371Z|00004|ovs_numa|INFO|Discovered 2 NUMA nodes and 24 CPU cores

2023-02-24T09:15:35.371Z|00005|reconnect|INFO|unix:/var/run/openvswitch/db.sock: connecting...

2023-02-24T09:15:35.372Z|00006|reconnect|INFO|unix:/var/run/openvswitch/db.sock: connected

2023-02-24T09:15:35.377Z|00007|bridge|INFO|ovs-vswitchd (Open vSwitch) 2.15.0

2023-02-24T09:15:47.614Z|00008|memory|INFO|14156 kB peak resident set size after 12.3 seconds

2023-02-24T09:18:46.863Z|00001|vlog|INFO|opened log file /var/log/openvswitch/ovs-vswitchd.log

2023-02-24T09:18:46.865Z|00002|ovs_numa|INFO|Discovered 12 CPU cores on NUMA node 0

2023-02-24T09:18:46.865Z|00003|ovs_numa|INFO|Discovered 12 CPU cores on NUMA node 1

2023-02-24T09:18:46.865Z|00004|ovs_numa|INFO|Discovered 2 NUMA nodes and 24 CPU cores

2023-02-24T09:18:46.865Z|00005|reconnect|INFO|unix:/var/run/openvswitch/db.sock: connecting...

2023-02-24T09:18:46.865Z|00006|reconnect|INFO|unix:/var/run/openvswitch/db.sock: connected

2023-02-24T09:18:46.871Z|00007|bridge|INFO|ovs-vswitchd (Open vSwitch) 2.15.0

2023-02-24T09:19:07.265Z|00008|memory|INFO|13940 kB peak resident set size after 20.4 seconds

2023-02-24T09:19:07.589Z|00009|ofproto_dpif|INFO|system@ovs-system: Datapath supports recirculation

2023-02-24T09:19:07.589Z|00010|ofproto_dpif|INFO|system@ovs-system: VLAN header stack length probed as 2

2023-02-24T09:19:07.589Z|00011|ofproto_dpif|INFO|system@ovs-system: MPLS label stack length probed as 3

2023-02-24T09:19:07.589Z|00012|ofproto_dpif|INFO|system@ovs-system: Datapath supports truncate action

2023-02-24T09:19:07.590Z|00013|ofproto_dpif|INFO|system@ovs-system: Datapath supports unique flow ids

2023-02-24T09:19:07.590Z|00014|ofproto_dpif|INFO|system@ovs-system: Datapath supports clone action

2023-02-24T09:19:07.590Z|00015|ofproto_dpif|INFO|system@ovs-system: Max sample nesting level probed as 10

2023-02-24T09:19:07.590Z|00016|ofproto_dpif|INFO|system@ovs-system: Datapath supports eventmask in conntrack action

2023-02-24T09:19:07.590Z|00017|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_clear action

2023-02-24T09:19:07.590Z|00018|ofproto_dpif|INFO|system@ovs-system: Max dp_hash algorithm probed to be 0

2023-02-24T09:19:07.590Z|00019|ofproto_dpif|INFO|system@ovs-system: Datapath supports check_pkt_len action

2023-02-24T09:19:07.590Z|00020|ofproto_dpif|INFO|system@ovs-system: Datapath supports timeout policy in conntrack action

2023-02-24T09:19:07.590Z|00021|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_state

2023-02-24T09:19:07.590Z|00022|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_zone

2023-02-24T09:19:07.590Z|00023|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_mark

2023-02-24T09:19:07.590Z|00024|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_label

2023-02-24T09:19:07.591Z|00025|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_state_nat

2023-02-24T09:19:07.591Z|00026|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_orig_tuple

2023-02-24T09:19:07.591Z|00027|ofproto_dpif|INFO|system@ovs-system: Datapath supports ct_orig_tuple6

2023-02-24T09:19:07.591Z|00028|ofproto_dpif|INFO|system@ovs-system: Datapath does not support IPv6 ND Extensions

2023-02-24T09:19:07.713Z|00029|bridge|INFO|bridge vmbr0: added interface vmbr0 on port 65534

2023-02-24T09:19:07.713Z|00030|bridge|INFO|bridge vmbr0: using datapath ID 0000b6343173d644

2023-02-24T09:19:07.713Z|00031|connmgr|INFO|vmbr0: added service controller "punix:/var/run/openvswitch/vmbr0.mgmt"

2023-02-24T09:19:07.838Z|00032|bridge|INFO|bridge vmbr0: added interface ens1f1 on port 1

2023-02-24T09:19:07.839Z|00033|bridge|INFO|bridge vmbr0: added interface ens1f0 on port 2

2023-02-24T09:19:07.843Z|00034|bridge|INFO|bridge vmbr0: using datapath ID 00003cfdfeab18e4

2023-02-24T09:19:07.843Z|00035|bond|INFO|member ens1f0: enabled

2023-02-24T09:19:07.845Z|00036|bond|INFO|member ens1f0: link state down

2023-02-24T09:19:07.845Z|00037|bond|INFO|member ens1f0: disabled

2023-02-24T09:19:07.856Z|00001|ofproto_dpif_xlate(handler6)|WARN|received packet on unknown port 3 on bridge vmbr0 while processing pkt_mark=0xd4,skb_priority=0x7,icmp6,in_port=3,vlan_tci=0x0000,dl_src=46:3a:2c:a4:c3:e0,dl_dst=33:33:00:00:00:16,ipv6_src=::,ipv6_dst=ff02::16,ipv6_label=0x00000,nw_tos=0,nw_ecn=0,nw_ttl=1,icmp_type=143,icmp_code=0

2023-02-24T09:19:07.943Z|00038|bridge|INFO|bridge vmbr0: added interface mgmt on port 4

2023-02-24T09:19:08.058Z|00039|bridge|INFO|bridge vmbr0: added interface mgmt4 on port 5

2023-02-24T09:19:08.160Z|00040|bridge|INFO|bridge vmbr0: added interface nfs_backup on port 6

2023-02-24T09:19:08.696Z|00001|ofproto_dpif_xlate(handler7)|WARN|received packet on unknown port 3 on bridge vmbr0 while processing icmp6,in_port=3,vlan_tci=0x0000,dl_src=46:3a:2c:a4:c3:e0,dl_dst=33:33:ff:a4:c3:e0,ipv6_src=::,ipv6_dst=ff02::1:ffa4:c3e0,ipv6_label=0x00000,nw_tos=0,nw_ecn=0,nw_ttl=255,icmp_type=135,icmp_code=0,nd_target=fe80::443a:2cff:fea4:c3e0,nd_sll=00:00:00:00:00:00,nd_tll=00:00:00:00:00:00

2023-02-24T09:19:08.952Z|00041|bond|INFO|member ens1f0: link state up

2023-02-24T09:19:08.952Z|00042|bond|INFO|member ens1f0: enabled

So a manual networking restart via systemctl works, but why doesn't it work directly after boot?
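In the ovs-vswitchd log above, the daemon is already running at 09:15:35Z (10:15 local time), but vmbr0 is only created at 09:19:07Z. A small sketch for pulling just those two kinds of events out of the log, assuming the `|`-separated format shown above; the function name `summarize_vswitchd_log` is made up for illustration:

```shell
#!/bin/sh
# Reads ovs-vswitchd log lines on stdin.
# Fields are separated by "|": timestamp|seq|module|level|message
summarize_vswitchd_log() {
    awk -F'|' '
        # Version banner logged by the bridge module on each daemon start
        $3 == "bridge" && $5 ~ /^ovs-vswitchd / { print "daemon-start", $1 }
        # Port 65534 is the bridge-local port, i.e. the bridge itself appearing
        $3 == "bridge" && $5 ~ /added interface .* on port 65534$/ { print "bridge-created", $1 }
    '
}
```

It could be run as `summarize_vswitchd_log < /var/log/openvswitch/ovs-vswitchd.log`. A `daemon-start` line with no `bridge-created` line after it would point at the bridge configuration never being applied during that boot.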
