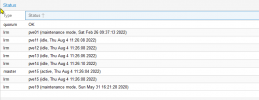

    quorum OK
    master pve15 (active, Fri Aug 5 06:48:17 2022)
    lrm pve01 (maintenance mode, Sat Feb 26 09:37:13 2022)
    lrm pve11 (idle, Fri Aug 5 06:48:17 2022)
    lrm pve12 (idle, Fri Aug 5 06:48:19 2022)
    lrm pve13 (idle, Fri Aug 5 06:48:20 2022)
    lrm pve14 (idle, Fri Aug 5 06:48:17 2022)
    lrm pve15 (idle, Fri Aug 5 06:48:17 2022)
    lrm pve19 (maintenance mode, Sun May 31 16:21:28 2020)

full cluster state:

    {
       "lrm_status" : {
          "pve01" : {
             "mode" : "maintenance",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1645886233
          },
          "pve11" : {
             "mode" : "active",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1659696497
          },
          "pve12" : {
             "mode" : "active",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1659696499
          },
          "pve13" : {
             "mode" : "active",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1659696500
          },
          "pve14" : {
             "mode" : "active",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1659696497
          },
          "pve15" : {
             "mode" : "active",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1659696497
          },
          "pve19" : {
             "mode" : "maintenance",
             "results" : {},
             "state" : "wait_for_agent_lock",
             "timestamp" : 1590956488
          }
       },
       "manager_status" : {
          "master_node" : "pve15",
          "node_status" : {
             "pve01" : "maintenance",
             "pve11" : "online",
             "pve12" : "online",
             "pve13" : "online",
             "pve14" : "online",
             "pve15" : "online",
             "pve19" : "maintenance"
          },
          "service_status" : {},
          "timestamp" : 1659696497
       },
       "quorum" : {
          "node" : "pve15",
          "quorate" : "1"
       }
    }

cat /etc/pve/ha/manager_status

{"timestamp":1659696527,"master_node":"pve15","service_status":{},"node_status":{"pve11":"online","pve15":"online","pve14":"online","pve12":"online","pve13":"online","pve19":"maintenance","pve01":"maintenance"}}

cat /etc/pve/nodes/pve19/lrm_status

{"mode":"maintenance","timestamp":1590956488,"state":"wait_for_agent_lock","results":{}}

cat /etc/pve/nodes/pve01/lrm_status

{"mode":"maintenance","timestamp":1645886233,"results":{},"state":"wait_for_agent_lock"}
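For anyone who wants to read these `lrm_status` files without decoding the epoch timestamps by hand, here is a minimal Python sketch. It is only an illustration: the two JSON strings are copied from the `cat` output above, while the names `LRM_STATUS`, `summarize` and `nodes_in_maintenance` are made up for this example and are not part of any Proxmox tool. Times are printed in UTC, so they differ from the local-time values that the status listing shows.

```python
import json
from datetime import datetime, timezone

# Contents of /etc/pve/nodes/<node>/lrm_status, copied from the output above.
# On a real node you would read the file instead of hard-coding the string.
LRM_STATUS = {
    "pve19": '{"mode":"maintenance","timestamp":1590956488,'
             '"state":"wait_for_agent_lock","results":{}}',
    "pve01": '{"mode":"maintenance","timestamp":1645886233,'
             '"results":{},"state":"wait_for_agent_lock"}',
}


def summarize(raw):
    """Return (mode, state, last update in UTC) for one lrm_status JSON string."""
    status = json.loads(raw)
    when = datetime.fromtimestamp(status["timestamp"], tz=timezone.utc)
    return status["mode"], status["state"], when.strftime("%Y-%m-%d %H:%M:%S UTC")


def nodes_in_maintenance(statuses):
    """Return the sorted names of nodes whose LRM reports mode 'maintenance'."""
    return sorted(
        node for node, raw in statuses.items()
        if json.loads(raw)["mode"] == "maintenance"
    )


if __name__ == "__main__":
    for node in sorted(LRM_STATUS):
        mode, state, when = summarize(LRM_STATUS[node])
        print(f"{node}: mode={mode} state={state} last update={when}")
    print("in maintenance:", ", ".join(nodes_in_maintenance(LRM_STATUS)))
```

Run against the two files above, this reports both pve01 and pve19 as being in maintenance mode, with last updates from February 2022 and May 2020 respectively, which matches what `manager_status` lists for those nodes.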