We are testing the PVE5.2 HA feature in a lab setup. Has anyone successfully set this up?

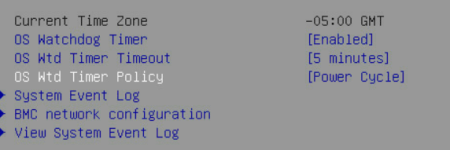

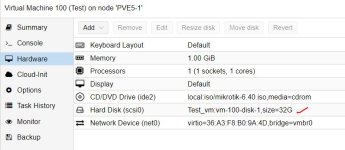

Earlier we were using PVE3.4 with SNMP fencing, but we understand that PVE5.2 does not support external fencing, so we are trying to test with a hardware watchdog. I couldn't find any clear documentation on how to set this up.

Please help me: how do I configure watchdog fencing?

1. As in PVE3.4, do we need to edit the cluster config file to add the watchdog fencing details?

2. If not, how do we configure/load the watchdog?

3. Which watchdog action should I set, power off or reboot? I think we should set the action to power off so that the fenced node does not rejoin the cluster.

4. What is the recommended timeout to set in the watchdog before it performs the above action?

5. If a hardware watchdog is not available, how do we set the fence time / reset time in softdog?

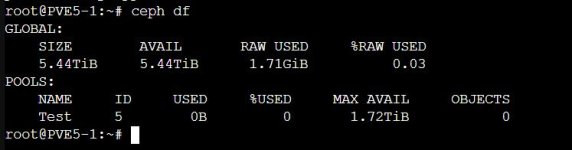

6. Where can I set the fence wait time? With SNMP fencing, we saw that it took around 30 seconds to block the Ceph port.

7. Why do we have to enable "Power ON" in the advanced power management settings of the board BIOS?
