Dear Support,

When I open a storage selection (e.g. in the Clone VM or Create VM dialog), the storage list is very slow to display.

Sometimes it manages to show the storage, but more often it times out.

Apart from that there is no performance problem: all VMs start and run perfectly.

Slow and timing out.

I checked the output in the Task Viewer for the Clone VM task:

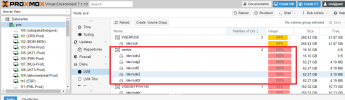

Code:

INFO: starting new backup job: vzdump 109 --node pve --storage NFSONNAS --mode stop --remove 0 --compress lzo

INFO: Starting Backup of VM 109 (qemu)

INFO: Backup started at 2022-04-10 19:00:15

INFO: status = stopped

INFO: backup mode: stop

INFO: ionice priority: 7

INFO: VM Name: dokumenlokal-Prod

INFO: include disk 'sata0' 'LVM_DOKUMENLOKAL:vm-109-disk-0' 32G

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

INFO: pending configuration changes found (not included into backup)

INFO: creating vzdump archive '/mnt/pve/NFSONNAS/dump/vzdump-qemu-109-2022_04_10-19_00_15.vma.lzo'

INFO: starting kvm to execute backup task

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

INFO: started backup task '3eca11d0-602c-4fe1-bbba-d597800d84a5'

INFO: 3% (1.2 GiB of 32.0 GiB) in 3s, read: 416.9 MiB/s, write: 101.0 MiB/s

INFO: 8% (2.7 GiB of 32.0 GiB) in 6s, read: 488.5 MiB/s, write: 6.1 MiB/s

INFO: 10% (3.4 GiB of 32.0 GiB) in 9s, read: 241.4 MiB/s, write: 119.3 MiB/s

INFO: 12% (3.9 GiB of 32.0 GiB) in 12s, read: 190.9 MiB/s, write: 165.5 MiB/s

INFO: 13% (4.4 GiB of 32.0 GiB) in 15s, read: 168.0 MiB/s, write: 166.5 MiB/s

INFO: 15% (4.9 GiB of 32.0 GiB) in 18s, read: 164.3 MiB/s, write: 164.3 MiB/s

INFO: 19% (6.2 GiB of 32.0 GiB) in 21s, read: 429.8 MiB/s, write: 45.7 MiB/s

INFO: 24% (7.8 GiB of 32.0 GiB) in 24s, read: 547.7 MiB/s, write: 0 B/s

INFO: 30% (9.6 GiB of 32.0 GiB) in 27s, read: 631.0 MiB/s, write: 0 B/s

INFO: 33% (10.6 GiB of 32.0 GiB) in 30s, read: 335.8 MiB/s, write: 106.1 MiB/s

INFO: 34% (11.0 GiB of 32.0 GiB) in 45s, read: 30.4 MiB/s, write: 29.3 MiB/s

INFO: 35% (11.4 GiB of 32.0 GiB) in 48s, read: 119.6 MiB/s, write: 118.9 MiB/s

INFO: 36% (11.8 GiB of 32.0 GiB) in 51s, read: 129.3 MiB/s, write: 129.3 MiB/s

INFO: 37% (11.9 GiB of 32.0 GiB) in 54s, read: 50.8 MiB/s, write: 50.8 MiB/s

INFO: 39% (12.6 GiB of 32.0 GiB) in 57s, read: 228.3 MiB/s, write: 49.8 MiB/s

INFO: 44% (14.2 GiB of 32.0 GiB) in 1m, read: 537.0 MiB/s, write: 0 B/s

INFO: 48% (15.6 GiB of 32.0 GiB) in 1m 3s, read: 492.7 MiB/s, write: 0 B/s

INFO: 54% (17.4 GiB of 32.0 GiB) in 1m 6s, read: 608.0 MiB/s, write: 0 B/s

INFO: 56% (18.0 GiB of 32.0 GiB) in 1m 9s, read: 211.2 MiB/s, write: 158.9 MiB/s

INFO: 58% (18.6 GiB of 32.0 GiB) in 1m 12s, read: 192.1 MiB/s, write: 192.1 MiB/s

INFO: 59% (19.1 GiB of 32.0 GiB) in 1m 15s, read: 179.0 MiB/s, write: 143.8 MiB/s

INFO: 64% (20.6 GiB of 32.0 GiB) in 1m 18s, read: 516.3 MiB/s, write: 0 B/s

INFO: 68% (22.1 GiB of 32.0 GiB) in 1m 21s, read: 505.3 MiB/s, write: 0 B/s

INFO: 73% (23.6 GiB of 32.0 GiB) in 1m 24s, read: 521.0 MiB/s, write: 0 B/s

INFO: 77% (24.9 GiB of 32.0 GiB) in 1m 27s, read: 442.4 MiB/s, write: 48.8 MiB/s

INFO: 79% (25.5 GiB of 32.0 GiB) in 1m 30s, read: 190.9 MiB/s, write: 184.5 MiB/s

INFO: 81% (26.0 GiB of 32.0 GiB) in 1m 33s, read: 172.8 MiB/s, write: 172.7 MiB/s

INFO: 82% (26.5 GiB of 32.0 GiB) in 1m 46s, read: 41.2 MiB/s, write: 18.6 MiB/s

INFO: 87% (27.9 GiB of 32.0 GiB) in 1m 49s, read: 493.7 MiB/s, write: 0 B/s

INFO: 92% (29.6 GiB of 32.0 GiB) in 1m 52s, read: 568.0 MiB/s, write: 0 B/s

INFO: 97% (31.4 GiB of 32.0 GiB) in 1m 55s, read: 597.7 MiB/s, write: 0 B/s

INFO: 100% (32.0 GiB of 32.0 GiB) in 1m 57s, read: 332.5 MiB/s, write: 0 B/s

INFO: backup is sparse: 25.11 GiB (78%) total zero data

INFO: transferred 32.00 GiB in 117 seconds (280.1 MiB/s)

INFO: stopping kvm after backup task

WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.

Fix duplicate VG names with vgrename uuid, a device filter, or system IDs.

WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.

INFO: archive file size: 3.92GB

INFO: Finished Backup of VM 109 (00:03:02)

INFO: Backup finished at 2022-04-10 19:03:17

INFO: Backup job finished successfully

TASK OK

I don't remember when the VG 'centos' was added.

I'm sure none of the VMs use a 'centos' VG.

I don't know whether the slow storage display is related to this 'centos' VG.
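The LVM warnings in the log repeat the same few messages at every scan. As a reading aid, here is a minimal Python sketch (the function names and the `SAMPLE` excerpt are hypothetical helpers, not part of Proxmox VE or LVM) that collapses them into one summary: which VG UUIDs share the name `centos`, and on which devices the duplicated PV was seen. It only parses text; it does not query LVM and does not show whether these warnings cause the slow storage listing.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Regexes for the three LVM warning formats that appear in the task log.
DUP_VG = re.compile(r"WARNING: VG name (\S+) is used by VGs (\S+) and (\S+?)\.$")
NOT_USING = re.compile(r"WARNING: Not using device (\S+) for PV (\S+?)\.$")
PREFERS = re.compile(r"WARNING: PV (\S+) prefers device (\S+) because")

# Excerpt copied from the task log: one copy of each distinct warning.
SAMPLE = """\
WARNING: VG name centos is used by VGs c58zAh-303M-eXjh-MXFZ-MdWc-osha-6DfHpc and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.
WARNING: VG name centos is used by VGs ast6HD-G8kN-PNXB-qf2V-8Zle-X7qW-4JLgwO and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.
WARNING: VG name centos is used by VGs bsUPc2-oSdi-zmMg-hlyN-wLpE-ReXl-p7b8Tn and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.
WARNING: VG name centos is used by VGs 0d9sxJ-F0uC-JOcY-q5yC-SqRb-fq8M-8P3epp and ozs095-LgHW-0urh-iEmQ-R6pj-BMVQ-Dhhxot.
WARNING: Not using device /dev/sdo2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.
WARNING: Not using device /dev/sdp2 for PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB.
WARNING: PV 7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB prefers device /dev/sdn2 because device was seen first.
"""


def summarize(lines: Iterable[str]) -> Tuple[Dict[str, Set[str]], Dict[str, Set[str]]]:
    """Return (VG name -> VG UUIDs sharing it, PV UUID -> devices it was seen on)."""
    vg_uuids: Dict[str, Set[str]] = defaultdict(set)
    pv_devices: Dict[str, Set[str]] = defaultdict(set)
    for raw in lines:
        line = raw.strip()
        if m := DUP_VG.match(line):
            name, first, second = m.groups()
            vg_uuids[name].update((first, second))
        elif m := NOT_USING.match(line):
            device, pv = m.groups()
            pv_devices[pv].add(device)  # a device LVM ignored for this PV
        elif m := PREFERS.match(line):
            pv, device = m.groups()
            pv_devices[pv].add(device)  # the device LVM chose for this PV
    # Repeated warnings collapse automatically because sets ignore duplicates.
    return vg_uuids, pv_devices


def print_summary(vg_uuids: Dict[str, Set[str]], pv_devices: Dict[str, Set[str]]) -> None:
    """Print one block per duplicated VG name and one line per duplicated PV."""
    for name, uuids in sorted(vg_uuids.items()):
        print(f"VG name {name!r} is shared by {len(uuids)} VGs:")
        for uuid in sorted(uuids):
            print(f"    {uuid}")
    for pv, devices in sorted(pv_devices.items()):
        print(f"PV {pv} is visible on {len(devices)} devices: {', '.join(sorted(devices))}")


if __name__ == "__main__":
    # Runs on the excerpt above; a saved full log can be passed as an open file instead.
    print_summary(*summarize(SAMPLE.splitlines()))
```

For the excerpt, the summary lists five VG UUIDs sharing the name `centos`, and PV `7gOuyw-ZxcU-02A3-r39M-fDoy-66uT-787enB` seen on `/dev/sdn2`, `/dev/sdo2` and `/dev/sdp2`. To summarize a saved copy of the full task log, pass the open file object to `summarize()` instead of `SAMPLE.splitlines()`.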

What do I need to check, and what should I do?

Thank you