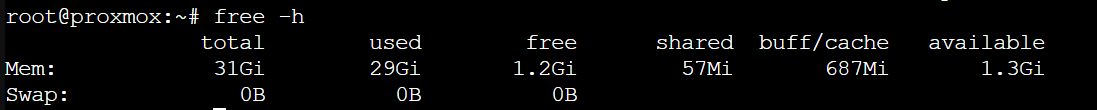

Hey, I have a home server running Proxmox Virtual Environment 8.3.2 with a few containers and VMs. I have noticed from the node summary that I'm running out of memory (29/32 GB used).

However, I can't identify why, as my VMs are consuming just a few gigs and the ZFS ARC cache is at 4 gigs.
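The ARC figure can be cross-checked on the node itself. This is only a minimal sketch, assuming the usual OpenZFS-on-Linux statistics file at /proc/spl/kstat/zfs/arcstats; the helper name `arc_size_gib` is made up for illustration and is not a Proxmox tool.

```shell
# Hypothetical helper: print the current ZFS ARC size in GiB.
# Assumes the arcstats file has "name type data" rows, with the
# ARC size in bytes on the row whose first field is exactly "size".
arc_size_gib() {
  # Optional first argument overrides the stats file (useful for testing).
  awk '$1 == "size" { printf "%.1f\n", $3 / (1024 * 1024 * 1024) }' \
    "${1:-/proc/spl/kstat/zfs/arcstats}"
}
```

Calling `arc_size_gib` with no argument on the node should print one number, which can then be compared with the memory usage in the node summary.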

Running

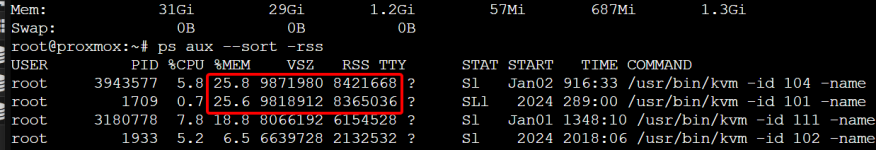

on my node console show VM 104 and 101 are using 25% -> 8GB

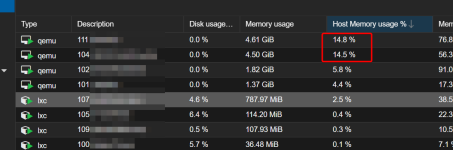

The VMs summary show something different though.

sceen from VM101 free -h

Where is the issue then?

Though I cant identify why as my VMs are consuming just a few gigs, ZFS arc chache is on 4 gigs.

Running

Code:

ps aux --sort -rss

The VMs summary show something different though.

sceen from VM101 free -h

Where is the issue then?