# PVE host crashes with NMI on guest reboot: NVIDIA H100 PCIe GPU passthrough

Hello! This Is my first post in this forum, i hope it it satisfies the quality and level of specificity required for this place.

Unfortunately, i couldn't find a set of guidelines of what should / must be included in a post.

## Situation

A single VM was created, it has the PCIE Device Mapped properly. The Vendor, Subvendor, Device and Subdevice IDs have been set Manually to the original Device values.

### PVE Host

Machine Name: ThinkSystem SR665 V3

CPU: AMD EPYC 9124

RAM: 128G DDR5

GPU: NVIDIA H100 VBIOS Version: 96.00.30.00.01

NIC: Intel(R) E810-DA2

OS: PVE8.1.4 / kernel: 6.5.13

The Nessecary steps for PCIE / GPU Passthroughs have been taking, including:

Additionally, the Following Grub parameters have been set:

Every available Firmware upgrade was applied, an upgrade for the H100 should be available, but it must be obtained through a ticket with NVIDIA, which is still pending.

### Guest VM

The Guest VM Is running Ubuntu 22.04 with Docker 24, and Nvidia Driver 535.154.05

It has 100G RAM and 16 CPU Cores of the native CPU Type.

The Card is not selected as primary GPU, because it is not set up to display any video output.

Setting the Device and Vendor IDs manually has been attempted, but did not result in any change.

## Problem

On reboot of the Guest (or shutdown + normal boot), the PVE Host crashes with a NMI error. There are AER errors in the console even when the card appears to be working (before the crash, but the error code is an unspecific Hardware error

## Logs

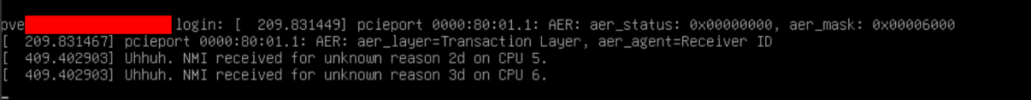

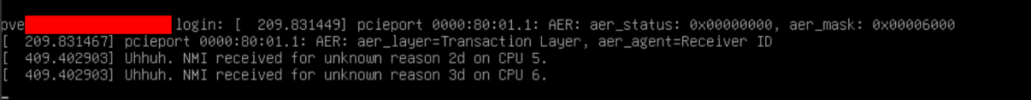

PVE Host dmesg: AER errors like these appear on first startup and sometimes while the guest is in operation. These do not lead to a crash.

Guest dmesg: The Driver initially says the card is unsupported, but after a couple of minutes, it starts working

pveversion:

Any Help on the Issue would be greatly appreciated. I would not be surprised if it was just a small oversight on my end.

Thanks!

Hello! This is my first post in this forum; I hope it meets the quality and level of detail expected here.

Unfortunately, I couldn't find any guidelines on what should or must be included in a post.

## Situation

A single VM was created, and the PCIe device is properly mapped to it. The vendor, subvendor, device, and subdevice IDs have been set manually to the original device values.

### PVE Host

- Machine name: ThinkSystem SR665 V3
- CPU: AMD EPYC 9124
- RAM: 128 GB DDR5
- GPU: NVIDIA H100 (VBIOS version 96.00.30.00.01)
- NIC: Intel(R) E810-DA2
- OS: PVE 8.1.4, kernel 6.5.13

The necessary steps for PCIe/GPU passthrough have been taken, including:

- Enable the kernel modules:

  ```
  root@pve:~# cat /etc/modules
  # /etc/modules: kernel modules to load at boot time.
  #
  # This file contains the names of kernel modules that should be loaded
  # at boot time, one per line. Lines beginning with "#" are ignored.
  # Parameters can be specified after the module name.

  vfio
  vfio_iommu_type1
  vfio_pci
  vfio_virqfd
  root@pve:~# lsmod | grep vfio
  vfio_pci               16384  1
  vfio_pci_core          86016  1 vfio_pci
  irqbypass              12288  10 vfio_pci_core,kvm
  vfio_iommu_type1       49152  1
  vfio                   57344  7 vfio_pci_core,vfio_iommu_type1,vfio_pci
  iommufd                77824  1 vfio
  ```

- Ensure the IOMMU is enabled:

  ```
  root@pve:~# dmesg | grep -e DMAR -e IOMMU
  [ 1.716312] pci 0000:c0:00.2: AMD-Vi: IOMMU performance counters supported
  [ 1.719147] pci 0000:80:00.2: AMD-Vi: IOMMU performance counters supported
  [ 1.721295] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported
  [ 1.725334] pci 0000:40:00.2: AMD-Vi: IOMMU performance counters supported
  [ 1.728167] pci 0000:c0:00.2: AMD-Vi: Found IOMMU cap 0x40
  [ 1.728179] pci 0000:80:00.2: AMD-Vi: Found IOMMU cap 0x40
  [ 1.728187] pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40
  [ 1.728195] pci 0000:40:00.2: AMD-Vi: Found IOMMU cap 0x40
  [ 1.728888] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).
  [ 1.728893] perf/amd_iommu: Detected AMD IOMMU #1 (2 banks, 4 counters/bank).
  [ 1.728897] perf/amd_iommu: Detected AMD IOMMU #2 (2 banks, 4 counters/bank).
  [ 1.728902] perf/amd_iommu: Detected AMD IOMMU #3 (2 banks, 4 counters/bank).
  ```

- Ensure the card is in its own IOMMU group (it is the only device in group 20):

  ```
  root@pve:~# pvesh get /nodes/pve/hardware/pci --pci-class-blacklist "" | grep -P "^20"
  │ 0x030200 │ 0x2331 │ 0000:81:00.0 │ 20 │ 0x10de │ GH100 [H100 PCIe] │ │ 0x1626 │ │ 0x10de │ NVIDIA Corporation │ NVIDIA Corporation
  ```

- Blacklist the drivers:

  ```
  root@pve:~# cat /etc/modprobe.d/blacklist.conf
  blacklist nouveau
  blacklist nvidia*
  ```
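The IOMMU-group check from the list above can also be done directly against sysfs, independent of `pvesh`. Below is a minimal bash sketch, assuming the standard kernel layout `/sys/kernel/iommu_groups/<group>/devices/<pci-address>` and GNU `sort`; the function name `list_iommu_groups` is hypothetical, and the optional directory argument exists only so the function can be pointed at a fake tree for testing.

```bash
#!/usr/bin/env bash
# List each IOMMU group with the PCI addresses it contains, sorted by group number.
# The root defaults to the kernel's sysfs location; any directory with the same
# layout (GROUP/devices/ADDRESS) can be passed instead, e.g. for testing.
list_iommu_groups() {
    local root=${1:-/sys/kernel/iommu_groups}
    local group dev
    for group in "$root"/*; do
        [ -d "$group/devices" ] || continue     # skip non-group entries / unmatched glob
        for dev in "$group"/devices/*; do
            [ -e "$dev" ] || continue           # skip an unmatched glob
            printf 'group %s: %s\n' "${group##*/}" "${dev##*/}"
        done
    done | sort -k2,2n                          # numeric sort on the group number
}
```

On this host, `list_iommu_groups | grep -F 'group 20:'` should print only `group 20: 0000:81:00.0` if the grouping shown by `pvesh` is still current; `lspci -nns 0000:81:00.0` then identifies the device behind an address.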

Additionally, the following GRUB parameters have been set:

`GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on processor.max_cstate=0"`

Every available firmware upgrade has been applied. An upgrade for the H100 should be available, but it has to be obtained through a ticket with NVIDIA, which is still pending.
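Editing `/etc/default/grub` only takes effect after `update-grub` and a reboot, so it can be worth confirming that the GRUB parameters listed above actually reached the running kernel. A minimal bash sketch, assuming the host boots via GRUB and exposes the live command line in `/proc/cmdline`; `check_cmdline` is a hypothetical helper name, and the file argument is only there so it can be tested against a sample file.

```bash
#!/usr/bin/env bash
# Check that each expected kernel parameter appears in a kernel command line.
# Usage: check_cmdline CMDLINE_FILE PARAM...
# Returns 0 if all parameters are present, 1 if any is missing.
check_cmdline() {
    local file=$1
    shift
    local cmdline="" param missing=0
    read -r cmdline < "$file" || true   # tolerate a missing trailing newline
    for param in "$@"; do
        # Pad with spaces so a parameter only matches as a whole word.
        case " $cmdline " in
            *" $param "*) echo "present: $param" ;;
            *)            echo "missing: $param"; missing=1 ;;
        esac
    done
    return "$missing"
}

# Check the parameters from /etc/default/grub against the running kernel.
check_cmdline /proc/cmdline amd_iommu=on processor.max_cstate=0 \
    || echo "not all parameters are active (update-grub and reboot?)"
```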

### Guest VM

The guest VM is running Ubuntu 22.04 with Docker 24 and NVIDIA driver 535.154.05.

It has 100 GB of RAM and 16 CPU cores using the native CPU type.

The card is not selected as the primary GPU because it is not set up to provide any video output.

Setting the device and vendor IDs manually has been attempted, but it made no difference.
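In the `nvidia-smi -q` output, the PCI IDs are packed into single 32-bit values (`Device Id : 0x233110DE`, `Sub System Id : 0x162610DE`): the upper 16 bits are the (subsystem) device ID and the lower 16 bits the (subsystem) vendor ID. A small bash sketch to unpack them, so they can be compared with the host's `pvesh` row and the manually set IDs; `split_pci_id` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Split a packed 32-bit PCI ID as printed by `nvidia-smi -q`:
# upper 16 bits = (subsystem) device ID, lower 16 bits = (subsystem) vendor ID.
split_pci_id() {
    local label=$1
    local packed=$(( $2 ))
    printf '%s: vendor=0x%04x id=0x%04x\n' "$label" \
        $(( packed & 0xFFFF )) $(( (packed >> 16) & 0xFFFF ))
}

split_pci_id "Device Id" 0x233110DE        # values taken from the guest's nvidia-smi output
split_pci_id "Sub System Id" 0x162610DE
```

This yields vendor `0x10de` with device `0x2331`, and subsystem vendor `0x10de` with subsystem device `0x1626`, which are the same values the host's `pvesh` listing shows for `0000:81:00.0`.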

Guest `nvidia-smi -q` output (truncated):

```
==============NVSMI LOG==============

Timestamp                                 : Mon Mar 18 15:59:26 2024
Driver Version                            : 535.154.05
CUDA Version                              : 12.2

Attached GPUs                             : 1
GPU 00000000:01:00.0
    Product Name                          : NVIDIA H100 PCIe
    Product Brand                         : NVIDIA
    Product Architecture                  : Hopper
    Display Mode                          : Enabled
    Display Active                        : Disabled
    Persistence Mode                      : Enabled
    Addressing Mode                       : None
    MIG Mode
        Current                           : Disabled
        Pending                           : Disabled
    Accounting Mode                       : Disabled
    Accounting Mode Buffer Size           : 4000
    Driver Model
        Current                           : N/A
        Pending                           : N/A
    Serial Number                         : x
    GPU UUID                              : x
    Minor Number                          : 0
    VBIOS Version                         : 96.00.30.00.01
    MultiGPU Board                        : No
    Board ID                              : 0x100
    Board Part Number                     : x
    GPU Part Number                       : x
    FRU Part Number                       : N/A
    Module ID                             : 4
    Inforom Version
        Image Version                     : 1010.0200.00.02
        OEM Object                        : 2.1
        ECC Object                        : 7.16
        Power Management Object           : N/A
    Inforom BBX Object Flush
        Latest Timestamp                  : N/A
        Latest Duration                   : N/A
    GPU Operation Mode
        Current                           : N/A
        Pending                           : N/A
    GSP Firmware Version                  : 535.154.05
    GPU Virtualization Mode
        Virtualization Mode               : Pass-Through
        Host VGPU Mode                    : N/A
    GPU Reset Status
        Reset Required                    : No
        Drain and Reset Recommended       : No
    IBMNPU
        Relaxed Ordering Mode             : N/A
    PCI
        Bus                               : 0x01
        Device                            : 0x00
        Domain                            : 0x0000
        Device Id                         : 0x233110DE
        Bus Id                            : 00000000:01:00.0
        Sub System Id                     : 0x162610DE
        GPU Link Info
            PCIe Generation
                Max                       : 5
                Current                   : 5
                Device Current            : 5
                Device Max                : 5
                Host Max                  : N/A
            Link Width
                Max                       : 16x
                Current                   : 16x
        Bridge Chip
            Type                          : N/A
            Firmware                      : N/A
        Replays Since Reset               : 0
        Replay Number Rollovers           : 0
        Tx Throughput                     : 925 KB/s
        Rx Throughput                     : 781 KB/s
        Atomic Caps Inbound               : N/A
        Atomic Caps Outbound              : N/A
    Fan Speed                             : N/A
    Performance State                     : P0
    Clocks Event Reasons
        Idle                              : Active
        Applications Clocks Setting       : Not Active
        SW Power Cap                      : Not Active
        HW Slowdown                       : Not Active
            HW Thermal Slowdown           : Not Active
            HW Power Brake Slowdown       : Not Active
        Sync Boost                        : Not Active
        SW Thermal Slowdown               : Not Active
        Display Clock Setting             : Not Active
    FB Memory Usage
        Total                             : 81559 MiB
        Reserved                          : 551 MiB
        Used                              : 0 MiB
        Free                              : 81007 MiB
    BAR1 Memory Usage
        Total                             : 131072 MiB
        Used                              : 1 MiB
        Free                              : 131071 MiB
    Conf Compute Protected Memory Usage
        Total                             : 0 MiB
        Used                              : 0 MiB
        Free                              : 0 MiB
    Compute Mode                          : Default
[...]
```

## Problem

When the guest is rebooted (or shut down and started again), the PVE host crashes with an NMI error. AER errors already appear in the host console while the card still seems to be working (i.e. before the crash), but the reported error is only an unspecific hardware error.
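The corrected-error records from the root port `0000:80:01.1` in the host log contain raw register values (`command: 0x0407`, `status: 0x0010`, `secondary_status: 0x2000`, bridge `control: 0x0012`, `aer_mask: 0x00006000`). As a reading aid, here is a minimal bash sketch that names the set bits. The bit names are taken from the standard PCI header, PCI-to-PCI bridge, and PCIe AER register layouts as I understand them and should be double-checked against the specification; `decode_bits` is a hypothetical helper, and `aer_mask` is decoded as the correctable-error mask because the event severity is "corrected".

```bash
#!/usr/bin/env bash
# Print the name of every bit that is set in a register value.
# Usage: decode_bits VALUE "BIT:Name" ...
decode_bits() {
    local value=$(( $1 ))
    shift
    local entry bit
    for entry in "$@"; do
        bit=${entry%%:*}                 # text before the first ':' is the bit index
        if (( value & (1 << bit) )); then
            echo "  bit ${bit}: ${entry#*:}"
        fi
    done
}

echo "command 0x0407:"                   # PCI Command register
decode_bits 0x0407 "0:I/O Space Enable" "1:Memory Space Enable" \
    "2:Bus Master Enable" "8:SERR# Enable" "10:Interrupt Disable"

echo "status 0x0010:"                    # PCI Status register
decode_bits 0x0010 "4:Capabilities List" "8:Master Data Parity Error" \
    "11:Signaled Target Abort" "12:Received Target Abort" \
    "13:Received Master Abort" "14:Signaled System Error" "15:Detected Parity Error"

echo "secondary_status 0x2000:"          # bridge Secondary Status register
decode_bits 0x2000 "8:Master Data Parity Error" "11:Signaled Target Abort" \
    "12:Received Target Abort" "13:Received Master Abort" \
    "14:Received System Error" "15:Detected Parity Error"

echo "bridge control 0x0012:"            # Bridge Control register
decode_bits 0x0012 "0:Parity Error Response Enable" "1:SERR# Enable" \
    "2:ISA Enable" "3:VGA Enable" "4:VGA 16-bit Decode" "6:Secondary Bus Reset"

echo "aer_mask 0x00006000:"              # AER Correctable Error Mask register
decode_bits 0x00006000 "0:Receiver Error" "6:Bad TLP" "7:Bad DLLP" \
    "8:REPLAY_NUM Rollover" "12:Replay Timer Timeout" \
    "13:Advisory Non-Fatal Error" "14:Corrected Internal Error" "15:Header Log Overflow"
```

With these tables, `secondary_status: 0x2000` decodes to "Received Master Abort" on the bridge's secondary side (bus `0x81`, where the H100 sits), and the mask hides only Advisory Non-Fatal and Corrected Internal errors.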

## Logs

PVE host `dmesg`: AER errors like the following appear on first startup and occasionally while the guest is running. They do not lead to a crash.

```
[ +0.000008] {1}[Hardware Error]: Hardware error from APEI Generic Hardware Error Source: 512
[ +0.000007] {1}[Hardware Error]: It has been corrected by h/w and requires no further action
[ +0.000002] {1}[Hardware Error]: event severity: corrected
[ +0.000002] {1}[Hardware Error]: Error 0, type: corrected
[ +0.000001] {1}[Hardware Error]: fru_text: PcieError
[ +0.000002] {1}[Hardware Error]: section_type: PCIe error
[ +0.000000] {1}[Hardware Error]: port_type: 4, root port
[ +0.000001] {1}[Hardware Error]: version: 0.2
[ +0.000001] {1}[Hardware Error]: command: 0x0407, status: 0x0010
[ +0.000002] {1}[Hardware Error]: device_id: 0000:80:01.1
[ +0.000001] {1}[Hardware Error]: slot: 2
[ +0.000001] {1}[Hardware Error]: secondary_bus: 0x81
[ +0.000001] {1}[Hardware Error]: vendor_id: 0x1022, device_id: 0x14ab
[ +0.000001] {1}[Hardware Error]: class_code: 060400
[ +0.000002] {1}[Hardware Error]: bridge: secondary_status: 0x2000, control: 0x0012
[ +0.000014] ice 0000:41:00.0: 2500 msecs passed between update to cached PHC time
[ +0.000055] pcieport 0000:80:01.1: AER: aer_status: 0x00000000, aer_mask: 0x00006000
[ +0.000028] pcieport 0000:80:01.1: AER: aer_layer=Transaction Layer, aer_agent=Receiver ID
```

Guest `dmesg`: the driver initially reports the card as unsupported, but after a couple of minutes it starts working:

```
NVRM: installed in this system is not supported by the
NVRM: NVIDIA 535.154.05 driver release.
NVRM: Please see 'Appendix A - Supported NVIDIA GPU Products'
NVRM: in this release's README, available on the operating system
NVRM: specific graphics driver download page at www.nvidia.com.
[ +0.007817] nvidia: probe of 0000:01:00.0 failed with error -1
[ +0.000041] NVRM: The NVIDIA probe routine failed for 1 device(s).
[ +0.000002] NVRM: None of the NVIDIA devices were initialized.
[ +0.000303] nvidia-nvlink: Unregistered Nvlink Core, major device number 235
[ +0.181452] nvidia-nvlink: Nvlink Core is being initialized, major device number 235
[ +0.000005] NVRM: The NVIDIA GPU 0000:01:00.0 (PCI ID: 10de:2331)
NVRM: installed in this system is not supported by the
NVRM: NVIDIA 535.154.05 driver release.
NVRM: Please see 'Appendix A - Supported NVIDIA GPU Products'
NVRM: in this release's README, available on the operating system
NVRM: specific graphics driver download page at www.nvidia.com.
[ +0.006175] nvidia: probe of 0000:01:00.0 failed with error -1
[ +0.000041] NVRM: The NVIDIA probe routine failed for 1 device(s).
[ +0.000001] NVRM: None of the NVIDIA devices were initialized.
[ +0.000287] nvidia-nvlink: Unregistered Nvlink Core, major device number 235
[ +0.173524] nvidia-nvlink: Nvlink Core is being initialized, major device number 235
[ +0.000005] NVRM: The NVIDIA GPU 0000:01:00.0 (PCI ID: 10de:2331)
NVRM: installed in this system is not supported by the
NVRM: NVIDIA 535.154.05 driver release.
NVRM: Please see 'Appendix A - Supported NVIDIA GPU Products'
NVRM: in this release's README, available on the operating system
NVRM: specific graphics driver download page at www.nvidia.com.
[ +0.006134] nvidia: probe of 0000:01:00.0 failed with error -1
[ +0.000038] NVRM: The NVIDIA probe routine failed for 1 device(s).
[ +0.000001] NVRM: None of the NVIDIA devices were initialized.
[ +0.000272] nvidia-nvlink: Unregistered Nvlink Core, major device number 235
[ +0.176418] nvidia-nvlink: Nvlink Core is being initialized, major device number 235
[ +2.359117] nvidia 0000:01:00.0: enabling device (0000 -> 0002)
[ +0.011114] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 535.154.05 Thu Dec 28 15:37:48 UTC 2023
[ +0.004135] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 535.154.05 Thu Dec 28 15:51:29 UTC 2023
[ +0.004197] [drm] [nvidia-drm] [GPU ID 0x00000100] Loading driver
[ +3.335324] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:01:00.0 on minor 1
[ +0.004855] nvidia_uvm: module uses symbols from proprietary module nvidia, inheriting taint.
[ +0.002424] nvidia-uvm: Loaded the UVM driver, major device number 511.
[ +0.006168] [drm] [nvidia-drm] [GPU ID 0x00000100] Unloading driver
[ +0.025928] nvidia-uvm: Unloaded the UVM driver.
[ +0.046850] [drm] [nvidia-drm] [GPU ID 0x00000100] Loading driver
[ +0.004050] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:01:00.0 on minor 1
[ +0.002462] nvidia_uvm: module uses symbols from proprietary module nvidia, inheriting taint.
[ +0.002443] nvidia-uvm: Loaded the UVM driver, major device number 511.
[ +0.006312] [drm] [nvidia-drm] [GPU ID 0x00000100] Unloading driver
[ +0.023896] nvidia-modeset: Unloading
[ +0.049933] nvidia-uvm: Unloaded the UVM driver.
[ +0.029724] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 535.154.05 Thu Dec 28 15:51:29 UTC 2023
[ +0.002803] [drm] [nvidia-drm] [GPU ID 0x00000100] Loading driver
[ +0.004434] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:01:00.0 on minor 1
[ +0.002363] nvidia_uvm: module uses symbols from proprietary module nvidia, inheriting taint.
[ +0.002414] nvidia-uvm: Loaded the UVM driver, major device number 511.
[ +0.005759] [drm] [nvidia-drm] [GPU ID 0x00000100] Unloading driver
[ +0.026558] nvidia-modeset: Unloading
[ +0.030146] nvidia-uvm: Unloaded the UVM driver.
```

pveversion:

```
root@pve:~# pveversion -v
proxmox-ve: 8.1.0 (running kernel: 6.5.13-1-pve)
pve-manager: 8.1.4 (running version: 8.1.4/ec5affc9e41f1d79)
proxmox-kernel-helper: 8.1.0
proxmox-kernel-6.5.13-1-pve-signed: 6.5.13-1
proxmox-kernel-6.5: 6.5.13-1
proxmox-kernel-6.5.11-4-pve-signed: 6.5.11-4
ceph-fuse: 17.2.7-pve1
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx8
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-4
libknet1: 1.28-pve1
libproxmox-acme-perl: 1.5.0
libproxmox-backup-qemu0: 1.4.1
libproxmox-rs-perl: 0.3.3
libpve-access-control: 8.1.2
libpve-apiclient-perl: 3.3.1
libpve-common-perl: 8.1.1
libpve-guest-common-perl: 5.0.6
libpve-http-server-perl: 5.0.5
libpve-network-perl: 0.9.5
libpve-rs-perl: 0.8.8
libpve-storage-perl: 8.1.0
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 5.0.2-4
lxcfs: 5.0.3-pve4
novnc-pve: 1.4.0-3
proxmox-backup-client: 3.1.4-1
proxmox-backup-file-restore: 3.1.4-1
proxmox-kernel-helper: 8.1.0
proxmox-mail-forward: 0.2.3
proxmox-mini-journalreader: 1.4.0
proxmox-offline-mirror-helper: 0.6.5
proxmox-widget-toolkit: 4.1.4
pve-cluster: 8.0.5
pve-container: 5.0.8
pve-docs: 8.1.4
pve-edk2-firmware: 4.2023.08-4
pve-firewall: 5.0.3
pve-firmware: 3.9-2
pve-ha-manager: 4.0.3
pve-i18n: 3.2.1
pve-qemu-kvm: 8.1.5-3
pve-xtermjs: 5.3.0-3
qemu-server: 8.0.10
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.2.2-pve2
```

Any help with this issue would be greatly appreciated. I would not be surprised if it turned out to be a small oversight on my end.

Thanks!
