Hey guys,

With the help of this forum and some googling, I was able to get rid of all my issues with my Proxmox HA cluster over Thunderbolt 4, as well as an NTP issue that held me back from enabling Ceph properly. I'm now working with my new cluster.

After setting up my first LXC/VM, I disabled the network port for PVE02 on my switch to see how HA works on Proxmox. I only have a little experience with how long this takes on VMware, so this is pretty new stuff for me.

My HA failover (moving an LXC to another node after it turned red, i.e. not available in the web GUI) took about 5 minutes. Is this normal, or is there a way to speed this up? A normal test via right-click > Migrate to another node finished in a few seconds without any loss of ping during the process.

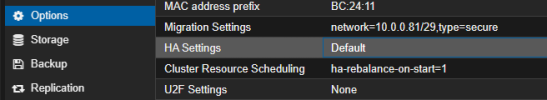

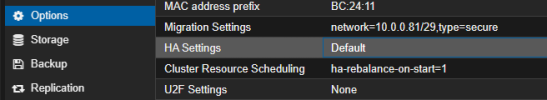

My setup is as follows: the network for Ceph (public + cluster) and for migration is the Thunderbolt 4 network (10.0.0.81/29).

I also tried "type=insecure" for migration, but it doesn't make any difference.

The default NIC over Ethernet is used for vmbr0, which provides access to my containers.

After thinking through my HA failover test 4-5 times: the Thunderbolt cable connection wasn't turned off while the switch port for vmbr0 was, so maybe Proxmox wasn't really sure whether the node was lost or not, because the Ceph connection (public/cluster/migration network) was still there.

I guess someone here can clear up whether this is working as intended. On Monday I can "pull" a power cable for a real test if needed.
