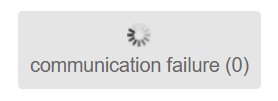

It seems like it could be a network problem. However, this is the only part of the Proxmox UI that has caused me any issues so far. I have been able to upload a number of ISO images from my workstation to the host with no (noticeable?) issues. As I was writing this, I uploaded the Proxmox Datacenter Manager ISO to the host; according to the status, it took 11.3s to upload 1.22GB of data.
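For scale, here is a minimal sketch of the arithmetic behind that upload figure. It assumes decimal units (1 GB = 1000 MB), which may not match what the UI reports, and the function name `upload_rate` is only illustrative.

```shell
# Average throughput for the ISO upload reported in the status line.
# Assumption: decimal units (1 GB = 1000 MB); the UI may actually mean GiB.
upload_rate() {
    # $1 = size in GB, $2 = duration in seconds
    awk -v gb="$1" -v s="$2" 'BEGIN {
        printf "%.1f MB/s, %.2f Gbit/s\n", gb * 1000 / s, gb * 8 / s
    }'
}

upload_rate 1.22 11.3
```

Under that assumption the upload averaged a little under 1 Gbit/s, so it probably says little about how the path behaves at higher rates or with larger frames.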

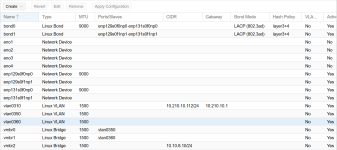

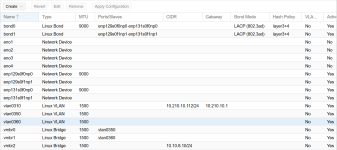

There is a VM deployed with a network device connected to vmbr0 (part of bond1). It accesses the Proxmox API via a second network device connected to vmbr2 (vmbr2 has no external connectivity). The VM runs a web app accessed via vmbr0; the end user accessing the web app has (up until this point) reported no issues.

More than willing to try things to figure out what is going on.

Information (some details have been modified to protect the innocent):

Proxmox:

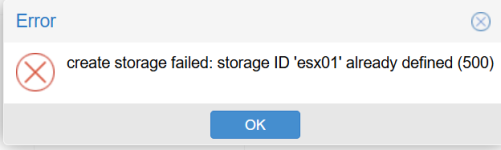

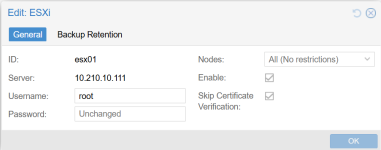

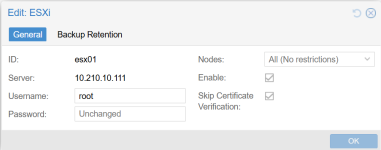

ESXi storage configuration

IP address configuration:

NOTE: I connect to https://10.210.10.112:8006 to access the management webUI on pve03

pve03 Interface state:

NOTE: bond0 is down

Code:

root@pve03:/usr/libexec/pve-esxi-import-tools# ethtool bond0 | grep -P "Speed|Link"

Speed: Unknown!

Link detected: no

root@pve03:/usr/libexec/pve-esxi-import-tools# ethtool bond1 | grep -P "Speed|Link"

Speed: 20000Mb/s

Link detected: yes
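To compare both bonds at a glance, the two `ethtool` calls above could be condensed with a small helper. This is only a sketch: `ethtool_summary` is a hypothetical name, and it assumes the `Speed:` and `Link detected:` lines appear as in the output shown here.

```shell
# Summarize `ethtool <iface>` output read from stdin as "<speed> <link>".
# Assumes the "Speed:" and "Link detected:" lines look like those above.
ethtool_summary() {
    awk -F': *' '
        /Speed:/         { speed = $2 }
        /Link detected:/ { link = $2 }
        END {
            if (speed == "") speed = "n/a"
            if (link == "")  link = "n/a"
            print speed, link
        }
    '
}
```

Usage on the host would be something like `for i in bond0 bond1; do printf '%s: ' "$i"; ethtool "$i" | ethtool_summary; done`.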

esx01 host:

NOTES:

I can reach the ESXi webUI (not vCenter) via https://10.210.10.111

vmnic0 is connected to a switch, but no traffic is using the interface

Code:

[root@esx01:~] esxcli network ip interface ipv4 get

Name  IPv4 Address   IPv4 Netmask   IPv4 Broadcast  Address Type  Gateway      DHCP DNS

----  -------------  -------------  --------------  ------------  -----------  --------

vmk0  10.210.10.111  255.255.255.0  10.210.10.255   STATIC        10.210.10.1  false

vmk1  10.249.0.111   255.255.255.0  10.249.0.255    STATIC        10.210.10.1  false

vmk2  10.249.2.111   255.255.255.0  10.249.2.255    STATIC        10.210.10.1  false

[root@esx01:~] esxcli network nic list

Name PCI Device Driver Admin Status Link Status Speed Duplex MAC Address MTU Description

------ ------------ ------ ------------ ----------- ----- ------ ----------------- ---- -----------

vmnic0 0000:01:00.0 ntg3 Up Up 1000 Full 80:18:44:e7:7a:64 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic1 0000:01:00.1 ntg3 Up Down 0 Half 80:18:44:e7:7a:65 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic2 0000:02:00.0 ntg3 Up Down 0 Half 80:18:44:e7:7a:66 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic3 0000:02:00.1 ntg3 Up Down 0 Half 80:18:44:e7:7a:67 1500 Broadcom Corporation NetXtreme BCM5720 Gigabit Ethernet

vmnic4 0000:81:00.0 i40en Up Up 10000 Full 6c:fe:54:34:b8:70 9000 Intel(R) Ethernet Controller X710 for 10GbE SFP+

vmnic5 0000:81:00.1 i40en Up Up 10000 Full 6c:fe:54:34:b8:71 1500 Intel(R) Ethernet Controller X710 for 10GbE SFP+

vmnic6 0000:83:00.0 i40en Up Up 10000 Full 6c:fe:54:34:b7:90 9000 Intel(R) Ethernet Controller X710 for 10GbE SFP+

vmnic7 0000:83:00.1 i40en Up Up 10000 Full 6c:fe:54:34:b7:91 1500 Intel(R) Ethernet Controller X710 for 10GbE SFP+

Switch Interconnects:

swt05 - server connectivity

Code:

swt05# show running-config interface ethernet 1/24

interface Ethernet1/24

description TRUNK - esx01

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

no shutdown

swt05# show running-config interface ethernet 1/26

interface Ethernet1/26

description TRUNK - Port-Channel26 - pve03

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

channel-group 26 mode active

no shutdown

swt05# show running-config interface port-channel 26

interface port-channel26

description TRUNK - pve03

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

vpc 26

swt05# show interface ethernet 1/24 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

swt05# show interface ethernet 1/26 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

swt05# show interface port-channel 26 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

swt06 - server connectivity

Code:

swt06# show running-config interface ethernet 1/24

interface Ethernet1/24

description TRUNK - esx01

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

no shutdown

swt06# show running-config interface ethernet 1/26

interface Ethernet1/26

description TRUNK - Port-Channel26 - pve03

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

channel-group 26 mode active

no shutdown

swt06# show running-config interface port-channel 26

interface port-channel26

description TRUNK - pve03

switchport

switchport mode trunk

switchport trunk allowed vlan 300,310,350,360-364

mtu 9216

vpc 26

swt06# show interface ethernet 1/24 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

swt06# show interface ethernet 1/26 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

swt06# show interface port-channel 26 | i MTU

MTU 9216 bytes, BW 10000000 Kbit , DLY 10 usec

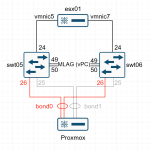

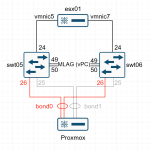

switch MLAG (vPC) connectivity

swt05

Code:

swt05# show running-config interface port-channel 1

interface port-channel1

switchport

switchport mode trunk

spanning-tree port type network

vpc peer-link

swt05# show running-config interface ethernet 1/49-50

interface Ethernet1/49

description vPC Link

switchport

switchport mode trunk

channel-group 1 mode active

no shutdown

interface Ethernet1/50

description vPC Link

switchport

switchport mode trunk

channel-group 1 mode active

no shutdown

swt05# show interface ethernet 1/49 | i MTU

MTU 9216 bytes, BW 100000000 Kbit , DLY 10 usec

swt05# show interface ethernet 1/50 | i MTU

MTU 9216 bytes, BW 100000000 Kbit , DLY 10 usec

swt05# show interface port-channel 1 | i MTU

MTU 9216 bytes, BW 200000000 Kbit , DLY 10 usec

swt06

Code:

swt06# show running-config interface port-channel 1

interface port-channel1

switchport

switchport mode trunk

spanning-tree port type network

vpc peer-link

swt06# show running-config interface ethernet 1/49

interface Ethernet1/49

description vPC Link

switchport

switchport mode trunk

channel-group 1 mode active

no shutdown

swt06# show running-config interface ethernet 1/50

interface Ethernet1/50

description vPC Link

switchport

switchport mode trunk

channel-group 1 mode active

no shutdown

swt06# show interface port-channel 1 | i MTU

MTU 9216 bytes, BW 200000000 Kbit , DLY 10 usec

swt06# show interface ethernet 1/49 | i MTU

MTU 9216 bytes, BW 100000000 Kbit , DLY 10 usec

swt06# show interface ethernet 1/50 | i MTU

MTU 9216 bytes, BW 100000000 Kbit , DLY 10 usec

Connectivity diagram for servers

Some MTU testing between hosts:

esx01 -> pve03

Code:

[root@esx01:~] ping -d -s 1472 10.210.10.112

PING 10.210.10.112 (10.210.10.112): 1472 data bytes

1480 bytes from 10.210.10.112: icmp_seq=0 ttl=64 time=0.150 ms

1480 bytes from 10.210.10.112: icmp_seq=1 ttl=64 time=0.138 ms

1480 bytes from 10.210.10.112: icmp_seq=2 ttl=64 time=0.164 ms

--- 10.210.10.112 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.138/0.151/0.164 ms

[root@esx01:~] ping -d -s 1473 10.210.10.112

PING 10.210.10.112 (10.210.10.112): 1473 data bytes

sendto() failed (Message too long)

sendto() failed (Message too long)

sendto() failed (Message too long)

--- 10.210.10.112 ping statistics ---

3 packets transmitted, 0 packets received, 100% packet loss

pve03 -> esx01

Code:

root@pve03:~# ping -M do -s 1472 10.210.10.111

PING 10.210.10.111 (10.210.10.111) 1472(1500) bytes of data.

1480 bytes from 10.210.10.111: icmp_seq=1 ttl=64 time=0.136 ms

1480 bytes from 10.210.10.111: icmp_seq=2 ttl=64 time=0.148 ms

1480 bytes from 10.210.10.111: icmp_seq=3 ttl=64 time=0.102 ms

^C

--- 10.210.10.111 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2051ms

rtt min/avg/max/mdev = 0.102/0.128/0.148/0.019 ms

root@pve03:~# ping -M do -s 1473 10.210.10.111

PING 10.210.10.111 (10.210.10.111) 1473(1501) bytes of data.

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

^C

--- 10.210.10.111 ping statistics ---

4 packets transmitted, 0 received, +4 errors, 100% packet loss, time 3094ms
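The 1472/1473 boundary in both directions is what a 1500-byte MTU predicts: the ICMP payload plus a 20-byte IPv4 header (no options) and an 8-byte ICMP header must fit in the MTU. A small sketch of that arithmetic follows; `icmp_payload` is an illustrative name. The 9000 case is included only because vmnic4 and vmnic6 report an MTU of 9000, and it is an assumption that those interfaces are relevant to this path.

```shell
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# MTU minus 20-byte IPv4 header (no options) minus 8-byte ICMP header.
icmp_payload() {
    echo $(( $1 - 20 - 8 ))
}

icmp_payload 1500   # prints 1472
icmp_payload 9000   # prints 8972
```

Both errors above ("Message too long" and "local error: message too long, mtu=1500") are raised by the sending host itself, so these tests confirm the local interface MTU rather than anything about the switches in between.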

Workstation - my workstation (172.30.32.19) is 5 hops from the servers.

workstation -> esx01 (10.210.10.111)

Code:

PS C:\> ping -f -l 1472 10.210.10.111

Pinging 10.210.10.111 with 1472 bytes of data:

Reply from 10.210.10.111: bytes=1472 time=3ms TTL=60

Reply from 10.210.10.111: bytes=1472 time=4ms TTL=60

Reply from 10.210.10.111: bytes=1472 time=2ms TTL=60

Reply from 10.210.10.111: bytes=1472 time=6ms TTL=60

Ping statistics for 10.210.10.111:

Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),

Approximate round trip times in milli-seconds:

Minimum = 2ms, Maximum = 6ms, Average = 3ms

PS C:\> ping -f -l 1473 10.210.10.111

Pinging 10.210.10.111 with 1473 bytes of data:

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Packet needs to be fragmented but DF set.

Ping statistics for 10.210.10.111:

Packets: Sent = 4, Received = 0, Lost = 4 (100% loss),

Due to firewall rules on the workstation (which I can't modify), the server can't ping the workstation. However, we can at least try.

pve03 -> workstation

Code:

root@pve03:~# ping -M do -s 1472 -c 3 -W 1 172.30.32.19

PING 172.30.32.19 (172.30.32.19) 1472(1500) bytes of data.

--- 172.30.32.19 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2079ms

root@pve03:~# ping -M do -s 1473 -c 3 -W 1 172.30.32.19

PING 172.30.32.19 (172.30.32.19) 1473(1501) bytes of data.

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

--- 172.30.32.19 ping statistics ---

3 packets transmitted, 0 received, +3 errors, 100% packet loss, time 2077ms

I can, however, reach my workstation's first hop from pve03:

Code:

root@pve03:~# ping -M do -s 1472 -c 3 -W 1 172.30.32.1

PING 172.30.32.1 (172.30.32.1) 1472(1500) bytes of data.

1480 bytes from 172.30.32.1: icmp_seq=1 ttl=61 time=1.02 ms

1480 bytes from 172.30.32.1: icmp_seq=2 ttl=61 time=0.960 ms

1480 bytes from 172.30.32.1: icmp_seq=3 ttl=61 time=0.964 ms

--- 172.30.32.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2002ms

rtt min/avg/max/mdev = 0.960/0.981/1.019/0.026 ms

root@pve03:~# ping -M do -s 1473 -c 3 -W 1 172.30.32.1

PING 172.30.32.1 (172.30.32.1) 1473(1501) bytes of data.

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

ping: local error: message too long, mtu=1500

--- 172.30.32.1 ping statistics ---

3 packets transmitted, 0 received, +3 errors, 100% packet loss, time 2075ms
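Rather than probing one size at a time, the largest payload that passes with DF set could be found with a binary search. The sketch below is one way to do it, not a definitive tool: `max_payload` and `probe_ping` are hypothetical names, the upper bound of 8972 assumes nothing in the path exceeds a 9000-byte MTU, and the `ping` flags are the same iputils ones used above.

```shell
# Probe: one DF-set echo of the given payload size; returns 0 if a reply arrives.
probe_ping() {
    ping -M do -c 1 -W 1 -s "$1" "$2" >/dev/null 2>&1
}

# Binary-search the largest payload for which the probe succeeds.
# $1 = target host, $2 = probe command (optional, defaults to probe_ping).
# Assumption: upper bound 8972 (9000-byte MTU minus 28 bytes of headers).
max_payload() {
    host=$1
    probe=${2:-probe_ping}
    lo=0
    hi=8972
    while [ "$lo" -lt "$hi" ]; do
        mid=$(( (lo + hi + 1) / 2 ))
        if "$probe" "$mid" "$host"; then
            lo=$mid
        else
            hi=$(( mid - 1 ))
        fi
    done
    echo "$lo"
}
```

For example, `max_payload 172.30.32.1` would be expected to report 1472 given the results above. A result of 0 means no probe succeeded, which is also what a host that drops ICMP entirely (such as the workstation) would produce.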