Hi community,

Recently we started observing the following strange behavior:

- VMs are migrated off one of the cluster nodes (1 of 7)

- The norecover and norebalance OSD flags are set

- The node (pve12) is shut down for hardware maintenance (RAM and battery replacement)

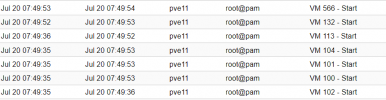

- A random number of VMs are rebooted on another node, pve11 (HA is starting them)

Any suggestions as to what may be causing this?

The latest changes we made were to the Ceph config: disabling debug logs and setting osd_memory_target=64g.

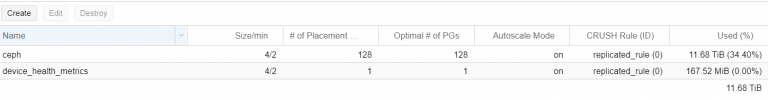

The current Ceph config is attached.

I cannot see anything in the Ceph logs or in the node's system logs.

I can see that this is happening, but nothing explains why. Those logs are also attached.

Looking forward to your comments.

Many thanks,

hepo