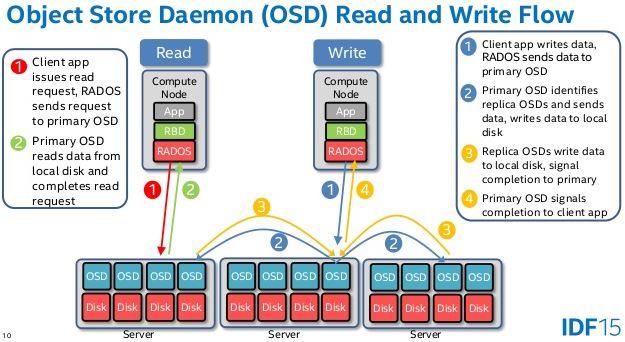

During testing with 3 OSDs running in Ceph, we found that when one OSD's network was interrupted, or its host was powered off and restarted, all VMs using Ceph had to wait for the OSD heartbeat timeout before they could be used normally again.

This is not very friendly in a production environment. Besides modifying the heartbeat time, is there a better solution?
