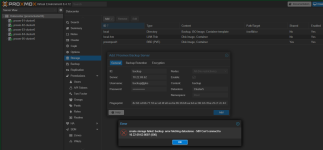

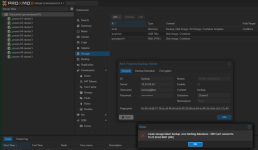

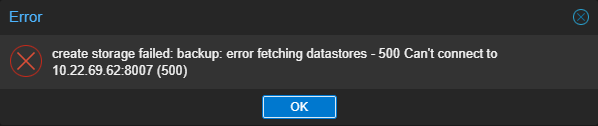

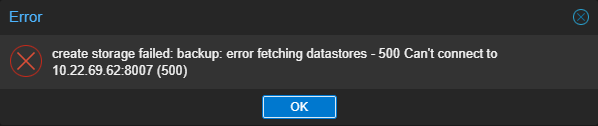

Hello, I have several Proxmox Virtual Environment (PVE 8.4) clusters with VMs, and I'd like to back them all up to a single Proxmox Backup Server (PBS 4.0.15). The first cluster, pve-cluster1, connects to PBS without problems, but I have had no luck with the other clusters.

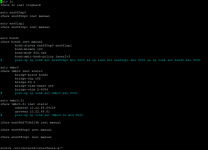

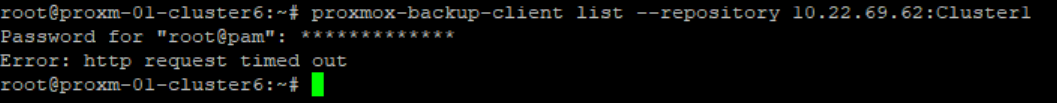

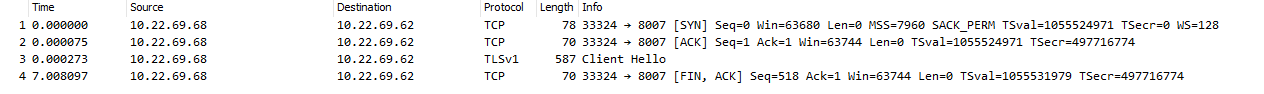

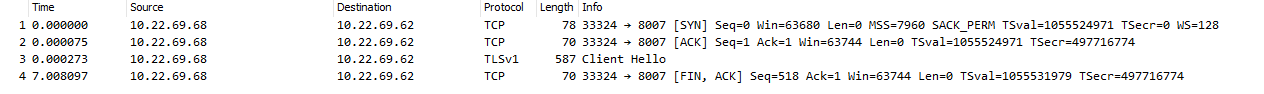

There does not seem to be a firewall on the PBS side blocking this connection. Any idea why it is not working? A tcpdump taken on PBS (10.22.69.62) shows that PBS is not answering requests from PVE (10.22.69.68):

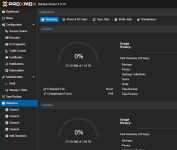
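To narrow this down further, the same reachability check can be repeated from each PVE node without going through the storage configuration. This is only a minimal sketch: it assumes PBS is listening on its default TCP port 8007 (not confirmed in this setup), and `can_connect` is a helper name made up for illustration.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port is established within timeout."""
    try:
        # create_connection performs the full TCP handshake; closing it right
        # away is enough to tell whether the port answers at all.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False


# Example call from a PVE node (address taken from this post, port 8007 is an
# assumption based on the PBS default):
# print(can_connect("10.22.69.62", 8007))
```

If this returns `False` on the nodes of the failing clusters but `True` on pve-cluster1, the problem is most likely on the network path (routing, VLAN, or a firewall in between) rather than in the PBS datastore setup.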

On the PBS side, the backup storage is split into separate logical volumes, one per cluster:

    root@pbs:~# lsblk
    NAME            MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
    sda               8:0    0 446.6G  0 disk
    ├─sda1            8:1    0  1007K  0 part
    ├─sda2            8:2    0     1G  0 part
    └─sda3            8:3    0 445.6G  0 part
      ├─pbs-swap    252:4    0     8G  0 lvm  [SWAP]
      └─pbs-root    252:5    0 421.6G  0 lvm  /
    sdb               8:16   0    14T  0 disk
    ├─data-cluster1 252:0    0   1.4T  0 lvm  /cluster1
    ├─data-cluster3 252:1    0     3T  0 lvm  /cluster3
    ├─data-cluster4 252:2    0     3T  0 lvm  /cluster4
    └─data-cluster5 252:3    0     3T  0 lvm  /cluster5

BR

Jonas
