After a lot of trial and error, I was finally able to get Windows clustering working under PVE with Ceph as the backend storage.

The way I was able to get this to work is using Storage Spaces Direct (I know this is a Datacenter only feature) but there were a couple things I had to do to allow storage spaces direct to provision the storage properly:

Now that we've gone through how this works, I wanted to go over a few things I tried that ultimately didn't work, and why it didn't work, to hopefully spur some discussion around how Proxmox/KVM could improve to support this in a way that works in Windows Server Standard, as Storage Spaces Direct is a Windows Server Datacenter only option

Failed Attempt 1: Shared Ceph Disk

The first method I tried was creating a ceph disk in one VM, then manually adding that disk to another VM's config file. This allowed the disk to show up in both VMs, however when I attempted to add the disk in the cluster I was informed that it could not be done because SCSI persistent reservations were not supported. After some exhaustive research I determined the SCSI adapter options in KVM simply don't support persistent reservations unless you're directly passing a SCSI disk through to the VM.

Failed Attempt 2: Ceph iSCSI

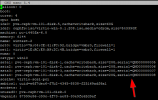

I've gotta admit this one looked promising. I installed the ceph-iscsi package and fought with the scripts enough to get everything set up like so (as shown from gwcli):

I added the iSCSI storage to Windows and it got a little further in the storage verification, meaning it appeared that the iSCSI service appeared to support persistent reservations, but when the cluster verifications actually attempted to use it, it failed.

When I started digging into this I found that the PetaSAN project has overcome this obstacle. Their platform runs on Ceph and is able to share iSCSI to Windows Clusters with support for SCSI persistent reservations.

The reason I'm posting the failures is that I'd like to gauge the PVE team and community's desire to add additional functionality on top of the existing Ceph infrastructure builtin into PVE. I know PVE's primary purpose is virtualization and containerization, but with the Ceph platform underpinning PVE's hyperconvergence it would be nice to have the capability of utilizing some of the more advanced features available with the use of Ceph. Some examples:

The way I got this to work was with Storage Spaces Direct (I know this is a Datacenter-only feature), but there were a couple of things I had to do to allow Storage Spaces Direct to provision the storage properly:

- Add the disks as SATA disks. SCSI disks presented an incompatibility that caused Storage Spaces to refuse to use the disks.

- You'll need a minimum of 4 disks of equivalent size added for this to work. You can have up to 6 SATA disks (sata0-sata5).

- Add a 'serial' parameter that is unique to each disk in the cluster on each node. To do this you have to manually edit the KVM config file at /etc/pve/nodes/{NODE_NAME}/qemu-server/{VM_ID}.conf and add the parameter to each disk's line.

- Once the storage is added you can create the cluster. Once the cluster is created, it needs to have Storage Spaces Direct enabled and all of your disks added. Run the following PowerShell command to do this:

  - `Enable-ClusterS2D -SkipEligibilityChecks -Verbose`

- Once Storage Spaces Direct has been enabled you can proceed to add a volume and set up the rest of the cluster.
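
For anyone who would rather not hand-edit every disk line, here is a rough Python sketch of the serial step from the list above. It takes the text of a VM config and appends a `serial=` option to each SATA disk line that doesn't already have one. The storage name `ceph-vm`, the VM ID 101, the `N1` node tag and the serial naming scheme are all made up for illustration and are not from my actual setup; as far as I know PVE caps the serial at 20 bytes, so keep whatever scheme you pick short. It only works on a string, so you would still have to read and write the .conf file yourself, and I'd test it on a copy first.

```python
import re

# Matches PVE VM config lines such as "sata0: ceph-vm:vm-101-disk-1,size=100G".
# Only sata0-sata5 exist, matching the 6-disk SATA limit mentioned above.
SATA_LINE = re.compile(r"^(sata[0-5]):\s*(.+)$")


def add_serials(conf_text: str, node_tag: str) -> str:
    """Return conf_text with a serial= option added to every SATA disk line.

    node_tag is a hypothetical short label for this VM (for example "N1").
    Combining it with the slot name keeps serials unique across the cluster.
    """
    out = []
    for line in conf_text.splitlines():
        m = SATA_LINE.match(line)
        # Leave non-disk lines, CD-ROM drives and already-tagged disks alone.
        if m and "serial=" not in m.group(2) and "media=cdrom" not in m.group(2):
            slot, opts = m.groups()
            serial = f"{node_tag}{slot.upper()}"  # e.g. "N1SATA0"
            line = f"{slot}: {opts},serial={serial}"
        out.append(line)
    return "\n".join(out)


if __name__ == "__main__":
    example = "\n".join([
        "cores: 4",
        "sata0: ceph-vm:vm-101-disk-1,size=100G",
        "sata1: ceph-vm:vm-101-disk-2,size=100G",
    ])
    print(add_serials(example, "N1"))
```

Use a different tag for each Windows VM (N1, N2, ...) so that no two disks anywhere in the cluster report the same serial.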

Now that we've gone through how this works, I want to go over a few things I tried that ultimately didn't work, and why. Hopefully this spurs some discussion around how Proxmox/KVM could improve to support this on Windows Server Standard, since Storage Spaces Direct is only available in Windows Server Datacenter.

Failed Attempt 1: Shared Ceph Disk

The first method I tried was creating a Ceph disk in one VM, then manually adding that disk to another VM's config file. This allowed the disk to show up in both VMs. However, when I attempted to add the disk to the cluster, I was informed that it could not be done because SCSI persistent reservations were not supported. After some exhaustive research, I determined that the SCSI adapter options in KVM simply don't support persistent reservations unless you're directly passing a SCSI disk through to the VM.

Failed Attempt 2: Ceph iSCSI

I've gotta admit this one looked promising. I installed the ceph-iscsi package and fought with the scripts enough to get everything set up like so (as shown from gwcli):

I added the iSCSI storage to Windows and it got a little further in the storage verification, meaning the iSCSI service appeared to support persistent reservations. But when the cluster verification actually attempted to use them, it failed.

When I started digging into this I found that the PetaSAN project has overcome this obstacle. Their platform runs on Ceph and is able to share iSCSI to Windows Clusters with support for SCSI persistent reservations.

The reason I'm posting the failures is that I'd like to gauge the PVE team's and the community's desire to add functionality on top of the existing Ceph infrastructure built into PVE. I know PVE's primary purpose is virtualization and containerization, but with the Ceph platform underpinning PVE's hyperconvergence it would be nice to be able to use some of the more advanced features Ceph makes possible. Some examples:

- iSCSI sharing of Ceph storage for use with other hypervisors like Hyper-V and VMware, as well as high availability clustering services in Windows and Linux

  - These all require persistent reservations to function properly

  - This could allow for an easier conversion path to PVE

  - See: PetaSAN, they have a kernel modification that allows persistent reservations using Ceph

- Multi-Server NFS sharing

  - See: PetaSAN

- Future: Multi-Server CIFS sharing/scale-out file server

  - Samba hasn't implemented the necessary SMBv3 protocol magic to enable scale-out file server yet, but it has been funded for development

  - Samba already has Ceph support
