In short, I am a PVE & SAN storage noob. MPIO is not working, even though as far as I know it is configured according to the vendor specs (details below).

Here are my settings:

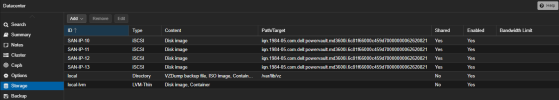

- PVE version 8.0.4 on a Dell R710 host with 6 NICs. 2 NICs are plugged into my iSCSI switch stack with the following settings:

- Dell PowerVault MD3600i SAN with 2 controllers (2 NICs each) plugged into the iSCSI switch stack.

- Each interface is configured on the iSCSI network

- 172.16.0.10, .11, .12, and .13 (/24)

- Each interface is configured on the iSCSI network

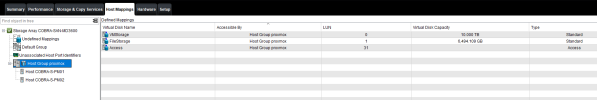

- I have successfully added all 4 paths under Datacenter > Storage

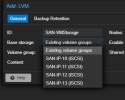

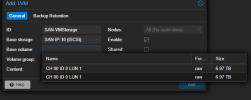

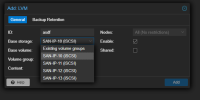

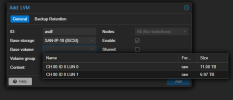

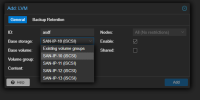

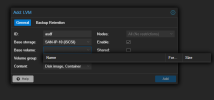

- When I try to add an LVM storage, I cannot get it to see any base volumes

I don't believe I have MPIO set up correctly, and I'm not sure why. My /etc/multipath.conf looks like this:

Code:

    root@COBRA-S-PM01:~# cat /etc/multipath.conf
    defaults {
        find_multipaths yes
        polling_interval 2
        path_selector "round-robin 0"
        path_grouping_policy multibus
        rr_min_io 100
        failback immediate
        no_path_retry queue
        user_friendly_names yes
    }
    blacklist {
        wwid .*
    }
    blacklist_exceptions {
        wwid "36C81F66000C459D70000000062620821"
    }
    devices {
        device {
            vendor "DELL"
            product "MD36xxi"
            path_grouping_policy group_by_prio
            prio rdac
            path_checker rdac
            path_selector "round-robin 0"
            hardware_handler "1 rdac"
            failback immediate
            features "2 pg_init_retries 50"
            no_path_retry 30
            rr_min_io 100
        }
    }
    multipaths {
        multipath {
            wwid "36C81F66000C459D70000000062620821"
            alias md36xxi
        }
    }

I added the following 2 lines to the bottom of my /etc/iscsi/iscsid.conf according to this guide:

Code:

    node.startup = automatic
    node.session.timeo.replacement_timeout = 15

iscsiadm -m session:

Code:

    # iscsiadm -m session
    tcp: [1] 172.16.0.13:3260,2 iqn.1984-05.com.dell:powervault.md3600i.6c81f66000c459d70000000062620821 (non-flash)
    tcp: [2] 172.16.0.11:3260,1 iqn.1984-05.com.dell:powervault.md3600i.6c81f66000c459d70000000062620821 (non-flash)
    tcp: [3] 172.16.0.10:3260,1 iqn.1984-05.com.dell:powervault.md3600i.6c81f66000c459d70000000062620821 (non-flash)
    tcp: [4] 172.16.0.12:3260,2 iqn.1984-05.com.dell:powervault.md3600i.6c81f66000c459d70000000062620821 (non-flash)

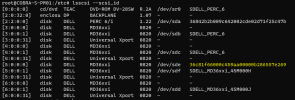
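To make the session list easier to read at a glance, here is a minimal sketch that counts sessions per target portal group tag (the number after the comma in the portal field). It only parses text in the `iscsiadm -m session` format; the function name `summarize_sessions` is hypothetical and not part of open-iscsi.

```shell
#!/bin/sh
# Count iSCSI sessions per target portal group tag (TPGT).
# Reads `iscsiadm -m session` style lines on stdin, for example:
#   tcp: [1] 172.16.0.13:3260,2 iqn.... (non-flash)
# summarize_sessions is a hypothetical helper name.
summarize_sessions() {
    awk '
        $1 == "tcp:" {
            # Field 3 looks like "172.16.0.13:3260,2": portal, then TPGT.
            n = split($3, parts, ",")
            if (n == 2) { count[parts[2]]++; total++ }
        }
        END {
            printf "sessions=%d\n", total
            for (tag in count) printf "tpgt=%s paths=%d\n", tag, count[tag]
        }
    ' | sort
}

# Usage on the host:
#   iscsiadm -m session | summarize_sessions
```

For the four sessions listed in this post, that would report 4 sessions, with 2 paths on each of the two portal groups.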

lsblk shows only local storage:

Code:

    root@COBRA-S-PM01:~# lsblk
    NAME               MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
    sda                  8:0    0 136.1G  0 disk
    ├─sda1               8:1    0  1007K  0 part
    ├─sda2               8:2    0     1G  0 part
    └─sda3               8:3    0 135.1G  0 part
      ├─pve-swap       253:0    0     8G  0 lvm  [SWAP]
      ├─pve-root       253:1    0  43.8G  0 lvm  /
      ├─pve-data_tmeta 253:2    0     1G  0 lvm
      │ └─pve-data     253:4    0  65.3G  0 lvm
      └─pve-data_tdata 253:3    0  65.3G  0 lvm
        └─pve-data     253:4    0  65.3G  0 lvm
    sr0                 11:0    1  1024M  0 rom

I know there is more I am not showing, but I'm not sure what would be helpful. Please let me know and I'll reply asap.
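If it helps, this is the kind of check I can run to confirm that no iSCSI-backed disks are showing up. It is only a minimal sketch, assuming lsblk's TRAN (transport) column is populated on this host; `list_iscsi_disks` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the names of whole disks whose transport is iSCSI.
# Expects `lsblk -dn -o NAME,TRAN` style input on stdin:
# one disk per line, name first, transport second (may be empty).
# list_iscsi_disks is a hypothetical helper name.
list_iscsi_disks() {
    awk '$2 == "iscsi" { print $1 }'
}

# Usage on the host (prints nothing when only local disks are visible):
#   lsblk -dn -o NAME,TRAN | list_iscsi_disks
```

Empty output would match what the plain lsblk listing suggests: the sessions are logged in, but no SAN disks are presented to the host.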

Thank you!!
