Backstory: I installed Proxmox and a Windows 10 VM a while back, and it was rock solid for quite a while. I decided I wanted to host something else on the Win10 VM, so I went to increase the storage allocated to the VM and, fumbling around with the GUI, managed to give the VM more storage than actually exists in the pool. On top of that, because Microsoft are morons, an install that was supposed to be 140 GB ended up adding something like 370 GB to my storage. That filled the partition completely, Windows 10 couldn't function in that condition, and the VM stopped booting.

I hadn't made a snapshot, so I figured the best solution was to add storage. I got a 2 TB hard drive to add to my existing 500 GB SSD and, after doing some research, found the commands to extend the storage pool to include that drive. The Windows 10 VM was then able to boot and, annoyingly, the occupied space on the drive dropped by over 200 GB once the installation finished (again, Microsoft are morons). All seemed fine at that point, though the Win10 VM crashed a couple of times for no apparent reason. I haven't had a chance to look into why yet.

Then yesterday the power company was doing maintenance and cut our power while I was sleeping. They apparently knocked on the door once. Anyway, since the power came back on, Proxmox will not boot. This is what I get:

Found volume group "pve" using metadata type lvm2

4 logical volume(s) in volume group "pve" now active

/dev/mapper/pve-root: recovering journal

/dev/mapper/pve-root: clean, 49658/6291456 files, 2465140/25165824 blocks

[ TIME ] Timed out waiting for device /dev/disk/by-label/Storage.

[DEPEND] Dependency failed for /mnt/data.

[DEPEND] Dependency failed for Local File Systems.

[DEPEND] Dependency failed for File System Check on /dev/disk/by-label/Storage.

You are in emergency mode. After logging in, type "journalctl -xb" to view system logs, "systemctl reboot" to reboot, "systemctl default" or "exit" to boot into default mode.

Give root password for maintenance

(or press Control-D to continue):

Continuing (Control-D) and booting into default mode both eventually come back to this same screen.

The method I found online and used to extend the existing volume was:

partition drive and set LVM 8e flag

create new physical volume with pvcreate /dev/sdb1

extend default VG with vgextend pve /dev/sdb1

extend data logical volume with lvextend /dev/mapper/pve-data /dev/sdb1

verify your FS is clean with fsck -nv /dev/mapper/pve-data

resize your FS with resize2fs -F /dev/mapper/pve-data

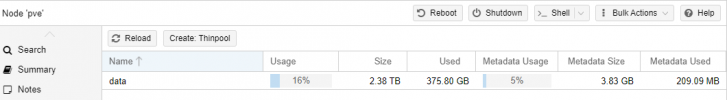

Attached are screenshots showing the result of this set of instructions.
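For anyone who wants to see the sequence in one place, here is a rough dry-run sketch of the steps listed above. The `run` and `extend_pve_data` helpers and the `DRY_RUN` variable are made up for illustration and are not part of the instructions I followed; the commands and device names are the ones from the list. With `DRY_RUN=1` (the default) nothing is executed, the commands are only printed. Whether the last two steps make sense depends on `pve-data` actually holding an ext filesystem, which is an assumption carried over from the instructions.

```shell
#!/bin/sh
# Dry-run sketch of the volume-extension steps from the post.
# DRY_RUN, run and extend_pve_data are hypothetical helpers for illustration.
DRY_RUN="${DRY_RUN:-1}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1: partition that was already created with the LVM (8e) type, e.g. /dev/sdb1
extend_pve_data() {
    part="$1"
    run pvcreate "$part" &&                          # new physical volume
    run vgextend pve "$part" &&                      # add it to the default VG
    run lvextend /dev/mapper/pve-data "$part" &&     # grow the data LV onto it
    run fsck -nv /dev/mapper/pve-data &&             # read-only check (assumes an ext FS)
    run resize2fs -F /dev/mapper/pve-data            # grow the FS (assumes an ext FS)
}

extend_pve_data /dev/sdb1
```

Run as-is, this only prints the five commands in order, each prefixed with `+`, so the sequence can be compared against what was actually typed.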

