Hello,

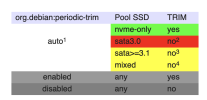

Using a general "how to get the best performance/life out of SSDs on ZFS" guide, I set `autotrim=on` on both my rpool and my vmStore1 pool.

Then I became aware that Proxmox includes a cron job to do this weekly:

```
/etc/cron.d# cat zfsutils-linux
PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin

# TRIM the first Sunday of every month.
24 0 1-7 * * root if [ $(date +\%w) -eq 0 ] && [ -x /usr/lib/zfs-linux/trim ]; then /usr/lib/zfs-linux/trim; fi

# Scrub the second Sunday of every month.
24 0 8-14 * * root if [ $(date +\%w) -eq 0 ] && [ -x /usr/lib/zfs-linux/scrub ]; then /usr/lib/zfs-linux/scrub; fi
```

Question: Do I need to have autotrim enabled with this cron job in place? If I don't need it and have it enabled anyway, does that cause problems or excess disk wear?

My current thinking: I can disable autotrim because Proxmox is already running both TRIM and SCRUB weekly? But I don't fully understand how to read cron jobs, and I'm not sure whether it's TRIMming/SCRUBbing all pools or just rpool.
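For reference when reading the cron file: both lines use the same trick. Cron starts the command at 00:24 on every day in the day-of-month range (`1-7` or `8-14`), and the shell test `[ $(date +\%w) -eq 0 ]` only lets it continue when that day is a Sunday (`date +%w` prints 0 for Sunday; the `%` is escaped because an unescaped `%` in a crontab line is treated as a newline). Cron can't express "first Sunday" on its own, because when both the day-of-month and day-of-week fields are restricted, a line matches if *either* field matches. The Python sketch below simulates that logic; `cron_run_times` is a hypothetical helper written only for illustration, not anything shipped with Proxmox or ZFS.

```python
from datetime import date, datetime, time


def cron_run_times(year: int, month: int, first_day: int, last_day: int) -> list[datetime]:
    """Hypothetical helper: the times in one month at which a line like
    `24 0 <first_day>-<last_day> * * root if [ $(date +%w) -eq 0 ] ...`
    gets past its Sunday check and actually runs the script."""
    runs = []
    for day in range(first_day, last_day + 1):
        d = date(year, month, day)
        # `date +%w` prints 0 for Sunday ... 6 for Saturday,
        # while Python's weekday() is 0 for Monday ... 6 for Sunday.
        percent_w = (d.weekday() + 1) % 7
        if percent_w == 0:
            # Cron fields "24 0" mean minute 24 of hour 0, i.e. 00:24.
            runs.append(datetime.combine(d, time(hour=0, minute=24)))
    return runs


if __name__ == "__main__":
    print([t.isoformat() for t in cron_run_times(2024, 1, 1, 7)])   # TRIM line  -> ['2024-01-07T00:24:00']
    print([t.isoformat() for t in cron_run_times(2024, 1, 8, 14)])  # scrub line -> ['2024-01-14T00:24:00']
```

Any seven consecutive days contain exactly one Sunday, so each window yields exactly one run per month; summed over a year, the TRIM line fires 12 times, not 52.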

On Arch-based systems I use `fstrim.timer` to schedule automatic SSD TRIM. I haven't seen it done with a cron job before, and none of those systems use ZFS, so I'd really appreciate any advice.

Thanks!