I have three Proxmox nodes in a cluster, and I need to connect the 3PAR storage to all of them.

I'm using multipath now, but each LUN shows up four times on every node. Sometimes a LUN shows up on one node but not on the others. How can I keep the LUNs in sync across the nodes automatically? Is there any way to solve this?
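In the `multipath -ll` output in this post, the four devices `sdw`, `sdx`, `sdy` and `sdz` are the four paths (2:0:0:3, 2:0:1:3, 3:0:0:3, 3:0:1:3) to a single LUN, `mpathi`. With multipath, each path normally shows up as its own `sd` device, and the `mpath` device is the one to use. The following is a minimal sketch that collapses `multipath -ll` output into one line per map with its WWID and path count. It assumes `user_friendly_names` is enabled, so map headers look like `mpathi (WWID) dm-N ...` as they do here. Running it on each node and comparing the WWID column is one way to spot a LUN that a node has not discovered. The function name `summarize_multipath` is hypothetical.

```shell
#!/bin/sh
# Sketch: print one line per multipath map: name, WWID, number of sdX paths.
# Assumes user_friendly_names-style headers: "mpathi (WWID) dm-28 VENDOR,PRODUCT".
summarize_multipath() {
    awk '
        # Map header: second field is "(WWID)", third field is "dm-N"
        $2 ~ /^[(].*[)]$/ && $3 ~ /^dm-/ {
            if (map != "") printf "%s %s paths=%d\n", map, wwid, n
            map = $1
            wwid = substr($2, 2, length($2) - 2)   # strip the parentheses
            n = 0
            next
        }
        # Path line: an H:C:T:L tuple immediately followed by an sdX name
        {
            for (i = 1; i < NF; i++)
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/ && $(i+1) ~ /^sd/) { n++; break }
        }
        END { if (map != "") printf "%s %s paths=%d\n", map, wwid, n }
    '
}

# Only query the live system if the multipath tool is installed.
if command -v multipath >/dev/null 2>&1; then
    multipath -ll | summarize_multipath
fi
```

If you feed it the `multipath -ll` output from this post, it should print `mpathi 360002ac0000000001600a61e0001d232 paths=4`.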

When I export a 3PAR LUN to the Proxmox nodes, it does not show up until I run `echo "- - -" | tee /sys/class/scsi_host/host*/scan` or reboot the node. Why doesn't it show up automatically?
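The command in the question writes the wildcard `- - -` (channel, target, LUN) to each host adapter's `scan` file, which makes the kernel probe for new LUNs. Below is a minimal sketch that wraps this step in a script meant to run as root on each node and then calls `multipath -r` to reload the multipath maps. The function name `rescan_scsi_hosts` and its sysfs-root parameter are hypothetical. The parameter exists only so the loop can be tried against a fake directory tree. The `sg3-utils` package's `rescan-scsi-bus.sh` is an existing alternative.

```shell
#!/bin/sh
# Sketch: rescan every SCSI host adapter, then reload multipath maps.
# $1 is a hypothetical sysfs root (default /sys), only useful for dry runs.
rescan_scsi_hosts() {
    root="${1:-/sys}"
    for scan in "$root"/class/scsi_host/host*/scan; do
        [ -e "$scan" ] || continue          # glob matched nothing: no SCSI hosts
        # "- - -" is a wildcard for channel, target and LUN
        if echo "- - -" > "$scan"; then
            echo "rescanned ${scan%/scan}"
        else
            echo "failed to write $scan" >&2
        fi
    done
}

if [ "$(id -u)" -eq 0 ]; then
    rescan_scsi_hosts /sys
    # Reload device maps so newly found paths are grouped into mpath devices.
    if command -v multipath >/dev/null 2>&1; then
        multipath -r
    fi
else
    echo "run as root to rescan the SCSI hosts" >&2
fi
```

Running the script on all three nodes after exporting a LUN keeps discovery consistent across the cluster. For example, you could use `for n in node1 node2 node3; do ssh root@$n sh -s < rescan.sh; done`, where the node names and the script file name are hypothetical.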

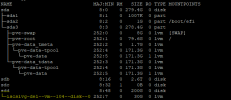

`lsblk` output:

    sdw 65:96 0 500G 0 disk
    └─mpathi 252:28 0 500G 0 mpath
      └─mpathi-part1 252:29 0 500G 0 part
        └─mpathj_testingdisk_vg-vm--103--disk--0 252:15 0 100G 0 lvm
    sdx 65:112 0 500G 0 disk
    └─mpathi 252:28 0 500G 0 mpath
      └─mpathi-part1 252:29 0 500G 0 part
        └─mpathj_testingdisk_vg-vm--103--disk--0 252:15 0 100G 0 lvm
    sdy 65:128 0 500G 0 disk
    └─mpathi 252:28 0 500G 0 mpath
      └─mpathi-part1 252:29 0 500G 0 part
        └─mpathj_testingdisk_vg-vm--103--disk--0 252:15 0 100G 0 lvm
    sdz 65:144 0 500G 0 disk
    └─mpathi 252:28 0 500G 0 mpath
      └─mpathi-part1 252:29 0 500G 0 part
        └─mpathj_testingdisk_vg-vm--103--disk--0 252:15 0 100G 0 lvm

`multipath -ll` output:

    mpathi (360002ac0000000001600a61e0001d232) dm-28 3PARdata,VV
    size=500G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='service-time 0' prio=50 status=active
      |- 2:0:0:3 sdw 65:96 active ready running
      |- 2:0:1:3 sdx 65:112 active ready running
      |- 3:0:0:3 sdy 65:128 active ready running
      `- 3:0:1:3 sdz 65:144 active ready running
