I have an Intel NUC7CJY which is currently running HAos with no issues, but I cannot get Immich to move the photo save location off the local disk of the NUC7CJY. So I was thinking it might be a better option to run PROXMOX on the NUC7CJY, install HA there, and run Immich in Docker? The only other thing I would need to set up would be Samba sharing for some of the USB drives I have connected to the NUC7CJY, which are currently shared out using the SambaNAS add-on for HA. From what I have watched on YouTube, it does not look hard to add sharing to PROXMOX, and none of the above looks that processor intensive, so the NUC7CJY should be able to handle the workload?

PROXMOX with HAos and immich and Cockpit for Samba Shares

- Thread starter Akore


Thank you for the reply. I have been testing PROXMOX with Home Assistant, Immich, and Cockpit (for the Samba share) on my old Lenovo T520 just to get my feet wet, since this is my first time using PROXMOX. I am still testing things out, but the PROXMOX server WebUI keeps crashing on me, along with the 3 mentioned VMs, which I also cannot access when the PROXMOX WebUI crashes. I can log into the terminal on the T520 just fine, so I need to do some more research to find out what might be causing it, which might well be a hardware issue. I might just try installing everything on the NUC to see if it still locks up on that hardware.

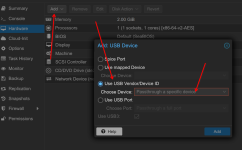

Your screenshot, is that from within HAos? I do not see that Hardware option under Cockpit, where I would like to do my Samba NAS sharing. I am hoping that once I get the Samba NAS sharing working I can find a way to have Immich point its storage to one of the Samba NAS shares in Cockpit.
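One possible way to get Immich's storage off the local disk, sketched here as an assumption rather than something confirmed in this thread, is to bind-mount a host directory into the container and point Immich's `UPLOAD_LOCATION` variable (from its Docker `.env` file) at it. The container ID `101` and both paths below are placeholders. The script only prints the command and the `.env` line so they can be reviewed before anything is changed.

```shell
#!/bin/sh
# Sketch only: prints what would be run or written; nothing is changed.
# The CT ID and all paths are placeholders for illustration.

bind_mount_cmd() {
    # $1 = container ID, $2 = directory on the Proxmox host,
    # $3 = mount point inside the container
    printf 'pct set %s -mp0 %s,mp=%s\n' "$1" "$2" "$3"
}

upload_location_line() {
    # $1 = path inside the container where Immich should store uploads
    printf 'UPLOAD_LOCATION=%s\n' "$1"
}

# Command to run on the Proxmox host:
bind_mount_cmd 101 /mnt/usb-photos /mnt/photos
# Line to place in Immich's .env file inside the container:
upload_location_line /mnt/photos/immich
```

In an unprivileged container the host directory's ownership may need adjusting, because container user IDs are mapped to a different range on the host.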

My screenshot is from a VM in Proxmox. The part shows where USB devices can be passed on to a VM, e.g. HAOS (which I also use).

Does the server have enough resources? Memory, storage space, CPU? Post the technical details of your server. And also your VM configurations.

For VMs:

Code:

qm config <VMID>

For CTs:

Code:

pct config <VMID>

Thank you for helping, Fireon. It mostly locks up during the night and I am then unable to ping the box, but I can log in and run a reboot command and it starts back up just fine. Below is the summary of the Lenovo T520, the HAos VM, the Cockpit CT, and the Immich CT:

Temp computer specs:

CPU usage 7.17% of 4 CPU(s)

IO delay 0.07%

Load average 0.25,0.35,0.64

RAM usage 57.30% (4.38 GiB of 7.64 GiB)

KSM sharing 0 B

/ HD space 5.20% (4.29 GiB of 82.48 GiB)

SWAP usage 0.00% (0 B of 7.64 GiB)

CPU(s) 4 x Intel(R) Core(TM) i5-2520M CPU @ 2.50GHz (1 Socket)

Kernel Version Linux 6.8.12-9-pve (2025-03-16T19:18Z)

Boot Mode EFI

Manager Version pve-manager/8.4.0/ec58e45e1bcdf2ac

Repository Status No Proxmox VE repository enable

HAos VM:

agent: 1

bios: ovmf

boot: order=scsi0

cores: 2

cpu: host

description: <div align='center'><a href='https%3A//Helper-Scripts.com' target='_blank' rel='noopener noreferrer'><img src='https%3A//raw.githubusercontent.com/community-scripts/ProxmoxVE/main/misc/images/logo-81x112.png'/></a>%0A%0A # Home Assistant OS%0A%0A <a href='https%3A//ko-fi.com/D1D7EP4GF'><img src='https%3A//img.shields.io/badge/☕-Buy me a coffee-blue' /></a>%0A </div>

efidisk0: local-lvm:vm-100-disk-0,efitype=4m,size=4M

localtime: 1

memory: 4096

meta: creation-qemu=9.2.0,ctime=1746622320

name: haos15.2

net0: virtio=02:8A:13:B3:8E:F2,bridge=vmbr0

onboot: 1

ostype: l26

scsi0: local-lvm:vm-100-disk-1,cache=writethrough,discard=on,size=32G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=3c71c079-8ce9-4a89-ad2b-12b3e90bcd08

tablet: 0

tags: community-script

usb0: host=13fd:0840

vmgenid: 338b2797-d4b9-4359-b84a-161fe99a2e3c

Immich CT:

arch: amd64

cores: 2

features: nesting=1

hostname: docker

memory: 2048

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=BC:24:11:8C:3A:73,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

rootfs: local-lvm:vm-101-disk-0,size=64G

swap: 2048

unprivileged: 1

Cockpit CT:

arch: amd64

cores: 2

description: <div align='center'>%0A <a href='https%3A//Helper-Scripts.com' target='_blank' rel='noopener noreferrer'>%0A <img src='https%3A//raw.githubusercontent.com/community-scripts/ProxmoxVE/main/misc/images/logo-81x112.png' alt='Logo' style='width%3A81px;height%3A112px;'/>%0A </a>%0A%0A <h2 style='font-size%3A 24px; margin%3A 20px 0;'>Cockpit LXC</h2>%0A%0A <p style='margin%3A 16px 0;'>%0A <a href='https%3A//ko-fi.com/community_scripts' target='_blank' rel='noopener noreferrer'>%0A <img src='https%3A//img.shields.io/badge/☕-Buy us a coffee-blue' alt='spend Coffee' />%0A </a>%0A </p>%0A %0A <span style='margin%3A 0 10px;'>%0A <i class="fa fa-github fa-fw" style="color%3A #f5f5f5;"></i>%0A <a href='https%3A//github.com/community-scripts/ProxmoxVE' target='_blank' rel='noopener noreferrer' style='text-decoration%3A none; color%3A #00617f;'>GitHub</a>%0A </span>%0A <span style='margin%3A 0 10px;'>%0A <i class="fa fa-comments fa-fw" style="color%3A #f5f5f5;"></i>%0A <a href='https%3A//github.com/community-scripts/ProxmoxVE/discussions' target='_blank' rel='noopener noreferrer' style='text-decoration%3A none; color%3A #00617f;'>Discussions</a>%0A </span>%0A <span style='margin%3A 0 10px;'>%0A <i class="fa fa-exclamation-circle fa-fw" style="color%3A #f5f5f5;"></i>%0A <a href='https%3A//github.com/community-scripts/ProxmoxVE/issues' target='_blank' rel='noopener noreferrer' style='text-decoration%3A none; color%3A #00617f;'>Issues</a>%0A </span>%0A</div>%0A

features: keyctl=1,nesting=1

hostname: cockpit

memory: 1024

net0: name=eth0,bridge=vmbr0,hwaddr=BC:24:11:44:A6:F4,ip=dhcp,type=veth

onboot: 1

ostype: debian

rootfs: local-lvm:vm-102-disk-0,size=4G

swap: 512

tags: community-script;monitoring;network

unprivileged: 1

Thanks for the information. Your Proxmox VE has a total of 8 GB memory + swap. That is really not much. Of that, 7 GB is allocated to the VMs. This leaves Proxmox itself with only 1 GB. Please also review the system requirements. If you have 8 GB of RAM available, I would allocate a maximum of 4 GB to the VMs. This will keep the system running smoothly.
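The arithmetic behind that estimate can be checked with a short sketch. The guest values are the `memory:` lines from the configs posted above, and the host figure is the 7.64 GiB from the summary. The variable names are only illustrative.

```shell
#!/bin/sh
# Memory assigned to each guest in MiB, taken from the posted configs.
HAOS_MIB=4096      # HAos VM
IMMICH_MIB=2048    # Immich CT
COCKPIT_MIB=1024   # Cockpit CT

# Host RAM from the summary: 7.64 GiB, converted to whole MiB.
HOST_MIB=$(awk 'BEGIN { printf "%d", 7.64 * 1024 }')

ALLOCATED_MIB=$((HAOS_MIB + IMMICH_MIB + COCKPIT_MIB))
LEFT_MIB=$((HOST_MIB - ALLOCATED_MIB))

echo "allocated to guests: ${ALLOCATED_MIB} MiB"
echo "left for the host:   ${LEFT_MIB} MiB"
```

With the usable 7.64 GiB rather than a nominal 8 GB, the margin left for the host comes out somewhat below 1 GB.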

Take a look at zram-tools. It allows you to compress your physical RAM, thereby getting more out of it. I set it to 50% on mini servers. It runs stable.

If that still works, it's fine. What does the system load look like at that time? How much RAM is being used, CPU, load? Post the output from that point in time:

Code:

head /proc/pressure/*

For you to check if the server is not responding:

Code:

top

Code:

htop

If the RAM usage is OK, send us the journal extract from the time when the problem occurred. We may be able to see more there. Please adjust date and time.

Code:

journalctl --since "2025-05-01 00:00" --until "2025-05-03 16:00" > "$(hostname)-journal.log"

Thank you again Fireon.

Since it only seems to lock up at night, it is hard to say what is going on at the time. It could just be a hardware issue, as the T520 laptop is very old and has gone through a lot of abuse (dropped, partial water damage, etc.), so I am not that concerned yet. I will be moving everything to an Intel NUC7PJY (Intel Pentium Silver J5040, 2.0 GHz, 8 GB) as soon as I go back to the States, pick it up, and bring it back with me to Colombia.

That said I did install the zram tools that you suggested and have it going so perhaps that will help the problem some. I set it to 50% as you suggested and I get this for the output when I type free -h:

root@ajtest:~# free -h

total used free shared buff/cache available

Mem: 7.6Gi 4.6Gi 1.1Gi 90Mi 2.3Gi 3.0Gi

Swap: 7.9Gi 0B 7.9Gi

This is usually what I see when it crashes if I see anything at all as most of the time I can not access the GUI nor ping the box:

And of course, wouldn't you know it, the WebGUI crashed as I was doing this, so I got you some good info:
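Because the lockups mostly happen overnight, one way to have numbers from the moment of the crash is to record the pressure and memory figures periodically. The following is a minimal sketch, not something prescribed in this thread. The log path and the function names are assumptions, and it would need to be scheduled (for example once a minute via cron) to cover the night.

```shell
#!/bin/sh
# Sketch: append one timestamped snapshot of memory pressure and
# available memory to a log file. LOG is a hypothetical path.
LOG="${LOG:-./pressure-snapshots.log}"

psi_avg10() {
    # Extract the avg10 value from one PSI line such as:
    #   some avg10=0.12 avg60=0.05 avg300=0.01 total=12345
    printf '%s\n' "$1" | sed -n 's/.*avg10=\([0-9.]*\).*/\1/p'
}

snapshot() {
    # "some" line of the memory pressure file plus MemAvailable in KiB.
    some_line=$(grep '^some' /proc/pressure/memory)
    avail_kb=$(awk '/^MemAvailable:/ { print $2 }' /proc/meminfo)
    printf '%s mem_some_avg10=%s mem_available_kb=%s\n' \
        "$(date '+%Y-%m-%d %H:%M:%S')" "$(psi_avg10 "$some_line")" "$avail_kb"
}

# Take one snapshot per invocation, only if the kernel exposes PSI.
if [ -r /proc/pressure/memory ]; then
    snapshot >> "$LOG"
fi
```

After a lockup, the last lines of the log show whether memory pressure was rising before the box went silent.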

Thanks for the link! Yeah, I thought it was very strange that the network would just drop off and that unplugging the cable and plugging it back in would bring it back online. I swapped out the cable and changed ports, and got the same thing. I thought maybe the NIC in the old T520 laptop was going bad. Glad to read that I am not losing my mind.

Maybe it's time to get something new

After increasing it to 50%, the service must also be restarted.

Code:

systemctl restart zramswap.service

Then 4GB of compressed RAM will be allocated to your SWAP and used preferentially if RAM becomes scarce. Otherwise, it looks okay in terms of resources.

Thank you for the reply, fireon. Yes, my T520 is very old, but like I said, this is just a test bed/trial run, as I have never set up PROXMOX before.

My HAos is still online and working just fine on a NUC7CJY all by itself, but I want to do a little more with my server, so I bought a NUC7PJY on eBay with an Intel® Pentium® Silver J5040 Processor (4M Cache, up to 3.20 GHz), which will give me 2 more cores. Unfortunately, even with that bump in processor I am still limited to 8 GB of RAM, so hopefully your little RAM trick will help with that issue. I take it I only have to set up zram once and it will stick if I restart the system? I did reboot after I set it up. Here is what I show as of now:

root@ajtest:~# PERCENT=50

root@ajtest:~# zramswap restart

<13>May 12 12:26:33 root: Stopping Zram

<13>May 12 12:26:33 root: Starting Zram

Setting up swapspace version 1, size = 256 MiB (268431360 bytes)

no label, UUID=c4a801f3-c16d-4476-bb5d-e17e329c2d4c

root@ajtest:~# free -h

total used free shared buff/cache available

Mem: 7.6Gi 4.8Gi 1.8Gi 55Mi 1.3Gi 2.8Gi

Swap: 7.9Gi 0B 7.9Gi

Does the above look correct?

Since I live in Colombia SA it will be a few weeks before I get the new NUC down to me but I leave tomorrow to head back to the states for 2 weeks so will be bringing it back with me.

MarkusKO, thank you for the link. So far my PROXMOX test bed on my T520 has not gone offline for over 24 hours with the changes recommended in that thread, so that is a good sign. I can do further testing today to try to get everything working the way I would like before I move it over to my new main system on the NUC7PJYHN. If all goes well, I can either sell the NUC7CJY, keep it for emergencies, or use it as another test unit for playing around in.

Well, the available swap has increased, but not by 3.82 GB. You can configure the 50% here: "/etc/default/zramswap"

Code:

# Compression algorithm selection

# speed: lz4 > zstd > lzo

# compression: zstd > lzo > lz4

# This is not inclusive of all that is available in latest kernels

# See /sys/block/zram0/comp_algorithm (when zram module is loaded) to see

# what is currently set and available for your kernel[1]

# [1] https://github.com/torvalds/linux/blob/master/Documentation/blockdev/zram.txt#L86

#ALGO=lz4

# Specifies the amount of RAM that should be used for zram

# based on a percentage the total amount of available memory

# This takes precedence and overrides SIZE below

PERCENT=50

# Specifies a static amount of RAM that should be used for

# the ZRAM devices, this is in MiB

#SIZE=256

# Specifies the priority for the swap devices, see swapon(2)

# for more details. Higher number = higher priority

# This should probably be higher than hdd/ssd swaps.

#PRIORITY=100

Is the service enabled and working correctly?

Code:

systemctl is-enabled zramswap.service

systemctl status zramswap.service

● zramswap.service - Linux zramswap setup

Loaded: loaded (/lib/systemd/system/zramswap.service; enabled; preset: enabled)

Active: active (exited) since Mon 2025-05-12 12:14:36 -05; 20h ago

Docs: man:zramswap(8)

Process: 43593 ExecStart=/usr/sbin/zramswap start (code=exited, status=0/SUCCESS)

Main PID: 43593 (code=exited, status=0/SUCCESS)

CPU: 17ms

May 12 12:14:36 ajtest systemd[1]: Starting zramswap.service - Linux zramswap setup...

May 12 12:14:36 ajtest zramswap[43600]: Setting up swapspace version 1, size = 256 MiB (268431360 bytes)

May 12 12:14:36 ajtest zramswap[43600]: no label, UUID=927bdb53-3242-4066-a9ec-bbe6fe698c8b

May 12 12:14:36 ajtest systemd[1]: Finished zramswap.service - Linux zramswap setup.
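The log line above reports a 256 MiB swap device. For comparison, here is a rough sketch of what 50% of this host's memory would come to. The helper name is made up for illustration, and the rounding may differ slightly from what zramswap itself computes.

```shell
#!/bin/sh
expected_zram_mib() {
    # $1 = MemTotal in KiB (the unit used by /proc/meminfo)
    # $2 = PERCENT value from the zramswap configuration
    echo $(( $1 * $2 / 100 / 1024 ))
}

# The host in this thread reports 7.64 GiB, which is about 8011120 KiB.
expected_zram_mib 8011120 50

# On a live system, the first argument could be read directly:
#   expected_zram_mib "$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)" 50
```

The result is on the order of 3900 MiB, in line with the 3.82 GB mentioned earlier and far above the 256 MiB in the log. Running `swapon --show` lists the swap devices that are actually active, with their sizes.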

According to this, only 256 MB are allocated, which is the default setting after installation. If you have configured 50%, the service must be restarted. Restart the service again. Has anything changed?

And also post your config:

Code:

cat /etc/default/zramswap
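In the transcript earlier in the thread, `PERCENT=50` was typed at the shell prompt. That only sets a variable in that shell session and does not touch `/etc/default/zramswap`, which may explain why the service still reports 256 MiB. A minimal sketch for writing the value into the config file follows. The function name is hypothetical, and it assumes GNU `sed` as shipped with Debian.

```shell
#!/bin/sh
set_zram_percent() {
    # $1 = path to the zramswap config file, $2 = percentage to set
    # Replace an existing PERCENT line (commented out or not);
    # append one if the file has none.
    if grep -q '^#\{0,1\}PERCENT=' "$1"; then
        sed -i "s/^#\{0,1\}PERCENT=.*/PERCENT=$2/" "$1"
    else
        printf 'PERCENT=%s\n' "$2" >> "$1"
    fi
}

# Intended use on the host, as root:
#   set_zram_percent /etc/default/zramswap 50
#   systemctl restart zramswap.service
#   swapon --show
```

Because the setting lives in the config file and the service is enabled, it should persist across reboots without being repeated.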