Proxmox VMs went back to a 2-year-old state after node reboot

- Thread starter waheed usman



Could anyone please help? We're in a real state of panic, as almost all settings and backup settings have been lost.

Hi,

please post the output of pveversion -v and qm config <ID>, replacing <ID> with the one from an affected VM. Please also check the Task History of affected VMs in the UI to get more information.

What exactly do you mean by "backup settings" have been lost? Do you still have access to your backup storage?
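A minimal shell sketch for gathering the requested output into one file, assuming it runs as root on the affected node and that the node name matches hostname. The helper names is_valid_vmid and collect_diag and the output file name are hypothetical; pvesh get /nodes/<node>/tasks --vmid is used here as a command-line counterpart to the Task History view in the UI.

```shell
#!/bin/sh
# Sketch only: collect pveversion, VM config and task history for one guest.
# Assumes root on a Proxmox VE node whose node name equals $(hostname).

# Accept only a plain numeric VMID (e.g. 10004) before passing it to qm/pvesh.
is_valid_vmid() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;
        *) return 0 ;;
    esac
}

# Hypothetical helper: write everything into diag-vm-<VMID>.txt.
collect_diag() {
    vmid="$1"
    if ! is_valid_vmid "$vmid"; then
        echo "usage: collect_diag <numeric VMID>" >&2
        return 1
    fi
    out="diag-vm-${vmid}.txt"
    {
        echo "### pveversion -v"
        pveversion -v
        echo "### qm config ${vmid}"
        qm config "$vmid"
        # Same data as Task History in the web UI, filtered to this guest.
        echo "### tasks for ${vmid} on $(hostname)"
        pvesh get "/nodes/$(hostname)/tasks" --vmid "$vmid"
    } > "$out" 2>&1
    echo "wrote $out"
}
```

Usage: run collect_diag 10004 on each node involved and attach the resulting text files to the thread.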

pveversion -v

proxmox-ve: 6.3-1 (running kernel: 5.4.106-1-pve)

pve-manager: 6.4-4 (running version: 6.4-4/337d6701)

pve-kernel-5.4: 6.4-1

pve-kernel-helper: 6.4-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.0.8

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.4-1

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-2

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-1

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

openvswitch-switch: 2.10.7+ds1-0+deb10u1

proxmox-backup-client: 1.1.5-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.5-3

pve-cluster: 6.4-1

pve-container: 3.3-5

pve-docs: 6.4-1

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-2

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-1

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1

------------------------------------------------

qm config 10004

balloon: 7000

bootdisk: virtio0

cores: 3

ide2: none,media=cdrom

memory: 9000

name: xxxxxxx

net0: e1000=82:F2:1A:00:BD:E3,bridge=vmbr222,firewall=1,tag=71

net1: e1000=9A:29:59:99:DA:4A,bridge=vmbr222,firewall=1,tag=72

net2: e1000=B2:0E:F7:EB:98:9C,bridge=vmbr222,firewall=1,link_down=1,tag=73

numa: 0

ostype: win10

scsihw: virtio-scsi-pci

smbios1: uuid=ab674678-7ba5-4641-bb43-7efeefd2397e

sockets: 1

virtio0: ZFS:vm-10004-disk-0,size=150G

vmgenid: 9710d741-e011-4926-a53a-844d12fb6feb

-------------------------


The migration log indicates that the last replication was done on 31 Jul 2021 (Unix timestamp 1627765363, converted below), and only 2.47M of data changed since then.
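A replica that is 1.5 years old is easy to miss until a failover starts from it. A minimal sketch of a staleness check, assuming the last sync time is available as a Unix timestamp (like 1627765363 here) and using a hypothetical one-day threshold; both helper names are hypothetical. On a node, pvesr status shows the state of the configured replication jobs.

```shell
#!/bin/sh
# Sketch only: flag a replication whose last sync is older than N days.

# Whole days between a last-sync epoch ($1) and "now" ($2, defaults to current time).
replication_age_days() {
    last_sync="$1"
    now="${2:-$(date +%s)}"
    echo $(( (now - last_sync) / 86400 ))
}

# Return non-zero and print STALE if the age exceeds $3 days (default 1).
check_replication_age() {
    age=$(replication_age_days "$1" "$2")
    max="${3:-1}"
    if [ "$age" -gt "$max" ]; then
        echo "STALE: last replication ${age} days ago"
        return 1
    fi
    echo "OK: last replication ${age} days ago"
}
```

For example, check_replication_age 1627765363 would report the replica behind this thread as stale when run today.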


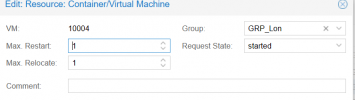


Code:

root@pve701 ~ # date -d@1627765363

Sat 31 Jul 2021 11:02:43 PM CEST

Can you check the task log for this VM on node UK-NODE-2 (select the node in the web UI, then Task History, and filter by VMID)?

If the VM was replicated to multiple nodes, you might also want to check the ZFS snapshots on them (send them somewhere safe, mount the file systems on them) to see if they contain more recent copies of the VM data.
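A minimal sketch for the snapshot check on each node, assuming the disks are ZFS zvols named vm-<VMID>-disk-N (as in the qm config output above) and that storage replication names its snapshots __replicate_<jobid>_<epoch>__. That naming is an assumption worth confirming with zfs list on the node; replicate_epoch and list_vm_snapshots are hypothetical helper names.

```shell
#!/bin/sh
# Sketch only: list a VM's ZFS snapshots and decode replication timestamps.

# Print the epoch from "pool/ds@__replicate_<jobid>_<epoch>__"; fail otherwise.
replicate_epoch() {
    snap="${1#*@}"                # drop "pool/dataset@"
    case "$snap" in
        __replicate_*__) ;;
        *) return 1 ;;            # not a replication snapshot
    esac
    snap="${snap%__}"             # strip trailing "__"
    echo "${snap##*_}"            # epoch follows the last "_"
}

# List snapshots (oldest first) of all disks of VM $1, with decoded dates.
list_vm_snapshots() {
    vmid="$1"
    zfs list -H -t snapshot -o name -s creation | grep "/vm-${vmid}-disk-" |
    while read -r name; do
        if ts=$(replicate_epoch "$name"); then
            echo "$name  $(date -d "@$ts")"
        else
            echo "$name"
        fi
    done
}
```

Usage: run list_vm_snapshots 10004 on every node that held a replica; a snapshot worth keeping can then be copied off with zfs send <snapshot> > <file on separate storage> before anything else writes to the pool.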

Was the guest part of an HA group and, if so, how is that group configured (cat /etc/pve/ha/groups.cfg)?

I think what might've happened is the following (you can check journalctl -u pve-ha-crm.service to see whether the recovery happened like that):

- UK-NODE-1 went down

- The HA manager recovered the guests to UK-NODE-2

- But UK-NODE-2 only had the 1.5-year-old state of the guests, because that was the last time replication had run

- When migrating back, the now "current" state (i.e. the 1.5-year-old state) was used, overwriting the disks on UK-NODE-1
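The last step depends on the HA group settings: with nofailback 0 (the default) and a higher priority for UK-NODE-1, the HA manager migrates the service back once that node is online again. A minimal sketch that summarizes each group, assuming the usual layout of /etc/pve/ha/groups.cfg (a "group: <name>" header followed by indented "nodes" and "nofailback" lines); ha_group_summary is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: print "<group> <nodes> nofailback=<0|1>" per HA group.
ha_group_summary() {
    cfg="${1:-/etc/pve/ha/groups.cfg}"
    awk '
        # A new section starts: flush the previous group, reset defaults.
        /^group:/ {
            if (name != "") print name, nodes, "nofailback=" nofb
            name = $2; nodes = "-"; nofb = 0; next
        }
        $1 == "nodes"      { nodes = $2 }
        $1 == "nofailback" { nofb = $2 }
        END { if (name != "") print name, nodes, "nofailback=" nofb }
    ' "$cfg"
}
```

HA resources are referenced as vm:<VMID>, so the CRM log for this guest can be narrowed down with journalctl -u pve-ha-crm.service | grep 'vm:10004'.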

proxmox-ve: 6.3-1

Side note: Proxmox VE 6 is EOL [1]. Consider upgrading to 7 [2].

[1] https://pve.proxmox.com/wiki/FAQ

[2] https://pve.proxmox.com/wiki/Upgrade_from_6.x_to_7.0
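A small sketch for spotting nodes still on an EOL release by parsing the pve-manager line of pveversion -v, as in the output posted above. The helper names and the default minimum of 7 (taken from the side note) are assumptions; pve6to7 --full is the checklist script on 6.4 that the upgrade guide [2] recommends running before upgrading.

```shell
#!/bin/sh
# Sketch only: detect the installed Proxmox VE major version.

# Read pveversion -v style text on stdin and print the pve-manager major version.
pve_major_version() {
    sed -n 's/^pve-manager: \([0-9][0-9]*\)\..*/\1/p' | head -n 1
}

# True if major version $1 is below the oldest supported major $2 (default 7).
is_pve_eol() {
    [ "$1" -lt "${2:-7}" ]
}

check_pve_eol() {
    major=$(pveversion -v | pve_major_version)
    if is_pve_eol "$major" "$1"; then
        echo "Proxmox VE ${major} is EOL; on 6.x run 'pve6to7 --full' before upgrading."
    else
        echo "Proxmox VE ${major} meets the assumed minimum."
    fi
}
```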