Hi

I know this subject has been brought up a couple of times already, but I've never managed to find a solution in any of those discussions.

First, I'll explain the setup.

I have a Ceph cluster consisting of three nodes and one PBS node.

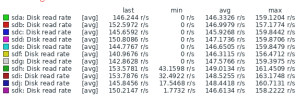

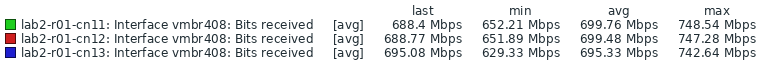

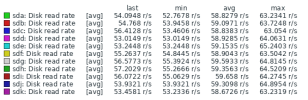

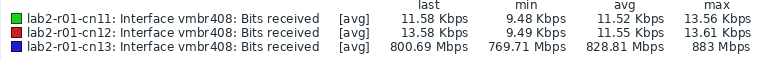

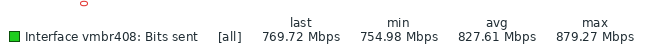

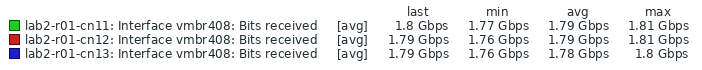

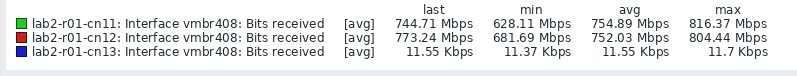

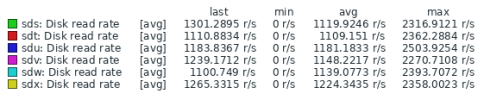

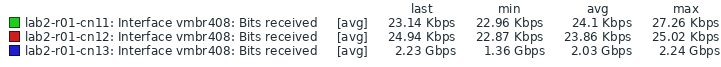

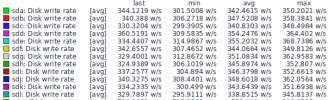

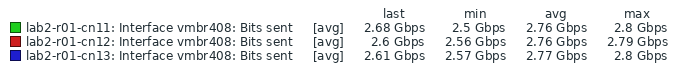

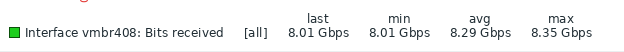

- The Ceph cluster consists of fairly new hardware with fast NVMe-based SSDs.

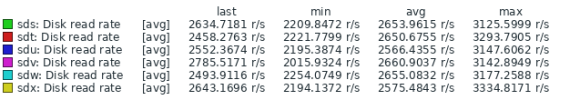

- The PBS node is a single older-generation server with 12x 12 TB SAS disks in a RAID10 setup with vdevs configured.

I've done extensive performance testing between the Ceph cluster and the PBS node, in both directions, and what I've noticed is slow restore speeds. Backups from the Ceph cluster reach up to 8 Gbit/s, provided I back up from all three nodes at the same time, but restores only reach around 2.3 Gbit/s at most. Restoring a single VM to the cluster only reaches around 800 Mbit/s.
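To put these rates into more familiar units, here is a minimal sketch that converts them to MB/s and estimates how long a restore would take. The 500 GB disk size is a hypothetical figure chosen only for illustration, not a measurement from this setup, and the function names are made up for this example.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert Gbit/s to MB/s using decimal units (1 Gbit = 1000 Mbit, 8 bit = 1 byte)."""
    return gbit_per_s * 1000 / 8


def restore_minutes(size_gb: float, gbit_per_s: float) -> float:
    """Minutes needed to move size_gb (decimal GB) at a sustained rate in Gbit/s."""
    return size_gb * 8 / gbit_per_s / 60


# Rates quoted in the post, in Gbit/s.
rates = {
    "backup, three nodes in parallel": 8.0,
    "restore, best case": 2.3,
    "restore, single VM": 0.8,
}

# Hypothetical VM disk size, used only to make the gap tangible.
EXAMPLE_DISK_GB = 500

for label, rate in rates.items():
    mb_s = gbit_to_mbyte_per_s(rate)
    minutes = restore_minutes(EXAMPLE_DISK_GB, rate)
    print(f"{label}: {mb_s:.1f} MB/s, about {minutes:.0f} min for {EXAMPLE_DISK_GB} GB")
```

In these units the single-VM restore rate of 800 Mbit/s works out to 100 MB/s, a tenth of the parallel backup rate.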

Currently I'm running PVE8 and PBS3, but the versions don't really matter: I've seen the same behaviour with PVE7 and PBS3 and all their sub-versions. I'm also aware that SSDs are the recommended disks for PBS, but even with SSDs the results are unsatisfactory.

I hope there are ways to improve the restore speed to sensible levels.

Thanks for the help and kind regards

Lail