After a lot of searching and testing, and after seeing plenty of people struggle with this, I was able to make it work. I wanted to document my exact steps in the hope it helps someone else. I have a VM provisioned and working, with snapshots and migrations.

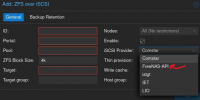

First, on TrueNAS Scale, I have a ZFS dataset with a bunch of space. Initially I wanted to create a zvol under it to limit the space for VMs, but interestingly this doesn't work; you get the error "parent is not a filesystem." I don't know for sure why (my guess is that the plugin creates each VM disk as its own zvol under whatever you point it at, and a zvol can't hold child volumes), but mapping it directly to the dataset works. So keep that in mind: either make a dedicated dataset, or expect your VM drives to sit in the root of the dataset next to other storage. Record the exact name of the dataset for later; it is visible under "Path" in the details for the dataset.

Then, go to Shares, Block (iSCSI). Most of my config was sourced from here: https://www.truenas.com/blog/iscsi-shares-on-truenas-freenas/ If you go through the Wizard, give the share a "Name", set the extent type to "Device", pick your dataset from the device dropdown, and set the sharing platform to "Modern OS: Extent block size 4k, TPC enabled, no Xen compat mode, SSD speed". I created the target outside of this wizard, as described just below.

First, click Add/Configure, where you'll get a series of tabs. On the "Target Global Configuration" tab, I created an IQN for Base Name. There is documentation out there for what it should be, but mine is "iqn.YYYY-MM.tld.domain.subdomain.nashost", given that my home domain is subdomain.domain.com, YYYY-MM is the month I made this, and nashost is my NAS hostname. I set the available space threshold to 15%, and the port is the default, 3260.

Under Portals, I added a new portal. The name is "nashostname Portal", and I added the IP for storage on the device. I have nothing for discovery authentication method or group.

For the Initiators Groups, we need the IQN of each Proxmox server. I couldn't find this in the GUI, but running "cat /etc/iscsi/initiatorname.iscsi" on the Proxmox host will show it. I added the initiator and gave it the name of the Proxmox host.
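If you have several Proxmox hosts, it can help to pull just the IQN out of that file rather than copying it by eye. This is a minimal sketch, assuming the usual open-iscsi layout of a single "InitiatorName=..." line; the function name `initiator_iqn` and the sample IQN are made up for illustration.

```shell
# Print the initiator IQN from an open-iscsi initiatorname file.
# Defaults to the standard path; another path can be passed in (hypothetical helper).
initiator_iqn() {
    file="${1:-/etc/iscsi/initiatorname.iscsi}"
    # The file holds a line like: InitiatorName=iqn.1993-08.org.debian:01:abcdef
    # Comment lines start with '#', so only strip the key when it starts the line.
    sed -n 's/^InitiatorName=//p' "$file"
}

# Example with a throwaway file (the IQN below is invented):
tmp="$(mktemp)"
printf '## comment line\nInitiatorName=iqn.1993-08.org.debian:01:0123456789ab\n' > "$tmp"
initiator_iqn "$tmp"
rm -f "$tmp"
```

On a real Proxmox host you would call `initiator_iqn` with no argument and paste the result into the Initiators Group in TrueNAS.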

I have nothing in Authorized Access, but if you wanted to use CHAP you would set it up there.

I created a new target, just named "target01". I don't have a bigger subnet for my Proxmox hosts, so under "Authorized Networks" I added the Proxmox host IPs, each with a /32 CIDR. For later, when plugging this into Proxmox, the full IQN will be the IQN Base, then a colon, then the target name, so "iqn.YYYY-MM.tld.domain.subdomain.nashost:target01". You also need to add an iSCSI group: select the Portal Group ID you created from the dropdown, and the Initiator Group ID you created before from its dropdown.
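Since the full target IQN gets typed into Proxmox by hand later, here is a small sketch of how it is put together, plus a loose format check. The helper names and the example base name are hypothetical, and the pattern is only a rough sanity check, not a full validator for the iSCSI naming rules.

```shell
# Join the Base Name and the target name: base, ":", target.
target_iqn() {
    printf '%s:%s\n' "$1" "$2"
}

# Loose check: "iqn.", a YYYY-MM date, a dot, a naming authority, then ":target".
looks_like_target_iqn() {
    printf '%s\n' "$1" | grep -Eq '^iqn\.[0-9]{4}-[0-9]{2}\.[^:]+:[^:]+$'
}

# Example values are placeholders, not the ones from my setup.
full="$(target_iqn "iqn.2024-01.com.example.home.nas" "target01")"
echo "$full"
looks_like_target_iqn "$full" && echo "format looks plausible"
```

The usual mistake this catches is pasting only the Base Name, or only "target01", into the target field.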

We do NOT create any Extents; they will be made by the API plugin.

We do NOT create any Associated Targets; the API plugin will associate them when we create a drive.

Proxmox will need to communicate with TrueNAS over SSH, so you will need to ensure that SSH is enabled and that root login is allowed. In my case, I run SSH on a non-standard port. That is an added complication that probably won't affect most people, and I address it later.

I use the freenas-proxmox plugin by TheGrandWazoo to get this working. The installation instructions are here: https://github.com/TheGrandWazoo/freenas-proxmox Once you install it and then reboot or restart the service, you should see a new provider when adding ZFS over iSCSI storage to the Datacenter in Proxmox.

I liked the steps in this guide about doing the SSH key exchange: https://xinux.net/index.php/Proxmox_iscsi_over_zfs_with_freenas

Code:

    portal_ip=10.*.*.*    # don't use asterisks, plug in your IP
    mkdir -p /etc/pve/priv/zfs
    # Braces are needed: $portal_ip_id_rsa would be read as a different (unset) variable
    ssh-keygen -f /etc/pve/priv/zfs/${portal_ip}_id_rsa
    ssh-copy-id -i /etc/pve/priv/zfs/${portal_ip}_id_rsa.pub root@$portal_ip

In my case, since my TrueNAS uses SSH on an alternate port, I added "-p ###" as a parameter to ssh-copy-id as well.
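One detail about the key file name is easy to trip over: Proxmox looks for the key as /etc/pve/priv/zfs/<portal IP>_id_rsa, and when the IP comes from a shell variable, the variable name has to be fenced off with braces. A small sketch, with a hypothetical helper name and a documentation-range IP standing in for the NAS:

```shell
# Build the private-key path for a given portal IP (illustrative helper).
zfs_key_path() {
    portal_ip="$1"
    # Braces end the variable name at "portal_ip". Written as $portal_ip_id_rsa,
    # the shell would look up a variable called "portal_ip_id_rsa" instead,
    # which is normally unset and expands to nothing.
    printf '/etc/pve/priv/zfs/%s\n' "${portal_ip}_id_rsa"
}

zfs_key_path 192.0.2.10
```

If the key ends up with a name that does not start with the portal IP, the symptom is usually Proxmox failing to reach the NAS over SSH even though a manual login works.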

Additionally, to support a non-standard port, you need to edit "~/.ssh/config" on the Proxmox host to include the following two lines with your NAS IP and SSH port. If you use the standard port, ignore this:

Code:

    Host 10.*.*.*
        Port ###

OK, back to the UI for adding a new ZFS over iSCSI storage. Name it whatever you like. The portal is the IP of the NAS. The pool needs to be the Path of the dataset, recorded above. The target needs to be the full IQN (IQN Base:target) that you created in TrueNAS, as indicated above. The API username is root. The iSCSI provider is freenas. I did NOT use thin provisioning, and I do have write cache enabled. The API IPv4 host is the IP of the NAS, and the API password is the root password of the TrueNAS box. Once that is done, the new storage (LUN) shows up in the sidebar on the left and in the storage list for the datacenter.
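For cross-checking what the dialog saved, it can be handy to know roughly what the entry in /etc/pve/storage.cfg should look like. The sketch below prints only the stock Proxmox keys for a ZFS over iSCSI storage; the storage ID, IP, pool, and IQN are placeholders. It deliberately leaves out the block size and the plugin's own API host/user/password keys, and Proxmox may omit options left at their defaults, so treat it as something to compare against, not something to paste in.

```shell
# Print the core keys of a ZFS over iSCSI storage entry (illustrative helper).
# Arguments: storage ID, portal IP, pool (dataset path), full target IQN.
zfs_iscsi_stanza() {
    printf 'zfs: %s\n' "$1"
    printf '\tiscsiprovider freenas\n'
    printf '\tportal %s\n' "$2"
    printf '\tpool %s\n' "$3"
    printf '\ttarget %s\n' "$4"
    printf '\tcontent images\n'
    printf '\tsparse 0\n'         # thin provisioning off, as in my setup
    printf '\tnowritecache 0\n'   # write cache left enabled
}

# All values here are made-up examples.
zfs_iscsi_stanza truenas-vms 192.0.2.10 tank/vmstore \
    "iqn.2024-01.com.example.home.nas:target01"
```

Comparing this against "cat /etc/pve/storage.cfg" is a quick way to spot a pool path or target IQN that was mistyped in the dialog.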

And that's it. The next time I create a VM, the NAS is available as a storage backing device. If I go back to TrueNAS and look at the dataset under Storage, I can see the VM volume there, and if I go back to the iSCSI share, I now see the Extent and the Associated Target. It seems to be working well. If anyone would do anything differently, I'm open to feedback, but if you are struggling, this is what got me working.
