After upgrading to Proxmox VE 8 with a clean install and creating a guest VM, I experience random freezes of the guest VM roughly every 24-48 hours: no ping or SSH, and VNC shows an unresponsive login screen.

I can't find any clues in the logs. Last freeze today at 12:28:

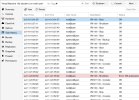

Guest (docker1) journal around the freeze:

```
Jul 16 12:10:03 docker1 systemd[1]: sysstat-collect.service: Deactivated successfully.
Jul 16 12:10:03 docker1 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.
Jul 16 12:10:33 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-36350f71e6b5c785c94d18d3d232c148d9a8aa6d18f7137d70e44d044a73822d-runc.Buk47a.mount: Deactivated successfully.
Jul 16 12:10:38 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-03424995bdff11a5d3a09e322df752f0b97a131ab95454cce4075f2517cdafe7-runc.rY7tAt.mount: Deactivated successfully.
Jul 16 12:10:53 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-af01baf74766168d567454c6f63b5f4e0093ed5a27a38c5f57050fc379783d9d-runc.1399Sv.mount: Deactivated successfully.
Jul 16 12:10:54 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-1f856eb722c23c6b5b8b70ef7f34c4be7ba19e1f8de103054ea6ce2fca856745-runc.pN1gYN.mount: Deactivated successfully.
Jul 16 12:10:59 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-331b34a9b9725ed1ec555e8e47ddedbddafae8c8f0755f8a2e447fd77150c6ef-runc.FrOsAA.mount: Deactivated successfully.
Jul 16 12:11:38 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-03424995bdff11a5d3a09e322df752f0b97a131ab95454cce4075f2517cdafe7-runc.e44mSq.mount: Deactivated successfully.
Jul 16 12:11:55 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-1f856eb722c23c6b5b8b70ef7f34c4be7ba19e1f8de103054ea6ce2fca856745-runc.ya6wTh.mount: Deactivated successfully.
Jul 16 12:12:25 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-1f856eb722c23c6b5b8b70ef7f34c4be7ba19e1f8de103054ea6ce2fca856745-runc.TeUTgg.mount: Deactivated successfully.
Jul 16 12:12:33 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-36350f71e6b5c785c94d18d3d232c148d9a8aa6d18f7137d70e44d044a73822d-runc.G4nHmB.mount: Deactivated successfully.
Jul 16 12:12:54 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-af01baf74766168d567454c6f63b5f4e0093ed5a27a38c5f57050fc379783d9d-runc.LZ9x9M.mount: Deactivated successfully.
Jul 16 12:15:01 docker1 CRON[1421295]: pam_unix(cron:session): session opened for user root(uid=0) by root(uid=0)
Jul 16 12:15:01 docker1 CRON[1421296]: (root) CMD (command -v debian-sa1 > /dev/null && debian-sa1 1 1)
Jul 16 12:15:01 docker1 CRON[1421295]: pam_unix(cron:session): session closed for user root
Jul 16 12:17:01 docker1 CRON[1422494]: pam_unix(cron:session): session opened for user root(uid=0) by root(uid=0)
Jul 16 12:17:01 docker1 CRON[1422495]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
Jul 16 12:17:01 docker1 CRON[1422494]: pam_unix(cron:session): session closed for user root
Jul 16 12:20:00 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-331b34a9b9725ed1ec555e8e47ddedbddafae8c8f0755f8a2e447fd77150c6ef-runc.uquV5A.mount: Deactivated successfully.
Jul 16 12:20:00 docker1 systemd[1]: Starting sysstat-collect.service - system activity accounting tool...
Jul 16 12:20:01 docker1 systemd[1]: sysstat-collect.service: Deactivated successfully.
Jul 16 12:20:01 docker1 systemd[1]: Finished sysstat-collect.service - system activity accounting tool.
Jul 16 12:20:04 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-36350f71e6b5c785c94d18d3d232c148d9a8aa6d18f7137d70e44d044a73822d-runc.EKKmsy.mount: Deactivated successfully.
Jul 16 12:20:22 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-3ba4a821a7ad09de62a2017ad64174d52202ca82bd5f66566e7f15085c29d2e4-runc.NVEBxx.mount: Deactivated successfully.
Jul 16 12:20:25 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-af01baf74766168d567454c6f63b5f4e0093ed5a27a38c5f57050fc379783d9d-runc.MyibSb.mount: Deactivated successfully.
Jul 16 12:20:34 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-36350f71e6b5c785c94d18d3d232c148d9a8aa6d18f7137d70e44d044a73822d-runc.zqy13Q.mount: Deactivated successfully.
Jul 16 12:20:52 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-3ba4a821a7ad09de62a2017ad64174d52202ca82bd5f66566e7f15085c29d2e4-runc.fkTveP.mount: Deactivated successfully.
Jul 16 12:20:55 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-b6fe25efb13af17dfd87cc94cb010b645cbd83b30668fdb295a809ab7cd79f2e-runc.Ojz6Al.mount: Deactivated successfully.
Jul 16 12:20:55 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-af01baf74766168d567454c6f63b5f4e0093ed5a27a38c5f57050fc379783d9d-runc.dbNuBv.mount: Deactivated successfully.
Jul 16 12:20:56 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-c92bc8bba14ed88368afb275cf9fe1f7b385d03ba15d8b24c2e07f7801779a97-runc.XyNLyL.mount: Deactivated successfully.
Jul 16 12:21:22 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-3ba4a821a7ad09de62a2017ad64174d52202ca82bd5f66566e7f15085c29d2e4-runc.3C4VZ5.mount: Deactivated successfully.
Jul 16 12:21:26 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-1f856eb722c23c6b5b8b70ef7f34c4be7ba19e1f8de103054ea6ce2fca856745-runc.idebEI.mount: Deactivated successfully.
Jul 16 12:21:34 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-36350f71e6b5c785c94d18d3d232c148d9a8aa6d18f7137d70e44d044a73822d-runc.eaij6n.mount: Deactivated successfully.
Jul 16 12:21:55 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-af01baf74766168d567454c6f63b5f4e0093ed5a27a38c5f57050fc379783d9d-runc.geocdW.mount: Deactivated successfully.
Jul 16 12:21:55 docker1 systemd[1]: run-docker-runtime\x2drunc-moby-c92bc8bba14ed88368afb275cf9fe1f7b385d03ba15d8b24c2e07f7801779a97-runc.Ub8UoO.mount: Deactivated successfully.
-- Boot e8b5ce55ec854244a122138fa8dfbb97 --
Jul 16 15:28:22 docker1 kernel: Linux version 6.8.0-38-generic (buildd@lcy02-amd64-049) (x86_64-linux-gnu-gcc-13 (Ubuntu 13.2.0-23ubuntu4) 13.2.0, GNU ld (GNU Binutils for Ubuntu) 2.42) #38-Ubuntu SMP PREEMPT_DYNAMIC Fri Jun 7 15:25:01 UTC 2024 (Ubuntu 6.8.0-38.38-generic 6.8.8)
Jul 16 15:28:22 docker1 kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-6.8.0-38-generic root=UUID=f90df74e-31c6-4338-b11e-6a796b24ce10 ro
```
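The guest's last journal entry (12:21:55) is about six minutes before the reported 12:28 freeze, and the next entry is the 15:28:22 boot. To measure that silent window the same way across several freezes, here is a minimal sketch of a hypothetical helper (`boot_gaps` and the file name `boot_gaps.py` are illustrative, not part of any tool). It reads `journalctl` output in the default short format, as pasted above, and reports the last entry before each `-- Boot <id> --` separator alongside the first entry after it. Because the short format omits the year, the year is an assumption supplied by the caller.

```python
import re
import sys
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

# Leading timestamp of a short-format journal line, e.g. "Jul 16 12:21:55".
TS_RE = re.compile(r"^([A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) ")
# Boot separator printed by journalctl, e.g. "-- Boot e8b5ce55ec854244a122138fa8dfbb97 --".
BOOT_RE = re.compile(r"^-- Boot ([0-9a-f]+) --$")

# (last entry before the boot, boot ID, first entry after it, gap between the two)
Gap = Tuple[Optional[datetime], str, datetime, Optional[timedelta]]


def parse_ts(stamp: str, year: int) -> datetime:
    """Parse 'Mon DD HH:MM:SS'; the short journal format does not include a year."""
    return datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")


def boot_gaps(lines: Iterable[str], year: int) -> List[Gap]:
    """Report, for every boot separator, the journal entries on either side of it."""
    gaps: List[Gap] = []
    last: Optional[datetime] = None  # timestamp of the most recent entry seen
    pending: Optional[Tuple[Optional[datetime], str]] = None  # separator awaiting next entry
    for raw in lines:
        line = raw.strip()
        boot = BOOT_RE.match(line)
        if boot:
            pending = (last, boot.group(1))
            continue
        stamp = TS_RE.match(line)
        if not stamp:
            continue  # blank lines and anything without a leading timestamp
        ts = parse_ts(stamp.group(1), year)
        if pending is not None:
            prev, boot_id = pending
            gaps.append((prev, boot_id, ts, ts - prev if prev else None))
            pending = None
        last = ts
    return gaps


if __name__ == "__main__":
    # Hypothetical usage: journalctl -o short > journal.txt
    #                     python3 boot_gaps.py journal.txt
    YEAR = 2024  # assumption: the short format omits the year
    for path in sys.argv[1:]:
        with open(path) as f:
            for prev, boot_id, first, gap in boot_gaps(f, YEAR):
                print(f"{path}: last entry {prev}, boot {boot_id} at {first}, gap {gap}")
```

Run against the guest excerpt above, it should report a gap of 3:06:27 between the last entry at 12:21:55 and the boot at 15:28:22.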

Host (pve1) journal over the same period:

```
Jul 16 12:17:01 pve1 CRON[351640]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Jul 16 12:17:01 pve1 CRON[351641]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
Jul 16 12:17:01 pve1 CRON[351640]: pam_unix(cron:session): session closed for user root
Jul 16 12:51:53 pve1 smartd[535]: Device: /dev/sda [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 65 to 66
Jul 16 12:51:53 pve1 smartd[535]: Device: /dev/sda [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 35 to 34
Jul 16 12:51:58 pve1 smartd[535]: Device: /dev/sdb [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 67 to 68
Jul 16 12:51:58 pve1 smartd[535]: Device: /dev/sdb [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 33 to 32
Jul 16 13:17:01 pve1 CRON[360394]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)
Jul 16 13:17:01 pve1 CRON[360395]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)
Jul 16 13:17:01 pve1 CRON[360394]: pam_unix(cron:session): session closed for user root
Jul 16 13:21:53 pve1 smartd[535]: Device: /dev/sda [SAT], SMART Usage Attribute: 190 Airflow_Temperature_Cel changed from 66 to 67
```
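Apart from the hourly cron runs, the host excerpt contains only smartd attribute changes. Assuming the convention commonly seen on Seagate drives, where the normalized value of attribute 190 (Airflow_Temperature_Cel) is 100 minus the drive temperature in °C and attribute 194 (Temperature_Celsius) reports the temperature directly, each pair of lines describes the same one-degree drop seen twice:

$$
\begin{aligned}
\text{/dev/sda:}&\quad 100 - 35 = 65 \;\rightarrow\; 100 - 34 = 66 \\
\text{/dev/sdb:}&\quad 100 - 33 = 67 \;\rightarrow\; 100 - 32 = 68
\end{aligned}
$$

Under that assumption these entries read as routine temperature reporting rather than an error.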

VM configuration:

```
agent: 1
boot: order=scsi0;ide2;net0
cores: 4
cpu: x86-64-v2-AES
ide2: none,media=cdrom
memory: 49152
meta: creation-qemu=9.0.0,ctime=1720434262
name: docker1
net0: virtio=BC:24:11:2D:A5:70,bridge=vmbr0,firewall=1
net1: virtio=BC:24:11:06:0B:89,bridge=vmbr1,firewall=1
numa: 0
ostype: l26
scsi0: cryptssd:100/vm-100-disk-0.raw,iothread=1,size=256G
scsihw: virtio-scsi-single
smbios1: uuid=31dd0bf4-86fc-4c95-8161-69248bc968e5
sockets: 1
vmgenid: 0a0b9edb-48df-4422-9d39-e1c3f3d00401
```

Host package versions:

```
proxmox-ve: 8.2.0 (running kernel: 6.8.8-2-pve)
pve-manager: 8.2.4 (running version: 8.2.4/faa83925c9641325)
proxmox-kernel-helper: 8.1.0
proxmox-kernel-6.8: 6.8.8-2
proxmox-kernel-6.8.8-2-pve-signed: 6.8.8-2
proxmox-kernel-6.8.4-2-pve-signed: 6.8.4-2
proxmox-kernel-6.5.13-5-pve: 6.5.13-5
ceph-fuse: 17.2.7-pve3
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown2: 3.2.0-1+pmx8
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-4
libknet1: 1.28-pve1
libproxmox-acme-perl: 1.5.1
libproxmox-backup-qemu0: 1.4.1
libproxmox-rs-perl: 0.3.3
libpve-access-control: 8.1.4
libpve-apiclient-perl: 3.3.2
libpve-cluster-api-perl: 8.0.7
libpve-cluster-perl: 8.0.7
libpve-common-perl: 8.2.1
libpve-guest-common-perl: 5.1.3
libpve-http-server-perl: 5.1.0
libpve-network-perl: 0.9.8
libpve-rs-perl: 0.8.9
libpve-storage-perl: 8.2.3
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 6.0.0-1
lxcfs: 6.0.0-pve2
novnc-pve: 1.4.0-3
proxmox-backup-client: 3.2.7-1
proxmox-backup-file-restore: 3.2.7-1
proxmox-firewall: 0.4.2
proxmox-kernel-helper: 8.1.0
proxmox-mail-forward: 0.2.3
proxmox-mini-journalreader: 1.4.0
proxmox-offline-mirror-helper: 0.6.6
proxmox-widget-toolkit: 4.2.3
pve-cluster: 8.0.7
pve-container: 5.1.12
pve-docs: 8.2.2
pve-edk2-firmware: 4.2023.08-4
pve-esxi-import-tools: 0.7.1
pve-firewall: 5.0.7
pve-firmware: 3.12-1
pve-ha-manager: 4.0.5
pve-i18n: 3.2.2
pve-qemu-kvm: 9.0.0-6
pve-xtermjs: 5.3.0-3
qemu-server: 8.2.1
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
zfsutils-linux: 2.2.4-pve1
```
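The GitHub issue referenced under "Stuff I've tried" concerns iothread with VirtIO SCSI, and the VM config above combines `scsihw: virtio-scsi-single` with `iothread=1` on `scsi0`. To check the same combination on other VMs, here is a minimal sketch of a hypothetical helper (`parse_vm_config` and `iothread_disks` are illustrative names) that parses the `key: value` format shown above and lists disks with iothread enabled. It assumes the standard per-node location `/etc/pve/qemu-server/` and only reads files.

```python
import glob
import re
from typing import Dict, List, Tuple

# Disk keys in a Proxmox VM config, e.g. scsi0, virtio1, sata2, ide2.
DISK_KEY = re.compile(r"^(scsi|virtio|sata|ide)\d+$")
TRUE_VALUES = {"1", "on", "yes", "true"}


def parse_vm_config(text: str) -> Dict[str, str]:
    """Parse the 'key: value' lines of the current VM config, skipping snapshots."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # blank lines and description comments
            continue
        if line.startswith("["):  # a [snapshot] section starts; the current config ends
            break
        key, sep, value = line.partition(": ")
        if sep:
            conf[key] = value
    return conf


def iothread_disks(conf: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (disk key, volume) for every disk that has iothread enabled."""
    found: List[Tuple[str, str]] = []
    for key, value in conf.items():
        if not DISK_KEY.match(key):
            continue
        volume, *rest = value.split(",")
        options = dict(opt.split("=", 1) for opt in rest if "=" in opt)
        if options.get("iothread", "").lower() in TRUE_VALUES:
            found.append((key, volume))
    return found


if __name__ == "__main__":
    # On a Proxmox node this directory holds one <vmid>.conf per VM;
    # elsewhere the glob matches nothing and the script prints nothing.
    for path in sorted(glob.glob("/etc/pve/qemu-server/*.conf")):
        with open(path) as f:
            conf = parse_vm_config(f.read())
        print(f"{path}: scsihw={conf.get('scsihw')} iothread disks={iothread_disks(conf)}")
```

For the config above it would report `scsi0` on `cryptssd:100/vm-100-disk-0.raw` with `scsihw=virtio-scsi-single`.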

Host system information (inxi):

```
System:
Kernel: 6.8.8-2-pve arch: x86_64 bits: 64 compiler: gcc v: 12.2.0 Console: pty pts/1
Distro: Debian GNU/Linux 12 (bookworm)
Machine:
Type: Desktop Mobo: HARDKERNEL model: ODROID-H3 v: 1.0 serial: N/A UEFI: American Megatrends
v: 5.19 date: 07/19/2023
CPU:
Info: quad core model: Intel Pentium Silver N6005 bits: 64 type: MCP arch: Alder Lake rev: 0
cache: L1: 256 KiB L2: 1.5 MiB L3: 4 MiB
Speed (MHz): avg: 2675 high: 3300 min/max: 800/3300 cores: 1: 3300 2: 3300 3: 3300 4: 800
bogomips: 15974
Flags: ht lm nx pae sse sse2 sse3 sse4_1 sse4_2 ssse3 vmx
Graphics:
Device-1: Intel JasperLake [UHD Graphics] driver: i915 v: kernel arch: Gen-11 ports:
active: HDMI-A-2 empty: DP-1,HDMI-A-1 bus-ID: 00:02.0 chip-ID: 8086:4e71
Display: server: No display server data found. Headless machine? tty: 145x37
Monitor-1: HDMI-A-2 model: HP L1940T res: 1280x1024 dpi: 86 diag: 484mm (19.1")
API: OpenGL Message: GL data unavailable in console for root.
Audio:
Device-1: Intel Jasper Lake HD Audio driver: snd_hda_intel v: kernel bus-ID: 00:1f.3
chip-ID: 8086:4dc8
API: ALSA v: k6.8.8-2-pve status: kernel-api
Network:
Device-1: Realtek RTL8125 2.5GbE driver: r8169 v: kernel pcie: speed: 5 GT/s lanes: 1 port: 4000
bus-ID: 01:00.0 chip-ID: 10ec:8125
IF: enp1s0 state: up speed: 1000 Mbps duplex: full mac: <filter>
Device-2: Realtek RTL8125 2.5GbE driver: r8169 v: kernel pcie: speed: 5 GT/s lanes: 1
port: 3000 bus-ID: 02:00.0 chip-ID: 10ec:8125
IF: enp2s0 state: down mac: <filter>
IF-ID-1: bonding_masters state: N/A speed: N/A duplex: N/A mac: N/A
IF-ID-2: fwbr100i0 state: up speed: 10000 Mbps duplex: unknown mac: <filter>
IF-ID-3: fwbr100i1 state: up speed: 10000 Mbps duplex: unknown mac: <filter>
IF-ID-4: fwln100i0 state: up speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-5: fwln100i1 state: up speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-6: fwpr100p0 state: up speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-7: fwpr100p1 state: up speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-8: tap100i0 state: unknown speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-9: tap100i1 state: unknown speed: 10000 Mbps duplex: full mac: <filter>
IF-ID-10: vmbr0 state: up speed: 10000 Mbps duplex: unknown mac: <filter>
IF-ID-11: vmbr1 state: up speed: 10000 Mbps duplex: unknown mac: <filter>
Drives:
Local Storage: total: 30.02 TiB used: 3.54 TiB (11.8%)
ID-1: /dev/nvme0n1 vendor: Kingston model: SNV2S1000G size: 931.51 GiB speed: 63.2 Gb/s
lanes: 4 serial: <filter> temp: 41.9 C
ID-2: /dev/sda vendor: Seagate model: ST16000NM001G-2KK103 size: 14.55 TiB speed: 6.0 Gb/s
serial: <filter>
ID-3: /dev/sdb vendor: Seagate model: ST16000NM001G-2KK103 size: 14.55 TiB speed: 6.0 Gb/s
serial: <filter>
Partition:
ID-1: / size: 63 GiB used: 3.83 GiB (6.1%) fs: btrfs dev: /dev/nvme0n1p3
ID-2: /boot/efi size: 1022 MiB used: 11.6 MiB (1.1%) fs: vfat dev: /dev/nvme0n1p2
Swap:
Alert: No swap data was found.
Sensors:
System Temperatures: cpu: 61.0 C mobo: N/A
Fan Speeds (RPM): N/A
Info:
Processes: 275 Uptime: 4h 40m Memory: 62.65 GiB used: 10.95 GiB (17.5%) Init: systemd v: 252
target: graphical (5) default: graphical Compilers: N/A Packages: pm: dpkg pkgs: 813 Shell: Sudo
v: 1.9.13p3 running-in: pty pts/1 inxi: 3.3.26
```
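For scale, Proxmox expresses the VM's `memory: 49152` in MiB. Compared with the 62.65 GiB total that inxi reports for the host (which shows no swap):

$$
\frac{49152\ \text{MiB}}{1024\ \text{MiB/GiB}} = 48\ \text{GiB},\qquad
\frac{48\ \text{GiB}}{62.65\ \text{GiB}} \approx 0.77,\qquad
62.65\ \text{GiB} - 48\ \text{GiB} = 14.65\ \text{GiB}
$$

That is, this single VM is assigned roughly three quarters of the host's RAM, leaving about 14.65 GiB for the host itself and anything else running on it.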

More information:

- The host is not affected

- I can `sudo qm stop 100` and then start the VM again

- The guest VM is Ubuntu 24.04, used for running Docker containers

- I had no problems previously running a similar container (Ubuntu 22.04) with many Docker containers on Proxmox VE 7

Stuff I've tried:

- Pinning kernel 6.5.13-5-pve

- Looking for the specific error messages `kvm: Desc next is 3` and `Reset to device, \Device\RaidPort4, was issued`, reported in this post and this GitHub issue (iothread and VirtIO SCSI). I didn't find either message in any of my logs.

- Specifying `nfsvers=3` in the guest NFS mounts

- The `run-docker-runtime\x2drunc-moby` messages are apparently normal.

Any ideas?
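Regarding the NFSv3 mount option under "Stuff I've tried": an option only matters if it took effect. One way to confirm the negotiated NFS version inside the guest is `/proc/mounts`, where the kernel lists each NFS mount with its effective options, including `vers=`. The sketch below is a minimal, hypothetical helper (`nfs_versions` is an illustrative name); it only reads `/proc/mounts` and changes nothing.

```python
import sys
from typing import Dict, Optional


def nfs_versions(mounts_text: str) -> Dict[str, Optional[str]]:
    """Map each NFS mount point in /proc/mounts-format text to its 'vers=' option."""
    result: Dict[str, Optional[str]] = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        _device, mountpoint, fstype, options = fields[:4]
        if fstype not in ("nfs", "nfs4"):
            continue
        # Options look like "rw,relatime,vers=3,hard": split into key/value pairs,
        # treating bare flags such as "rw" as keys with an empty value.
        opts = dict(o.split("=", 1) if "=" in o else (o, "") for o in options.split(","))
        result[mountpoint] = opts.get("vers")
    return result


if __name__ == "__main__":
    try:
        with open("/proc/mounts") as f:
            mounts = f.read()
    except OSError as exc:
        print(f"cannot read /proc/mounts: {exc}", file=sys.stderr)
    else:
        for mountpoint, vers in nfs_versions(mounts).items():
            print(f"{mountpoint}: vers={vers}")
```

An NFSv3 mount should appear with filesystem type `nfs` and `vers=3`; NFSv4 mounts use type `nfs4`.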