Proxmox VE 8.0 released!

- Thread starter martin

- Start date

I am currently using the r8168-dkms kernel driver. Does anyone know if the driver is rebuilt for the new kernel whenever I do a kernel update? Kernel 6.2.16-8 with its header is waiting in my update pipeline.

Also: Doesn't it make sense to add "non-free" to the security source as well?

The driver is rebuilt on every kernel update (as long as the headers are available). The non-free component is only needed to be able to download the r8168-dkms driver; otherwise the package can't be found.
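To check this on a given host, one option is to confirm that the headers for the target kernel are present and then ask DKMS what it has built. This is only a sketch: the helper name `headers_present` and its optional root-prefix argument are made up for illustration, the `-pve` suffix on the kernel version is an assumption, and it relies on the usual convention that headers are reachable through /lib/modules/<version>/build.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox or DKMS): succeeds if the build
# tree for kernel version $1 exists. $2 is an optional root prefix, mainly
# useful for testing.
headers_present() {
    kver=$1
    root=${2:-}
    [ -e "$root/lib/modules/$kver/build" ]
}

# Example: check the pending kernel from the post (version string assumed).
if headers_present "6.2.16-8-pve"; then
    echo "headers found for 6.2.16-8-pve"
else
    echo "no headers found for 6.2.16-8-pve"
fi

# 'dkms status' lists each registered module and the kernels it is built for.
if command -v dkms >/dev/null 2>&1; then
    dkms status
fi
```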

Hi all,

I've been using Proxmox 7.4 for about a year, and today, I've installed Proxmox 8.0 on a new OVH dedicated server. However, I've encountered an issue. When I access the shell and type in:

Bash:

crontab -e

bash: crontab: command not found

Has anyone else experienced this issue?

Is it possible that cron is not available by default on Debian 12 or Proxmox 8.0?

Thank you for your assistance!
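A quick way to tell whether the command is simply not installed is to test for it on the PATH. The sketch below uses a hypothetical helper named `have_cmd`; the package hint assumes a Debian-based system, where the `crontab` command is shipped by the `cron` package.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the named command is on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd crontab; then
    echo "crontab is available"
else
    # On Debian the crontab command comes from the 'cron' package.
    echo "crontab not found; 'apt install cron' should provide it"
fi
```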

I'm getting this WARNING when running pve7to8 --full

Code:

WARN: Found at least one CT (100) which does not support running in a unified cgroup v2 layout

Consider upgrading the Containers distro or set systemd.unified_cgroup_hierarchy=0 in the Proxmox VE hosts' kernel cmdline! Skipping further CT compat checks.

while if I check the systemd version

Code:

[root@proxy ~]# systemctl --version

systemd 253 (253.7-1.fc38)

+PAM +AUDIT +SELINUX -APPARMOR +IMA +SMACK +SECCOMP -GCRYPT +GNUTLS +OPENSSL +ACL +BLKID +CURL +ELFUTILS +FIDO2 +IDN2 -IDN -IPTC +KMOD +LIBCRYPTSETUP +LIBFDISK +PCRE2 +PWQUALITY +P11KIT +QRENCODE +TPM2 +BZIP2 +LZ4 +XZ +ZLIB +ZSTD +BPF_FRAMEWORK +XKBCOMMON +UTMP +SYSVINIT default-hierarchy=unified

The CT is running Fedora 38.

What should I do?

Hi,

are you running the latest version of 7.x? There is a patch in pve-manager >= 7.4-14 for improving detection: https://git.proxmox.com/?p=pve-manager.git;a=commit;h=591f411f729e2f9c2f1fd45540a4d777c16b3245

If you are running a version with the patch, please share the container configuration.

EDIT: apparently, the original patch did not work for Fedora 38: https://forum.proxmox.com/threads/pve7to8-cgroup-warning-incorrect.128721/post-584609
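When comparing what the checker reports against what the container actually runs, it can help to pull just the systemd version number out of the `systemctl --version` output. The helper name `systemd_major` below is hypothetical, and the sketch only parses the first line; it says nothing about how pve7to8 itself performs its detection.

```shell
#!/bin/sh
# Hypothetical helper: read 'systemctl --version' output on stdin and print
# the version number from the first line.
systemd_major() {
    awk 'NR == 1 && $1 == "systemd" { print $2 }'
}

# Example with the first line quoted in the post; prints 253.
echo 'systemd 253 (253.7-1.fc38)' | systemd_major

# On a Proxmox VE host the same could be fed from the container directly
# (CT 100 is the ID named in the warning):
#   pct exec 100 -- systemctl --version | systemd_major
```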

Hello. I get error messages regarding the squashfs command (the installer says that there is an error in line 870 of the Install.pm file). The error pops up and the installation breaks.

I get a similar error (different line) on both 8.0.1 and 8.0.2. I didn't have such an error when installing version 7.3 of Proxmox.

My hardware: HP 600 G3, 2 NVMe disks (one is the target for installation), 2 HDDs (2.5 inch), 24 GB RAM. Installation medium: USB disk (sha256 is correct).

I have the same problem when using Hetzner KVM. I downloaded the ISO file from the official website, zfs file system (RAID0)..... I doubt that you should use a flash drive....

Strangely enough, when re-installed, it was successful.... Thanks
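For reference, the checksum comparison mentioned above can be sketched as follows. The helper name `sha256_matches` is hypothetical, and the file name and digest in the usage comment are placeholders rather than real values.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the SHA-256 of file $1 equals the hex
# digest given as $2.
sha256_matches() {
    actual=$(sha256sum "$1" | awk '{ print $1 }')
    [ "$actual" = "$2" ]
}

# Usage (placeholders, not real values):
#   sha256_matches proxmox-ve.iso "<digest from the download page>" && echo OK
```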

I have migrated several VMs from Proxmox 7 to Proxmox 8, and none of the VMs has network afterwards. I need to reboot all VMs.

So live migration is not possible. So sad...

Can you give more details here?

E.g., about your

- used hardware (network cards, CPUs, server vendor, ...) from both source and target nodes (are they homogenous/identical?)

- which kernel you used on Proxmox VE 7

- if it had the latest software updates (can you attach /var/log/apt/history.log and /var/log/apt/history.log.1?)

- if the hosts had subscriptions with access to the production-ready enterprise repository

- what the VM configs looked like

- any errors in the VM guest OS or on the Proxmox VE host logs?
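Most of the items in this list can be collected in one go on each node. The following is a minimal sketch, not an official tool: the function name `collect_report` and the output file names are invented here, and any command that is not installed is simply skipped.

```shell
#!/bin/sh
# Hypothetical helper: gather the requested details into directory $1.
# $2 is an optional VM ID. Tools that are missing are skipped.
collect_report() {
    out=$1
    vmid=${2:-}
    mkdir -p "$out" || return 1

    # Running kernel.
    uname -r > "$out/kernel.txt"

    # Package versions, if this is a Proxmox VE host.
    if command -v pveversion >/dev/null 2>&1; then
        pveversion -v > "$out/pveversion.txt" 2>&1
    fi

    # VM configuration, if a VM ID was given.
    if [ -n "$vmid" ] && command -v qm >/dev/null 2>&1; then
        qm config "$vmid" --current > "$out/vm-$vmid.conf" 2>&1
    fi

    # APT history, as asked for in the list above.
    for f in /var/log/apt/history.log /var/log/apt/history.log.1; do
        [ -r "$f" ] && cp "$f" "$out/"
    done
    return 0
}

# Usage: collect_report /tmp/pve-report 11750
```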

The latest available software was installed on all servers, but they were not rebooted into the latest kernel. I also tried to migrate from kernel 5.15.35-2-pve and from 5.15.74-1-pve.

The same hardware is used. All servers have a subscription.

# pveversion -v

proxmox-ve: 7.4-1 (running kernel: 5.15.74-1-pve)

pve-manager: 7.4-16 (running version: 7.4-16/0f39f621)

pve-kernel-5.15: 7.4-4

pve-kernel-5.15.108-1-pve: 5.15.108-2

pve-kernel-5.15.107-2-pve: 5.15.107-2

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 15.2.16-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4.1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-2

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.7

libpve-storage-perl: 7.4-3

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.3-1

proxmox-backup-file-restore: 2.4.3-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.2

proxmox-widget-toolkit: 3.7.3

pve-cluster: 7.3-3

pve-container: 4.4-6

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-4~bpo11+1

pve-firewall: 4.3-5

pve-firmware: 3.6-5

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-2

qemu-server: 7.4-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.11-pve1

and pve8:

# pveversion -v

proxmox-ve: 8.0.2 (running kernel: 6.2.16-3-pve)

pve-manager: 8.0.4 (running version: 8.0.4/d258a813cfa6b390)

pve-kernel-6.2: 8.0.5

proxmox-kernel-helper: 8.0.3

proxmox-kernel-6.2.16-10-pve: 6.2.16-10

proxmox-kernel-6.2: 6.2.16-10

proxmox-kernel-6.2.16-6-pve: 6.2.16-7

pve-kernel-6.2.16-3-pve: 6.2.16-3

ceph-fuse: 17.2.6-pve1+3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.25-pve1

libproxmox-acme-perl: 1.4.6

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.0

libpve-access-control: 8.0.4

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.0.7

libpve-guest-common-perl: 5.0.3

libpve-http-server-perl: 5.0.4

libpve-rs-perl: 0.8.4

libpve-storage-perl: 8.0.2

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-2

proxmox-backup-client: 3.0.2-1

proxmox-backup-file-restore: 3.0.2-1

proxmox-kernel-helper: 8.0.3

proxmox-mail-forward: 0.2.0

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.0.6

pve-cluster: 8.0.2

pve-container: 5.0.4

pve-docs: 8.0.4

pve-edk2-firmware: 3.20230228-4

pve-firewall: 5.0.3

pve-firmware: 3.7-1

pve-ha-manager: 4.0.2

pve-i18n: 3.0.5

pve-qemu-kvm: 8.0.2-5

pve-xtermjs: 4.16.0-3

qemu-server: 8.0.6

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.1.12-pve1

# qm config 11750 --current

acpi: 1

agent: 0

autostart: 1

boot: cdn

bootdisk: virtio0

cores: 1

cpu: qemu64,flags=+pcid;+spec-ctrl;+ssbd;+pdpe1gb;+aes

cpuunits: 1024

description: Client%3A

hotplug: 1

ide2: none,media=cdrom

kvm: 1

machine: pc-i440fx-7.2

memory: 4096

migrate_speed: 300

name: bg-resolver

net0: virtio=3E:59:18:7A:90:43,bridge=vmbr0,firewall=1,rate=12.5

numa: 0

onboot: 1

ostype: l26

protection: 1

reboot: 1

scsihw: virtio-scsi-pci

smbios1: uuid=3d314736-74bf-49ae-b702-54a0c2852f75

sockets: 2

template: 0

virtio0: local-zfs:vm-11750-disk-0,format=raw,iops_rd=3000,iops_rd_max=9000,iops_wr=2000,iops_wr_max=6000,mbps_rd=100,mbps_rd_max=1000,mbps_wr=70,mbps_wr_max=700,size=10G

vmgenid: 834aab69-8bd1-45bc-98de-5bc661c56240

I can also migrate the VM from pve8 to pve7, and it works. When I then migrate it back to pve8, it stops working until I reboot it.

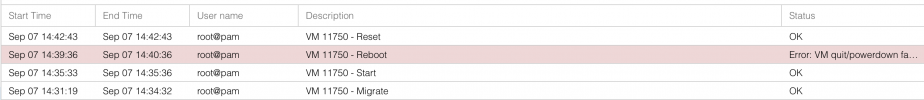

Such VMs can be rebooted. Timeout returned. Need to reset the VM.

Seems to be the VM is stuck.

You only answered half of my questions. Please open a new thread and answer them all.

"But was not rebooted from the latest kernel."

So I guess you did not follow the official upgrade guide, or at least did not run the pve7to8 checker tool, which warns about such stuff? https://pve.proxmox.com/wiki/Upgrade_from_7_to_8

There even was some fix in the kernel for hanging VMs on migrations under some specific situations a bit ago (without the full info like asked it's hard to tell if yours is one of those situations), but one needs to have both source and target up-to-date for such things to work.
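Whether a node is still running an older kernel than the newest one installed can be checked roughly as below. This is a sketch under assumptions: the helper name `newest_kernel` is hypothetical, it treats the directory names under /lib/modules as the list of installed kernels (leftover directories could skew that), and it depends on the version ordering of `sort -V`.

```shell
#!/bin/sh
# Hypothetical helper: print the highest version among the directory names
# in $1 (default /lib/modules), using version-aware sorting.
newest_kernel() {
    ls -1 "${1:-/lib/modules}" | sort -V | tail -n 1
}

running=$(uname -r)
newest=$(newest_kernel 2>/dev/null)
if [ -n "$newest" ] && [ "$running" != "$newest" ]; then
    echo "running $running, newest installed looks like $newest: reboot pending?"
fi
```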

I mean that I have several servers with pve7 with different kernel versions, but all of them are pve7.

And I have only one server with pve8, which was not upgraded but installed from the ISO a month ago.

Hello, I just tried to update to 8, following the guide. After the full upgrade on 7 I first ran

Code:

apt --purge autoremove

pve7to8 --full

pveversion

The output was

Code:

pve-manager/7.4-16/0f39f621 (running kernel: 6.2.16-11-bpo11-pve)

and pve7to8 --full resulted in all green.

At the end of the upgrade process I get:

Code:

Processing triggers for initramfs-tools (0.142) ...

update-initramfs: Generating /boot/initrd.img-6.2.16-12-pve

cryptsetup: ERROR: Couldn't resolve device rpool/ROOT/pve-1

cryptsetup: WARNING: Couldn't determine root device

Running hook script 'zz-proxmox-boot'..

Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/8515-4738

Copying kernel and creating boot-entry for 5.15.116-1-pve

Copying kernel and creating boot-entry for 6.2.16-11-bpo11-pve

Copying kernel and creating boot-entry for 6.2.16-12-pve

Processing triggers for dbus (1.14.8-2~deb12u1) ...

Processing triggers for pve-ha-manager (4.0.2) ...

Errors were encountered while processing:

linux-headers-6.1.0-11-amd64

linux-headers-amd64

E: Sub-process /usr/bin/dpkg returned an error code (1)

I'm guessing I should delete linux-headers-6.1.0-11-amd64?

But I just wanted to ask here whether it's something serious I'm not seeing. Thx.

Hi,

Code:

Processing triggers for initramfs-tools (0.142) ...

update-initramfs: Generating /boot/initrd.img-6.2.16-12-pve

cryptsetup: ERROR: Couldn't resolve device rpool/ROOT/pve-1

cryptsetup: WARNING: Couldn't determine root device

I'm not using cryptsetup so I wouldn't know, but do you usually get those complaints from cryptsetup? Those are not what apt complained about below though:

Code:

Errors were encountered while processing:

linux-headers-6.1.0-11-amd64

linux-headers-amd64

E: Sub-process /usr/bin/dpkg returned an error code (1)

If you want to check, the actual errors are likely further up in the log (see /var/log/apt/term.log).

But yes, you shouldn't need those headers. For Proxmox, the relevant header packages are proxmox-headers-<version>, not linux-headers(-<version>)-amd64.

Yes, the cryptsetup complaints are normal.

Here's my complete term.log
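To see which of the Debian header packages mentioned above are present on a host, the package list can be filtered by name. The helper name `debian_header_pkgs` is hypothetical; the sketch only lists candidates and leaves the decision to remove them to the admin.

```shell
#!/bin/sh
# Hypothetical helper: read package names on stdin and print the Debian
# linux-headers* ones (proxmox-headers-* names are not matched).
debian_header_pkgs() {
    grep -E '^linux-headers(-|$)'
}

# Usage on a real host (dpkg-query prints the package names known to dpkg):
#   dpkg-query -W -f='${Package}\n' | debian_header_pkgs
```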