# Interfaces BEFORE ifreload -a -d

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp7s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether rr:ii:uu:bb:aa:xx brd ff:ff:ff:ff:ff:ff

3: vmbr0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether rr:ii:uu:bb:aa:xx brd ff:ff:ff:ff:ff:ff

# Output of ifreload -a -d

debug: args = Namespace(all=True, currentlyup=False, CLASS=None, iflist=[], noact=False, verbose=False, debug=True, withdepends=False, perfmode=False, nocache=False, excludepats=None, usecurrentconfig=False, syslog=False, systemd=False, force=False, syntaxcheck=False, version=None, nldebug=False)

debug: creating ifupdown object ..

info: requesting link dump

info: requesting address dump

info: requesting netconf dump

debug: nlcache: reset errorq

debug: {'enable_persistent_debug_logging': 'yes', 'use_daemon': 'no', 'template_enable': '1', 'template_engine': 'mako', 'template_lookuppath': '/etc/network/ifupdown2/templates', 'default_interfaces_configfile': '/etc/network/interfaces', 'disable_cli_interfacesfile': '0', 'addon_syntax_check': '0', 'addon_scripts_support': '1', 'addon_python_modules_support': '1', 'multiple_vlan_aware_bridge_support': '1', 'ifquery_check_success_str': 'pass', 'ifquery_check_error_str': 'fail', 'ifquery_check_unknown_str': '', 'ifquery_ifacename_expand_range': '0', 'link_master_slave': '1', 'delay_admin_state_change': '0', 'ifreload_down_changed': '0', 'addr_config_squash': '0', 'ifaceobj_squash': '0', 'adjust_logical_dev_mtu': '1', 'state_dir': '/run/network/'}

info: loading builtin modules from ['/usr/share/ifupdown2/addons']

info: module openvswitch not loaded (module init failed: no /usr/bin/ovs-vsctl found)

info: module openvswitch_port not loaded (module init failed: no /usr/bin/ovs-vsctl found)

info: module ppp not loaded (module init failed: no /usr/bin/pon found)

info: module batman_adv not loaded (module init failed: no /usr/sbin/batctl found)

debug: bridge: using reserved vlan range (0, 0)

debug: bridge: init: warn_on_untagged_bridge_absence=False

debug: bridge: init: vxlan_bridge_default_igmp_snooping=None

debug: bridge: init: arp_nd_suppress_only_on_vxlan=False

debug: bridge: init: bridge_always_up_dummy_brport=None

info: executing /sbin/sysctl net.bridge.bridge-allow-multiple-vlans

debug: bridge: init: multiple vlans allowed True

info: module mstpctl not loaded (module init failed: no /sbin/mstpctl found)

info: executing /bin/ip rule show

info: executing /bin/ip -6 rule show

info: address: using default mtu 1500

info: address: max_mtu undefined

info: executing /sbin/sysctl net.ipv6.conf.all.accept_ra

info: executing /sbin/sysctl net.ipv6.conf.all.autoconf

info: executing /usr/sbin/ip vrf id

info: mgmt vrf_context = False

debug: dhclient: dhclient_retry_on_failure set to 0

info: executing /bin/ip addr help

info: address metric support: OK

info: module ppp not loaded (module init failed: no /usr/bin/pon found)

info: module mstpctl not loaded (module init failed: no /sbin/mstpctl found)

info: module batman_adv not loaded (module init failed: no /usr/sbin/batctl found)

info: module openvswitch_port not loaded (module init failed: no /usr/bin/ovs-vsctl found)

info: module openvswitch not loaded (module init failed: no /usr/bin/ovs-vsctl found)

info: looking for user scripts under /etc/network

info: loading scripts under /etc/network/if-pre-up.d ...

info: loading scripts under /etc/network/if-up.d ...

info: loading scripts under /etc/network/if-post-up.d ...

info: loading scripts under /etc/network/if-pre-down.d ...

info: loading scripts under /etc/network/if-down.d ...

info: loading scripts under /etc/network/if-post-down.d ...

info: 'link_master_slave' is set. slave admin state changes will be delayed till the masters admin state change.

info: using mgmt iface default prefix eth

debug: reloading interface config ..

info: processing interfaces file /etc/network/interfaces

debug: processing sourced line ..'source /etc/network/interfaces.d/*'

debug: vmbr0: evaluating port expr '['enp7s0']'

info: reload: scheduling up on interfaces: ['lo', 'enp7s0', 'vmbr0']

debug: scheduling '['pre-up', 'up', 'post-up']' for ['lo', 'enp7s0', 'vmbr0']

debug: dependency graph {

lo : []

enp7s0 : []

vmbr0 : ['enp7s0']

}

debug: graph roots (interfaces that dont have dependents): ['lo', 'vmbr0']

info: lo: running ops ...

debug: lo: pre-up : running module xfrm

debug: lo: pre-up : running module link

debug: lo: pre-up : running module bond

debug: lo: pre-up : running module vlan

debug: lo: pre-up : running module vxlan

debug: lo: pre-up : running module usercmds

debug: lo: pre-up : running module bridge

debug: lo: pre-up : running module bridgevlan

debug: lo: pre-up : running module tunnel

debug: lo: pre-up : running module vrf

debug: lo: pre-up : running module ethtool

debug: lo: pre-up : running module auto

debug: lo: pre-up : running module address

info: executing /sbin/sysctl net.mpls.conf.lo.input=0

info: executing /sbin/sysctl net.ipv6.conf.lo.accept_ra=0

info: executing /sbin/sysctl net.ipv6.conf.lo.autoconf=0

debug: lo: up : running module dhcp

debug: lo: up : running module address

debug: lo: up : running module addressvirtual

debug: lo: up : running module usercmds

debug: lo: up : running script /etc/network/if-up.d/postfix

info: executing /etc/network/if-up.d/postfix

debug: lo: up : running script /etc/network/if-up.d/ntpsec-ntpdate

info: executing /etc/network/if-up.d/ntpsec-ntpdate

debug: lo: post-up : running module usercmds

debug: lo: statemanager sync state pre-up

debug: vmbr0: found dependents ['enp7s0']

info: enp7s0: running ops ...

debug: enp7s0: pre-up : running module xfrm

debug: enp7s0: pre-up : running module link

debug: enp7s0: pre-up : running module bond

debug: enp7s0: pre-up : running module vlan

debug: enp7s0: pre-up : running module vxlan

debug: enp7s0: pre-up : running module usercmds

debug: enp7s0: pre-up : running module bridge

info: enp7s0: not enslaved to bridge vmbr0: ignored for now

debug: enp7s0: pre-up : running module bridgevlan

debug: enp7s0: pre-up : running module tunnel

debug: enp7s0: pre-up : running module vrf

debug: enp7s0: pre-up : running module ethtool

debug: enp7s0: pre-up : running module auto

debug: enp7s0: pre-up : running module address

info: executing /sbin/sysctl net.mpls.conf.enp7s0.input=0

debug: enp7s0: up : running module dhcp

debug: enp7s0: up : running module address

debug: enp7s0: up : running module addressvirtual

debug: enp7s0: up : running module usercmds

debug: enp7s0: up : running script /etc/network/if-up.d/postfix

info: executing /etc/network/if-up.d/postfix

debug: enp7s0: up : running script /etc/network/if-up.d/ntpsec-ntpdate

info: executing /etc/network/if-up.d/ntpsec-ntpdate

debug: enp7s0: post-up : running module usercmds

debug: enp7s0: statemanager sync state pre-up

info: vmbr0: running ops ...

debug: vmbr0: pre-up : running module xfrm

debug: vmbr0: pre-up : running module link

debug: vmbr0: pre-up : running module bond

debug: vmbr0: pre-up : running module vlan

debug: vmbr0: pre-up : running module vxlan

debug: vmbr0: pre-up : running module usercmds

debug: vmbr0: pre-up : running module bridge

info: vmbr0: bridge already exists

info: vmbr0: applying bridge settings

info: vmbr0: set bridge-fd 0 (cache 1500)

info: vmbr0: reset bridge-hashel to default: 4

info: vmbr0: reset bridge-hashmax to default: 512

info: reading '/sys/class/net/vmbr0/bridge/stp_state'

info: vmbr0: netlink: ip link set dev vmbr0 type bridge (with attributes)

debug: attributes: {1: 0, 26: 4, 27: 512}

debug: vmbr0: evaluating port expr '['enp7s0']'

info: writing '1' to file /proc/sys/net/ipv6/conf/enp7s0/disable_ipv6

info: executing /bin/ip -force -batch - [link set dev enp7s0 master vmbr0]

info: vmbr0: applying bridge port configuration: ['enp7s0']

info: vmbr0: applying bridge configuration specific to ports

info: vmbr0: processing bridge config for port enp7s0

info: executing /bin/ip -force -batch - [link set dev enp7s0 up]

debug: vmbr0: pre-up : running module bridgevlan

debug: vmbr0: pre-up : running module tunnel

debug: vmbr0: pre-up : running module vrf

debug: vmbr0: pre-up : running module ethtool

debug: vmbr0: pre-up : running module auto

debug: vmbr0: pre-up : running module address

info: executing /sbin/sysctl net.mpls.conf.vmbr0.input=0

info: vmbr0: netlink: ip link set dev vmbr0 address rr:ii:uu:bb:aa:xx

info: vmbr0: netlink: ip addr add 1.2.3.4/26 dev vmbr0

info: writing '0' to file /proc/sys/net/ipv4/conf/vmbr0/arp_accept

info: vmbr0: netlink: ip link set dev vmbr0 up

debug: vmbr0: up : running module dhcp

debug: vmbr0: up : running module address

info: executing /bin/ip route replace default via 1.2.3.193 proto kernel dev vmbr0 onlink

debug: vmbr0: up : running module addressvirtual

debug: vmbr0: up : running module usercmds

debug: vmbr0: up : running script /etc/network/if-up.d/postfix

info: executing /etc/network/if-up.d/postfix

debug: vmbr0: up : running script /etc/network/if-up.d/ntpsec-ntpdate

info: executing /etc/network/if-up.d/ntpsec-ntpdate

debug: vmbr0: post-up : running module usercmds

info: executing ip route add 1.2.3.1/26 via 1.2.3.193 dev vmbr0

info: executing ip route add 1.2.2.1/32 dev vmbr0

debug: vmbr0: statemanager sync state pre-up

debug: saving state ..

info: exit status 0
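
For anyone reading the log above: the "dependency graph" and "graph roots" lines explain why the ops run in the order lo, enp7s0, vmbr0. The following is a minimal Python sketch of that idea, using the graph exactly as printed in the debug output. The helper names `find_roots` and `run_order` are hypothetical, and this is not ifupdown2's actual scheduler, only an illustration of "dependencies first".

```python
# Dependency graph as printed in the debug output above.
graph = {
    "lo": [],
    "enp7s0": [],
    "vmbr0": ["enp7s0"],
}


def find_roots(graph):
    """Return interfaces that no other interface lists as a dependency."""
    depended_on = {dep for deps in graph.values() for dep in deps}
    return [name for name in graph if name not in depended_on]


def run_order(graph):
    """Walk depth-first from each root, emitting dependencies before the
    interface that needs them (hypothetical helper, for illustration)."""
    order, seen = [], set()

    def visit(name):
        if name in seen:
            return
        seen.add(name)
        for dep in graph[name]:
            visit(dep)
        order.append(name)

    for root in find_roots(graph):
        visit(root)
    return order


roots = find_roots(graph)
order = run_order(graph)
print(roots)  # ['lo', 'vmbr0']
print(order)  # ['lo', 'enp7s0', 'vmbr0']
```

The result matches the log: enp7s0 is handled before vmbr0 because the bridge lists it as a port.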

# Interfaces AFTER ifreload -a -d

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp7s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000

link/ether rr:ii:uu:bb:aa:xx brd ff:ff:ff:ff:ff:ff

3: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether rr:ii:uu:bb:aa:xx brd ff:ff:ff:ff:ff:ff

inet 1.2.3.4/26 scope global vmbr0

valid_lft forever preferred_lft forever

inet6 fe80::yyy:xxx.../64 scope link

valid_lft forever preferred_lft forever
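
To make the before/after difference easier to see, here is a small Python sketch that pulls the `state` field out of the `ip addr` header lines and reports which links changed. The header lines are copied from the two listings in this post; the regex and the function names `link_states` and `changed` are my own assumptions and only cover the header format shown here.

```python
import re

# Header lines copied from the BEFORE and AFTER listings in this post.
before = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
2: enp7s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
3: vmbr0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
"""
after = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
2: enp7s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
3: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
"""

# Matches "<index>: <name>: <FLAGS> ... state <STATE>" on a single line.
HEADER = re.compile(
    r"^\d+: (?P<name>[^:]+): <[^>]*>.* state (?P<state>\S+)", re.MULTILINE
)


def link_states(text):
    """Map interface name to its operational state."""
    return {m["name"]: m["state"] for m in HEADER.finditer(text)}


def changed(before_text, after_text):
    """Return {name: (old_state, new_state)} for links whose state differs."""
    old, new = link_states(before_text), link_states(after_text)
    return {n: (old[n], new[n]) for n in old if n in new and old[n] != new[n]}


changes = changed(before, after)
print(changes)  # {'enp7s0': ('DOWN', 'UP'), 'vmbr0': ('DOWN', 'UP')}
```

So the manual `ifreload -a -d` brings both enp7s0 and vmbr0 from DOWN to UP, while lo is unchanged.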

#######

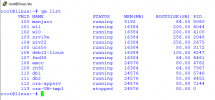

# journalctl -u networking.service

-- Boot 472544a572114ac3bb34c6c281aab866 --

Jun 24 23:20:55 hv3 systemd[1]: Starting networking.service - Network initialization...

Jun 24 23:20:55 hv3 networking[750]: networking: Configuring network interfaces

Jun 24 23:21:05 hv3 systemd[1]: networking.service: start operation timed out. Terminating.

Jun 24 23:21:05 hv3 systemd[1]: networking.service: Main process exited, code=killed, status=15/TERM

Jun 24 23:21:35 hv3 systemd[1]: networking.service: State 'final-sigterm' timed out. Killing.

Jun 24 23:21:35 hv3 systemd[1]: networking.service: Killing process 830 (python3) with signal SIGKILL.

Jun 24 23:21:35 hv3 systemd[1]: networking.service: Failed with result 'timeout'.

Jun 24 23:21:35 hv3 systemd[1]: Failed to start networking.service - Network initialization.
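
The timestamps in the journal excerpt show how long systemd waited before giving up. Here is a minimal Python sketch that works out the intervals from three of the lines above. It assumes all lines fall on the same day, so only the clock time is parsed; `clock_time` is a hypothetical helper name.

```python
from datetime import datetime

# Three lines copied from the journalctl excerpt in this post.
journal = """\
Jun 24 23:20:55 hv3 systemd[1]: Starting networking.service - Network initialization...
Jun 24 23:21:05 hv3 systemd[1]: networking.service: start operation timed out. Terminating.
Jun 24 23:21:35 hv3 systemd[1]: networking.service: Killing process 830 (python3) with signal SIGKILL.
"""


def clock_time(line):
    """Parse the HH:MM:SS part of a short-format journal line.

    Assumption: every line is from the same day, so the date is ignored.
    """
    return datetime.strptime(line[7:15], "%H:%M:%S")


start, timed_out, killed = (clock_time(l) for l in journal.splitlines())

start_timeout = (timed_out - start).total_seconds()
kill_delay = (killed - timed_out).total_seconds()
print(start_timeout, kill_delay)  # 10.0 30.0
```

In other words, the start job was terminated 10 seconds after it began, and the leftover python3 process was killed 30 seconds after that.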