It looks like this is changed with the cpupower tool:

Thanks for the update, if it is CPU scheduling then that's fine - happy for it to be power saving.

Having said that, is there a straightforward way to change the scheduler if we get issues?

Thanks!

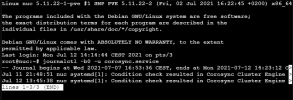

Code:

cpupower frequency-set -g GOVERNOR

# Examples

cpupower frequency-set -g performance

cpupower frequency-set -g schedutil
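
Before switching, it may help to check which governor is currently active and which ones the driver offers. Below is a minimal sketch that reads the standard cpufreq sysfs files. The function name `show_governor` and the `CPUFREQ_ROOT` override are made up for illustration and are not part of cpupower; this also assumes the kernel exposes cpufreq for the CPU in question.

```shell
#!/bin/sh
# Hypothetical helper (not part of cpupower): print the current and
# available frequency governors for one CPU by reading sysfs.
# CPUFREQ_ROOT is an assumed override, handy for testing; it defaults
# to the standard sysfs location.
show_governor() {
    cpu="${1:-0}"
    dir="${CPUFREQ_ROOT:-/sys/devices/system/cpu}/cpu${cpu}/cpufreq"

    # Bail out if the kernel does not expose cpufreq for this CPU.
    if [ ! -r "$dir/scaling_governor" ]; then
        echo "cpufreq not available for cpu${cpu}" >&2
        return 1
    fi

    echo "current: $(cat "$dir/scaling_governor")"

    # Some drivers may not list the available governors; print them if present.
    if [ -r "$dir/scaling_available_governors" ]; then
        echo "available: $(cat "$dir/scaling_available_governors")"
    fi
}
```

Calling `show_governor 0` prints the governor for cpu0. `cpupower frequency-info -p` should report the same current policy, and `cpupower frequency-set` itself needs to be run as root.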